A Note on Connecting Barlow Twins with Negative-Sample-Free Contrastive Learning

In this report, we relate the algorithmic design of the Barlow Twins method to the Hilbert-Schmidt Independence Criterion (HSIC), thus establishing it as a contrastive learning approach that is free of negative samples. Through this perspective, we argue that Barlow Twins (and thus the class of negative-sample-free contrastive learning methods) suggests a way to bridge the two major families of self-supervised learning philosophies: non-contrastive and contrastive approaches. In particular, Barlow Twins exemplifies how to combine the best practices of both worlds: it avoids the need for large training batch sizes and negative sample pairing (like non-contrastive methods), and it avoids symmetry-breaking network designs (like contrastive methods).
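For readers unfamiliar with the objective under discussion, the following is a minimal NumPy sketch of the Barlow Twins loss as it is commonly stated: the cross-correlation matrix between the embeddings of two augmented views is pushed toward the identity. It is an illustration only, not the report's implementation. The function name `barlow_twins_loss`, the argument names, and the default `lam=5e-3` are assumptions chosen for this example, and the sketch does not reproduce the HSIC derivation itself.

```python
import numpy as np


def barlow_twins_loss(z_a, z_b, lam=5e-3, eps=1e-12):
    """Illustrative Barlow Twins objective for two (n, d) embedding batches.

    z_a, z_b: embeddings of two augmented views of the same n samples.
    lam: weight on the off-diagonal (redundancy-reduction) term; assumed value.
    eps: small constant guarding against division by zero; assumed value.
    """
    n = z_a.shape[0]

    # Standardize each feature dimension across the batch.
    z_a = (z_a - z_a.mean(axis=0)) / (z_a.std(axis=0) + eps)
    z_b = (z_b - z_b.mean(axis=0)) / (z_b.std(axis=0) + eps)

    # Empirical cross-correlation matrix between the two views, shape (d, d).
    c = z_a.T @ z_b / n

    # Invariance term: diagonal entries should be close to 1.
    diag = np.diag(c)
    on_diag = np.sum((diag - 1.0) ** 2)

    # Redundancy-reduction term: off-diagonal entries should be close to 0.
    off_diag = np.sum(c ** 2) - np.sum(diag ** 2)

    return on_diag + lam * off_diag
```

Note that the loss only uses pairs of views of the same sample: no negative pairs are formed, and both views pass through the same computation, so no symmetry-breaking design is involved.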

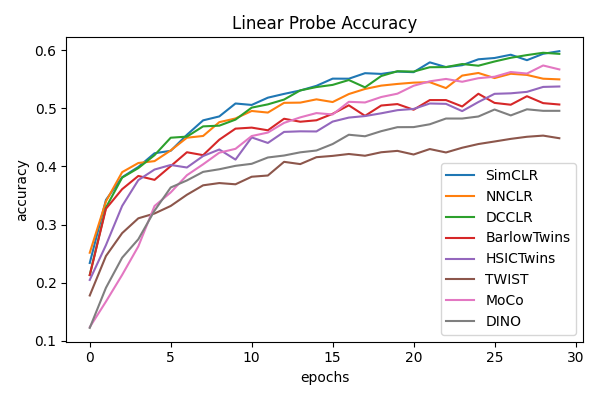

CIFAR-10

Tiny ImageNet

Tiny ImageNet