A Simple Baseline for Multi-Camera 3D Object Detection

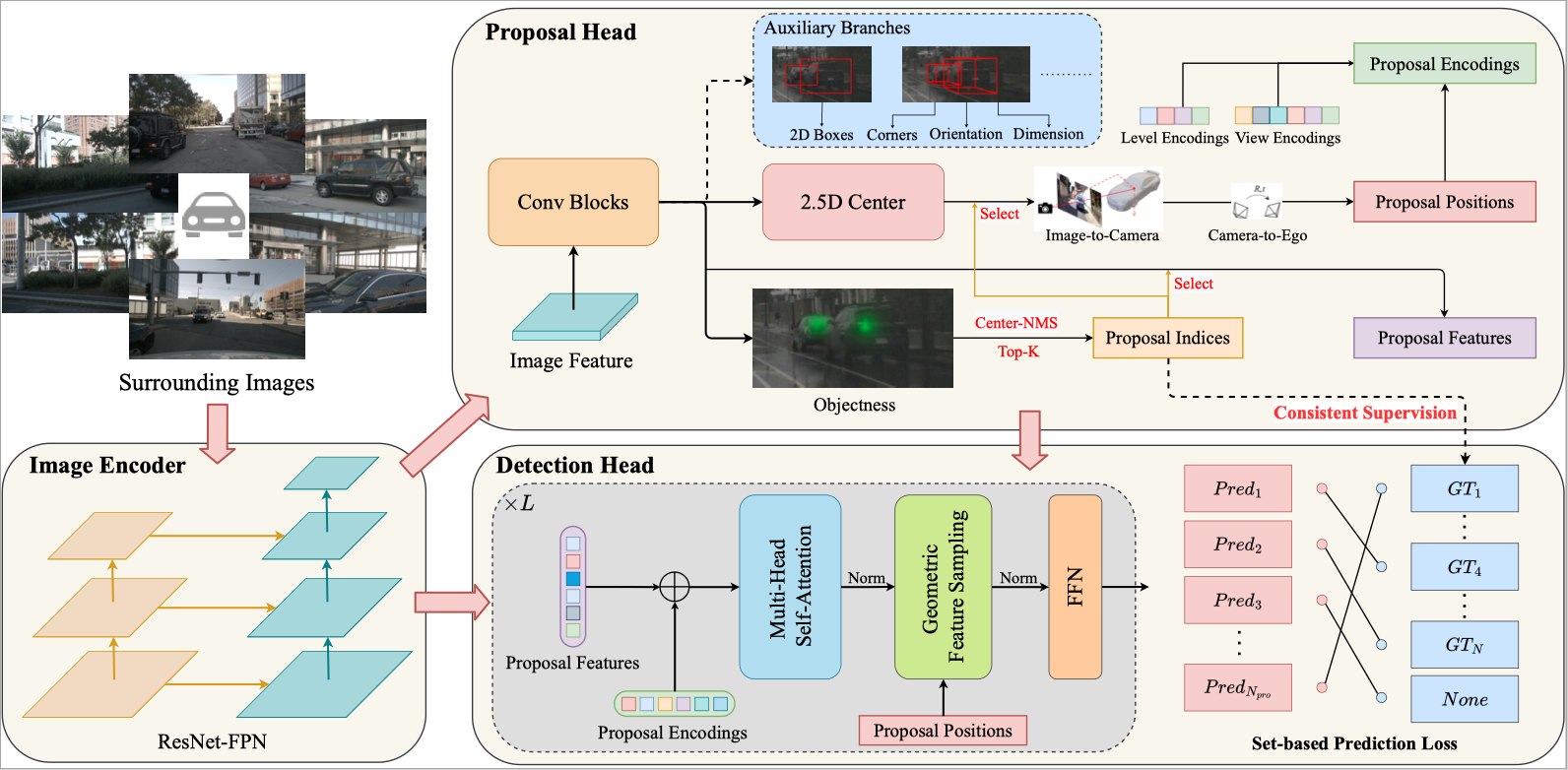

3D object detection with surrounding cameras is a promising direction for autonomous driving. In this paper, we present SimMOD, a Simple baseline for Multi-camera Object Detection. To incorporate multi-view information while building on previous work in monocular 3D object detection, the framework is built on sample-wise object proposals and operates in two stages. First, we extract multi-scale features and generate perspective object proposals on each monocular image. Second, the multi-view proposals are aggregated and then iteratively refined with multi-view, multi-scale visual features in the DETR3D style. The refined proposals are decoded end-to-end into the detection results. To further boost performance, we add auxiliary branches alongside proposal generation to enhance feature learning. We also design target filtering and teacher forcing methods to promote the consistency of the two-stage training. Extensive experiments on the nuScenes 3D object detection benchmark demonstrate the effectiveness of SimMOD, which achieves new state-of-the-art performance. Code will be available at https://github.com/zhangyp15/SimMOD.
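The two-stage flow described in the abstract can be illustrated with a toy sketch. This is not the SimMOD implementation: the `Proposal` type, the score-based top-k merge in `aggregate_proposals`, and the `predict_offset` callback standing in for the learned refinement layers are all hypothetical names and simplifications, assumed here only to make the data flow concrete.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Point3D = Tuple[float, float, float]


@dataclass
class Proposal:
    center: Point3D  # 3D center in a shared frame (assumed for this sketch)
    score: float     # confidence from the per-image proposal head
    view: int        # index of the camera that produced the proposal


def aggregate_proposals(per_view: List[List[Proposal]], top_k: int) -> List[Proposal]:
    """Merge proposals from all cameras and keep the top_k by score.

    Score-based top-k is an assumption of this sketch, not a detail
    stated in the abstract.
    """
    merged = [p for view in per_view for p in view]
    merged.sort(key=lambda p: p.score, reverse=True)
    return merged[:top_k]


def refine(
    proposals: List[Proposal],
    predict_offset: Callable[[Proposal], Point3D],
    num_iters: int,
) -> List[Proposal]:
    """Iteratively update each proposal center by a predicted offset.

    predict_offset is a placeholder for a learned layer that would
    sample multi-view, multi-scale features around the proposal.
    """
    current = list(proposals)
    for _ in range(num_iters):
        updated = []
        for p in current:
            dx, dy, dz = predict_offset(p)
            x, y, z = p.center
            updated.append(Proposal((x + dx, y + dy, z + dz), p.score, p.view))
        current = updated
    return current


if __name__ == "__main__":
    views = [
        [Proposal((1.0, 0.0, 0.0), 0.9, 0), Proposal((5.0, 2.0, 0.0), 0.2, 0)],
        [Proposal((0.0, 3.0, 0.0), 0.7, 1)],
    ]
    kept = aggregate_proposals(views, top_k=2)
    refined = refine(kept, lambda p: (1.0, 0.0, 0.0), num_iters=3)
    for p in refined:
        print(p)
```

In a real detector the refinement step would be a stack of learned decoder layers rather than a fixed callback; the sketch only shows how per-view proposals become a shared set that is updated over several iterations.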

nuScenes