A Robust Volumetric Transformer for Accurate 3D Tumor Segmentation

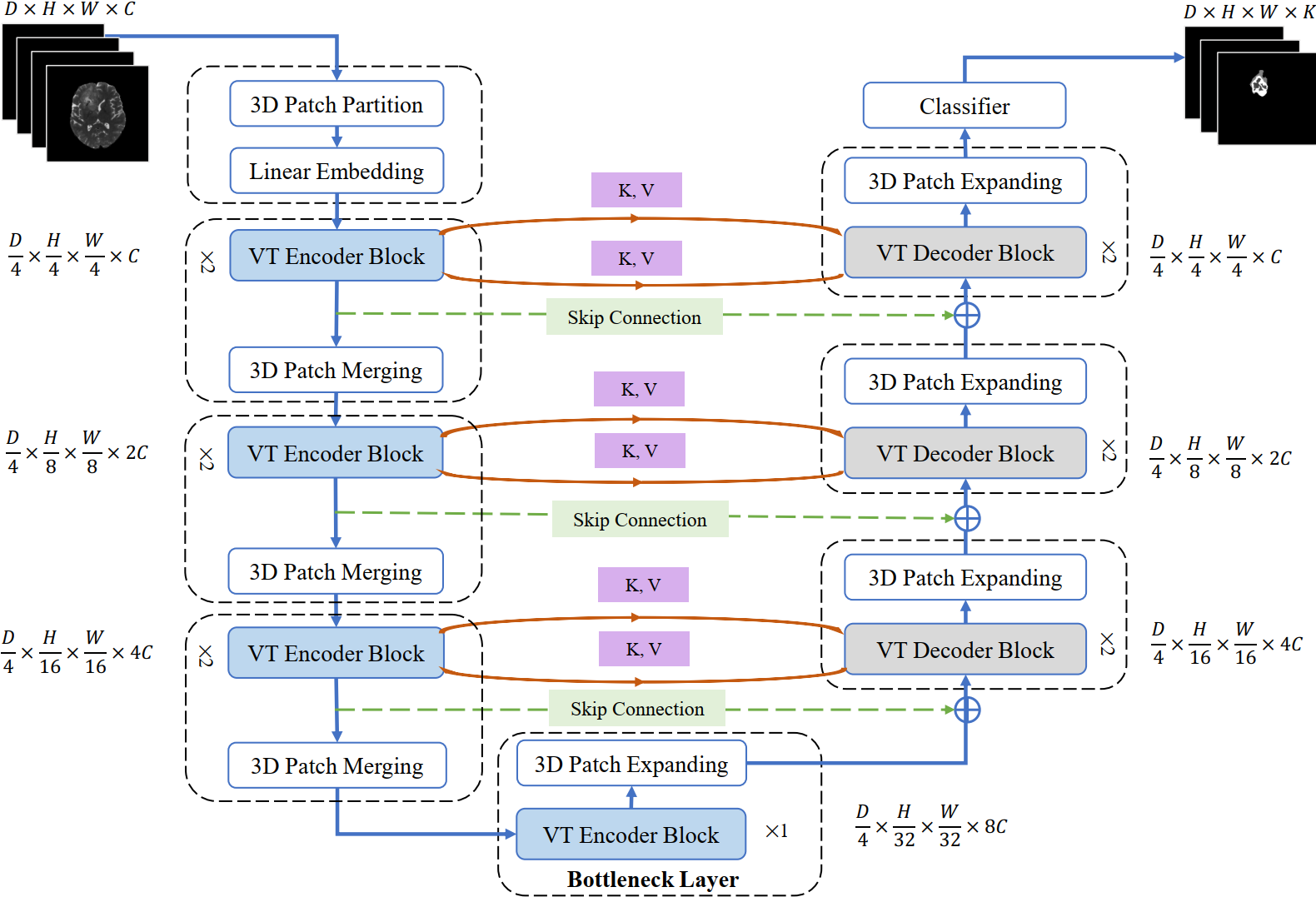

We propose a Transformer architecture for volumetric segmentation, a challenging task that requires balancing the encoding of local and global spatial cues while preserving information along all axes of the volume. The encoder of the proposed design uses self-attention to encode local and global cues simultaneously, while the decoder employs a parallel self- and cross-attention formulation to capture fine details for boundary refinement. Empirically, we show that the proposed design choices result in a computationally efficient model with competitive results on the Medical Segmentation Decathlon (MSD) brain tumor segmentation (BraTS) task. We further show that the representations learned by our model are robust against data corruptions. [Our code implementation is publicly available](https://github.com/himashi92/VT-UNet).
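
As a rough illustration of what a parallel self- and cross-attention step in a decoder could look like, the sketch below runs both branches from the same decoder queries and blends their outputs. It is a minimal toy under stated assumptions, not the released implementation: the function names, the `mix` blending weight, and the omission of learned projections, multiple heads, windowing, and positional encodings are all simplifications introduced here for illustration.

```python
import math

def softmax(row):
    # Numerically stable softmax over one row of attention scores.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    # Plain scaled dot-product attention over lists of token vectors.
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

def parallel_self_cross_attention(decoder_tokens, encoder_tokens, mix=0.5):
    # Assumption: both branches reuse the decoder tokens as queries.
    # Self branch attends over decoder tokens; cross branch attends over
    # encoder tokens. `mix` is a hypothetical blending weight.
    self_out = attention(decoder_tokens, decoder_tokens, decoder_tokens)
    cross_out = attention(decoder_tokens, encoder_tokens, encoder_tokens)
    return [[mix * s + (1.0 - mix) * c for s, c in zip(s_row, c_row)]
            for s_row, c_row in zip(self_out, cross_out)]

# Toy inputs: 2 decoder tokens and 3 encoder tokens, each of dimension 2.
decoder_tokens = [[1.0, 0.0], [0.0, 1.0]]
encoder_tokens = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]
fused = parallel_self_cross_attention(decoder_tokens, encoder_tokens)
```

Running the two branches side by side, rather than stacking cross-attention after self-attention, lets each decoder token draw on decoder context and encoder features in a single step; how the two outputs are actually combined in the proposed model is not specified in this abstract.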
