A Whac-A-Mole Dilemma: Shortcuts Come in Multiples Where Mitigating One Amplifies Others

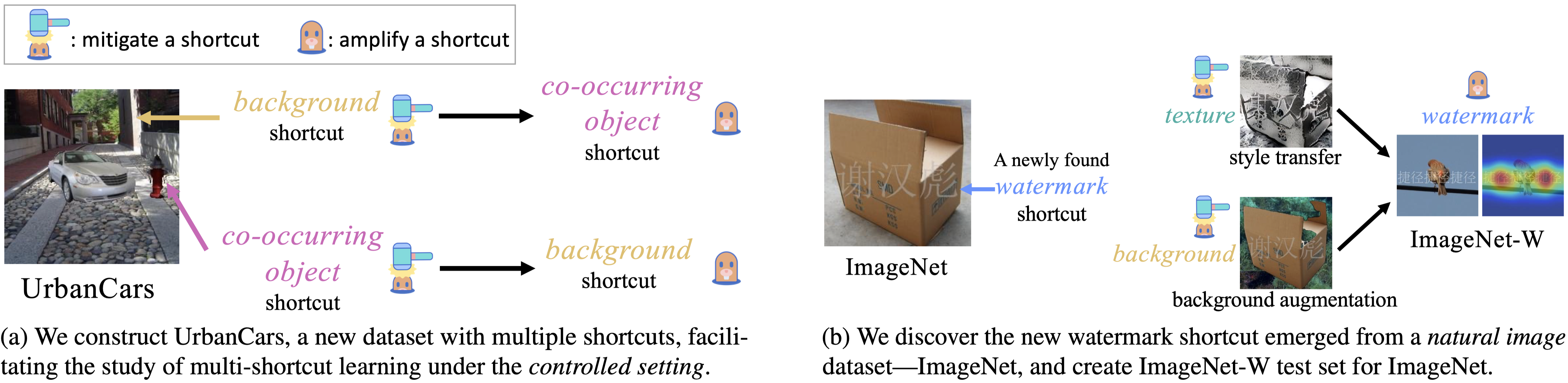

Machine learning models have been found to learn shortcuts -- unintended decision rules that are unable to generalize -- undermining models' reliability. Previous works address this problem under the tenuous assumption that only a single shortcut exists in the training data. Real-world images are rife with multiple visual cues from background to texture. Key to advancing the reliability of vision systems is understanding whether existing methods can overcome multiple shortcuts or struggle in a Whac-A-Mole game, i.e., where mitigating one shortcut amplifies reliance on others. To address this shortcoming, we propose two benchmarks: 1) UrbanCars, a dataset with precisely controlled spurious cues, and 2) ImageNet-W, an evaluation set based on ImageNet for watermark, a shortcut we discovered affects nearly every modern vision model. Along with texture and background, ImageNet-W allows us to study multiple shortcuts emerging from training on natural images. We find computer vision models, including large foundation models -- regardless of training set, architecture, and supervision -- struggle when multiple shortcuts are present. Even methods explicitly designed to combat shortcuts struggle in a Whac-A-Mole dilemma. To tackle this challenge, we propose Last Layer Ensemble, a simple-yet-effective method to mitigate multiple shortcuts without Whac-A-Mole behavior. Our results surface multi-shortcut mitigation as an overlooked challenge critical to advancing the reliability of vision systems. The datasets and code are released: https://github.com/facebookresearch/Whac-A-Mole.

PDF Abstract CVPR 2023 PDF CVPR 2023 AbstractDatasets

Introduced in the Paper:

ImageNet-W

ImageNet-W

UrbanCars

UrbanCars

Used in the Paper:

ImageNet

ImageNet

ImageNet-R

ImageNet-R

ImageNet-A

ImageNet-A

ImageNet-Sketch

ImageNet-Sketch

ObjectNet

ObjectNet

Stylized ImageNet

ImageNet-9

Stylized ImageNet

ImageNet-9