Abstractive Text Classification Using Sequence-to-convolution Neural Networks

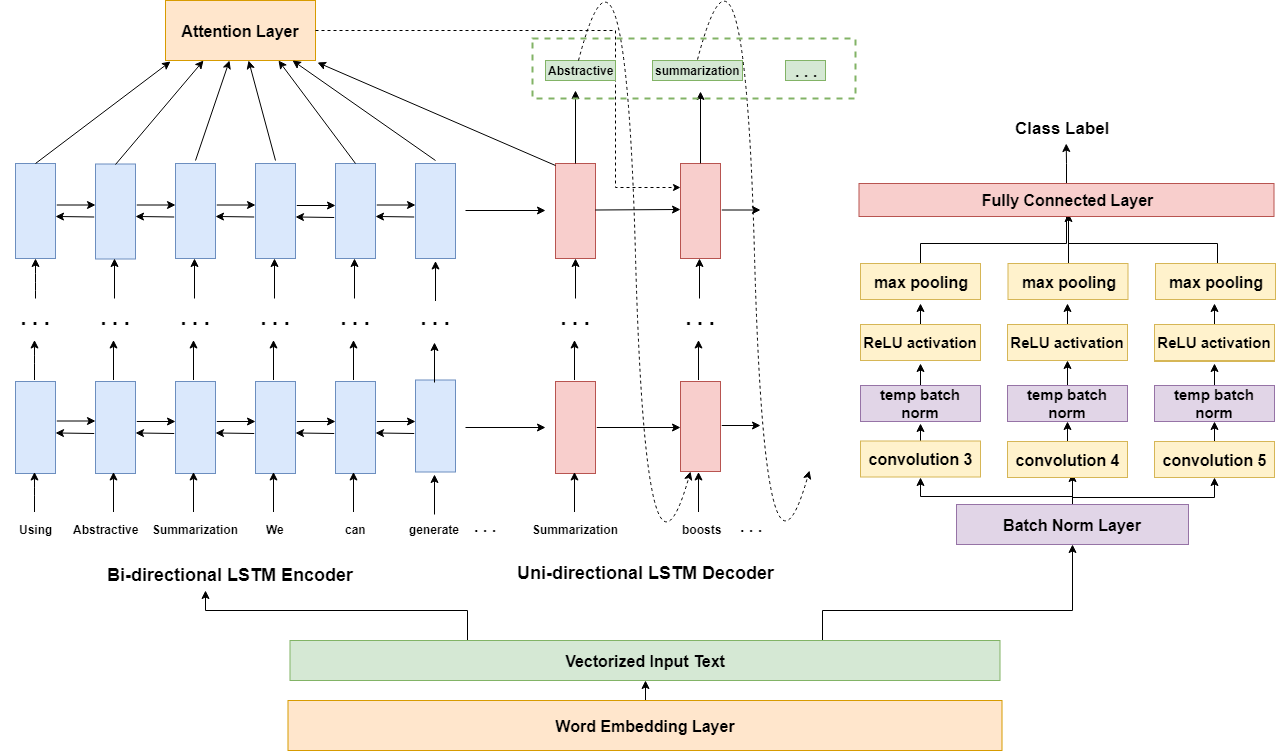

We propose a new deep neural network model and training scheme for text classification. Our model, Sequence-to-convolution Neural Networks (Seq2CNN), consists of two blocks: a Sequential Block that summarizes the input text and a Convolution Block that receives the summary and classifies it to a label. Seq2CNN is trained end-to-end to classify variable-length texts without preprocessing inputs to a fixed length. We also present the Gradual Weight Shift (GWS) method, which stabilizes training and is applied to the model's loss function. We compared our model with word-based TextCNN trained with different data preprocessing methods, and obtained a significant improvement in classification accuracy over word-based TextCNN without any ensemble or data augmentation.
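As a rough illustration of how a weight-shifting schedule could be applied to a two-part loss, the sketch below blends a summarization loss and a classification loss with a weight that moves linearly over training. The abstract does not state the GWS formula, so the linear schedule, the direction of the shift, and the names `gws_weight` and `combined_loss` are all assumptions made for illustration, not the authors' method.

```python
def gws_weight(step, total_steps, start=0.0, end=1.0):
    """Hypothetical schedule: linearly move the classification-loss
    weight from `start` to `end` over `total_steps` training steps."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    # Clamp progress to [0, 1] so the weight stays within [start, end].
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start + (end - start) * frac


def combined_loss(summary_loss, class_loss, step, total_steps):
    """Assumed form of the joint loss: a convex combination of the
    Sequential Block (summary) loss and the Convolution Block
    (classification) loss, weighted by the schedule above."""
    w = gws_weight(step, total_steps)
    return (1.0 - w) * summary_loss + w * class_loss
```

Under these assumptions, early steps are dominated by the summarization loss and later steps by the classification loss; a different schedule shape or direction would only require changing `gws_weight`.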

AG News

DBpedia
