Accelerated Bayesian inference using deep learning

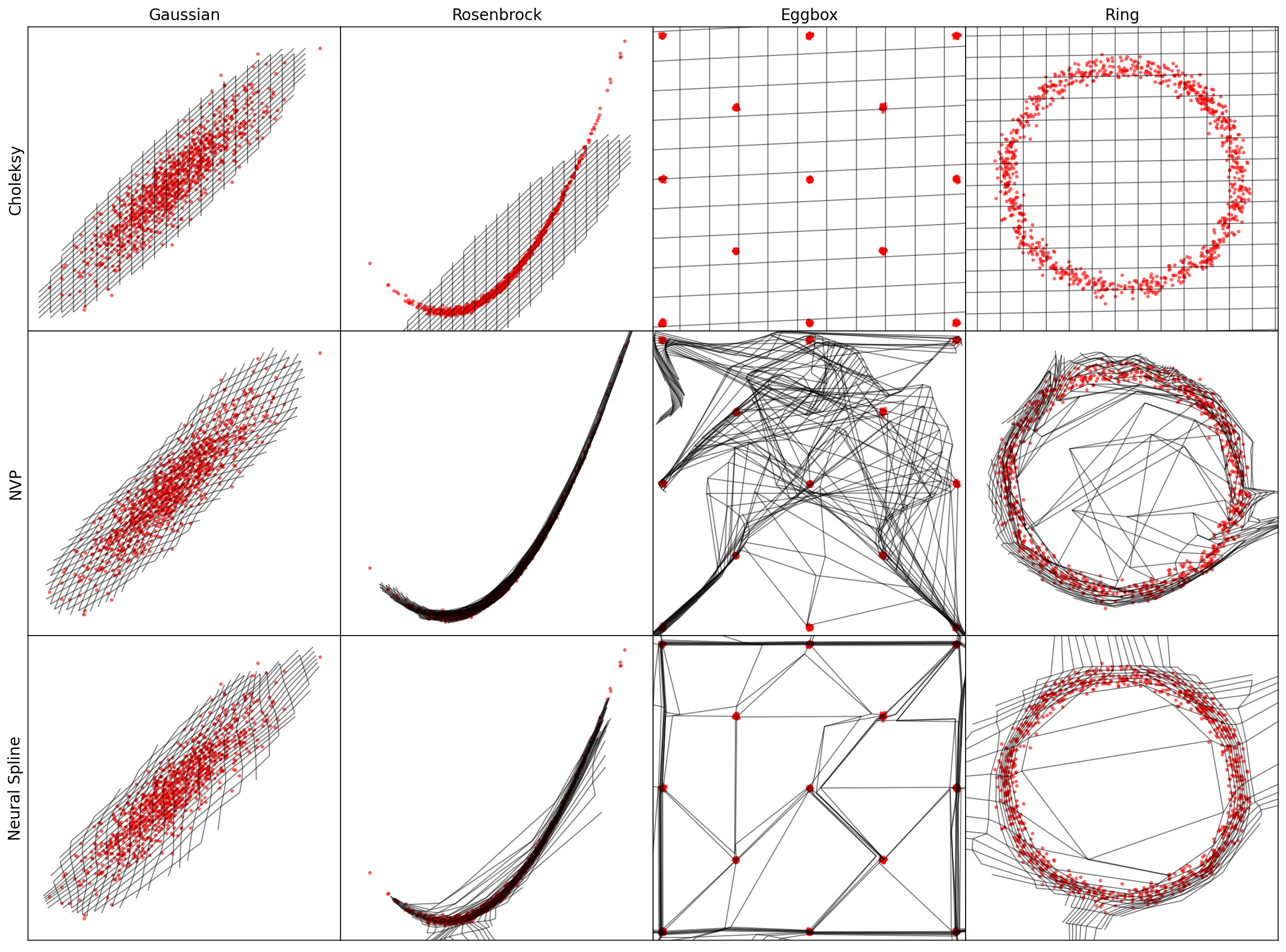

We present a novel Bayesian inference tool that uses a neural network to parameterise efficient Markov chain Monte Carlo (MCMC) proposals. The target distribution is first transformed into a diagonal, unit-variance Gaussian by a series of non-linear, invertible, and non-volume-preserving flows. Neural networks are extremely expressive and can transform complex targets into a simple latent representation. Efficient proposals can then be made in this latent space, and we demonstrate a high degree of mixing on several challenging distributions. Parameter space can naturally be split into a block-diagonal speed hierarchy, allowing fast exploration of subspaces in which the likelihood is inexpensive to evaluate. Using this method, we develop a nested MCMC sampler to perform Bayesian inference and model comparison, finding excellent performance on highly curved and multi-modal analytic likelihoods. We also test it on *Planck* 2015 data, showing accurate parameter constraints, and calculate the evidence for simple one-parameter extensions to $\Lambda$CDM in a $\sim 20$-dimensional parameter space. Our method has wide applicability to a range of problems in astronomy and cosmology.
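The idea of proposing in a Gaussianised latent space can be illustrated with a minimal sketch. It is not the sampler described above: the trained neural network is replaced by a single hand-written affine coupling layer (the hypothetical functions `s` and `t`), the target is a two-dimensional curved toy density chosen so that this layer Gaussianises it exactly, and the proposal is a plain random-walk Metropolis step. All function names and parameter values are assumptions made for illustration.

```python
import numpy as np

def s(z1):
    # Hypothetical log-scale function; stands in for a trained network.
    return 0.5 * np.tanh(z1)

def t(z1):
    # Hypothetical shift function; stands in for a trained network.
    return z1 ** 2

def forward(z):
    """Map latent z to parameter x; also return log|det J| of the map."""
    z1, z2 = z
    x = np.array([z1, z2 * np.exp(s(z1)) + t(z1)])
    return x, s(z1)  # Jacobian is triangular with diagonal (1, exp(s))

def inverse(x):
    """Map parameter x back to latent z (exact inverse of forward)."""
    x1, x2 = x
    return np.array([x1, (x2 - t(x1)) * np.exp(-s(x1))])

def log_target(x):
    """Curved toy target: x1 ~ N(0, 1), x2 | x1 ~ N(x1^2, sigma(x1)^2)."""
    x1, x2 = x
    log_sigma = 0.5 * np.tanh(x1)
    resid = (x2 - x1 ** 2) * np.exp(-log_sigma)
    return -0.5 * x1 ** 2 - 0.5 * resid ** 2 - log_sigma

def latent_metropolis(n_steps, step=1.0, seed=0):
    """Random-walk Metropolis in latent space, targeting the pulled-back density
    log pi_z(z) = log pi_x(f(z)) + log|det J_f(z)|."""
    rng = np.random.default_rng(seed)
    z = np.zeros(2)
    x, log_det = forward(z)
    logp = log_target(x) + log_det
    zs = np.empty((n_steps, 2))
    xs = np.empty((n_steps, 2))
    accepted = 0
    for i in range(n_steps):
        z_new = z + step * rng.standard_normal(2)  # symmetric proposal
        x_new, log_det_new = forward(z_new)
        logp_new = log_target(x_new) + log_det_new
        if np.log(rng.uniform()) < logp_new - logp:
            z, x, logp = z_new, x_new, logp_new
            accepted += 1
        zs[i] = z
        xs[i] = x
    return zs, xs, accepted / n_steps

if __name__ == "__main__":
    zs, xs, rate = latent_metropolis(20000)
    print("acceptance rate:", rate)
    print("latent mean:", zs.mean(axis=0), "latent std:", zs.std(axis=0))
```

Because the Jacobian term is included in the latent log-density, the chain in `z` leaves the pulled-back target invariant, and the mapped samples `xs` are draws from the original curved density. With an imperfect flow the latent density is only approximately Gaussian, but the same acceptance rule keeps the chain exact.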
