Bandit-Based Monte Carlo Optimization for Nearest Neighbors

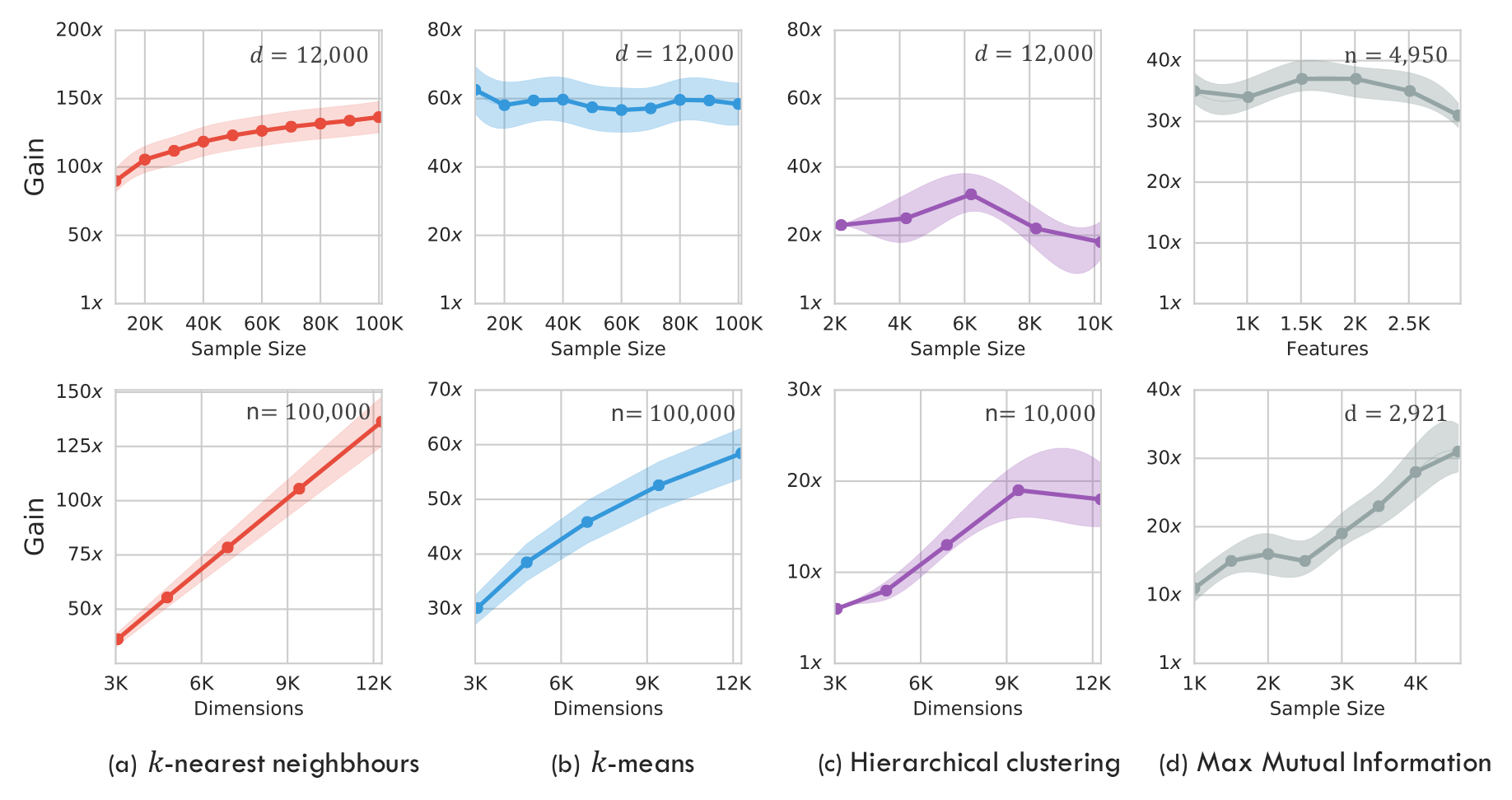

The celebrated Monte Carlo method estimates an expensive-to-compute quantity by random sampling. Bandit-based Monte Carlo optimization is a general technique for computing the minimum of many such expensive-to-compute quantities by adaptive random sampling. The technique converts an optimization problem into a statistical estimation problem which is then solved via multi-armed bandits. We apply this technique to solve the problem of high-dimensional $k$-nearest neighbors, developing an algorithm which we prove is able to identify exact nearest neighbors with high probability. We show that under regularity assumptions on a dataset of $n$ points in $d$-dimensional space, the complexity of our algorithm scales logarithmically with the dimension of the data as $O\left((n+d)\log^2 \left(\frac{nd}{\delta}\right)\right)$ for error probability $\delta$, rather than linearly as in exact computation requiring $O(nd)$. We corroborate our theoretical results with numerical simulations, showing that our algorithm outperforms both exact computation and state-of-the-art algorithms such as kGraph, NGT, and LSH on real datasets.
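To make the idea concrete, here is a minimal sketch of how the nearest-neighbor search can be posed as a bandit problem. It is not the paper's algorithm: it assumes squared Euclidean distance, treats each candidate point as an arm, estimates each distance by sampling random coordinates, and uses a simple successive-elimination rule with a heuristic, variance-based confidence radius. The function name `bandit_nearest_neighbor` and the parameters `batch` and `seed` are illustrative choices, not taken from the source.

```python
import numpy as np


def bandit_nearest_neighbor(query, points, delta=0.01, batch=32, seed=0):
    """Return the index of the likely nearest point to `query`.

    Illustrative sketch only: each point is an arm whose unknown mean is
    its average per-coordinate squared difference from `query`.
    """
    rng = np.random.default_rng(seed)
    n, d = points.shape
    alive = np.arange(n)     # arms still in contention
    sums = np.zeros(n)       # running sum of sampled squared differences
    sq_sums = np.zeros(n)    # running sum of their squares (for variance)
    pulls = 0                # coordinates sampled so far per surviving arm

    # Keep sampling only while it is cheaper than exact computation.
    while len(alive) > 1 and pulls + batch < d:
        # Sample coordinates uniformly with replacement.
        coords = rng.integers(0, d, size=batch)
        diffs = (points[np.ix_(alive, coords)] - query[coords]) ** 2
        sums[alive] += diffs.sum(axis=1)
        sq_sums[alive] += (diffs ** 2).sum(axis=1)
        pulls += batch

        means = sums[alive] / pulls
        var = np.maximum(sq_sums[alive] / pulls - means ** 2, 0.0)
        # Heuristic confidence radius (an assumption of this sketch):
        # empirical variance plus a union-bound style log factor.
        radius = np.sqrt(2.0 * var * np.log(2.0 * n * d / delta) / pulls)

        # Drop every arm whose lower bound exceeds the best upper bound.
        best_ucb = np.min(means + radius)
        alive = alive[means - radius <= best_ucb]

    # Resolve the remaining candidates exactly.
    exact = ((points[alive] - query) ** 2).sum(axis=1)
    return int(alive[np.argmin(exact)])
```

When one point is much closer to the query than the rest, most arms are eliminated after a small number of sampled coordinates, so the exact distance is computed only for a few survivors. Because the confidence radius here is heuristic, this sketch does not carry the high-probability guarantee stated in the abstract.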
