AdvFaces: Adversarial Face Synthesis

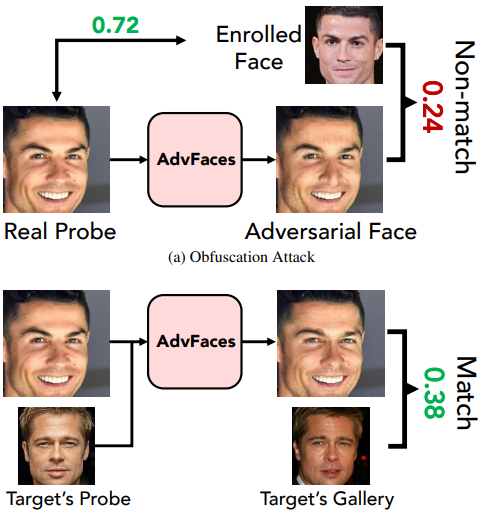

Face recognition systems have been shown to be vulnerable to adversarial examples created by adding small perturbations to probe images. Such adversarial images can lead state-of-the-art face recognition systems to falsely reject a genuine subject (obfuscation attack) or falsely match to an impostor (impersonation attack). Current approaches to crafting adversarial face images yield poor perceptual quality and take an unreasonable amount of time to generate each image. We propose AdvFaces, an automated adversarial face synthesis method that learns to generate minimal perturbations in the salient facial regions via Generative Adversarial Networks. Once trained, AdvFaces can automatically generate imperceptible perturbations that evade state-of-the-art face matchers, with attack success rates as high as 97.22% for obfuscation attacks and 24.30% for impersonation attacks.
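The two attack types named in the abstract can be made concrete with a minimal sketch. It assumes a face matcher that compares embedding vectors by cosine similarity against a decision threshold, which is a common setup but is not stated in the abstract. All function names and the threshold values are hypothetical illustrations, not the authors' evaluation code.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def obfuscation_succeeds(adv_emb, enrolled_emb, threshold: float) -> bool:
    """Assumed criterion: the adversarial probe no longer matches
    the subject's own enrolled image (a false reject)."""
    return cosine_similarity(adv_emb, enrolled_emb) < threshold


def impersonation_succeeds(adv_emb, target_emb, threshold: float) -> bool:
    """Assumed criterion: the adversarial probe matches a different,
    targeted identity (a false match)."""
    return cosine_similarity(adv_emb, target_emb) >= threshold


def attack_success_rate(outcomes: Sequence[bool]) -> float:
    """Percentage of attack attempts that succeeded."""
    if not outcomes:
        raise ValueError("no attack attempts given")
    return 100.0 * sum(outcomes) / len(outcomes)
```

Under these assumptions, a reported attack success rate is the share of adversarial probes for which the relevant criterion holds, computed separately for obfuscation and impersonation attempts.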


CASIA-WebFace
