[Natural Language Inference on QNLI](https://paperswithcode.com/sota/natural-language-inference-on-qnli?p=albert-a-lite-bert-for-self-supervised)

[Semantic Textual Similarity on MRPC](https://paperswithcode.com/sota/semantic-textual-similarity-on-mrpc?p=albert-a-lite-bert-for-self-supervised)

[Question Answering on Quora Question Pairs](https://paperswithcode.com/sota/question-answering-on-quora-question-pairs?p=albert-a-lite-bert-for-self-supervised)

[Question Answering on SQuAD2.0 dev](https://paperswithcode.com/sota/question-answering-on-squad20-dev?p=albert-a-lite-bert-for-self-supervised)

[Semantic Textual Similarity on STS Benchmark](https://paperswithcode.com/sota/semantic-textual-similarity-on-sts-benchmark?p=albert-a-lite-bert-for-self-supervised)

[Natural Language Inference on MultiNLI](https://paperswithcode.com/sota/natural-language-inference-on-multinli?p=albert-a-lite-bert-for-self-supervised)

[Sentiment Analysis on SST-2 Binary](https://paperswithcode.com/sota/sentiment-analysis-on-sst-2-binary?p=albert-a-lite-bert-for-self-supervised)

[Natural Language Inference on WNLI](https://paperswithcode.com/sota/natural-language-inference-on-wnli?p=albert-a-lite-bert-for-self-supervised)

[Multimodal Intent Recognition on PhotoChat](https://paperswithcode.com/sota/multimodal-intent-recognition-on-photochat?p=albert-a-lite-bert-for-self-supervised)

[Common Sense Reasoning on CommonsenseQA](https://paperswithcode.com/sota/common-sense-reasoning-on-commonsenseqa?p=albert-a-lite-bert-for-self-supervised)

[Linguistic Acceptability on CoLA](https://paperswithcode.com/sota/linguistic-acceptability-on-cola?p=albert-a-lite-bert-for-self-supervised)

[Natural Language Inference on RTE](https://paperswithcode.com/sota/natural-language-inference-on-rte?p=albert-a-lite-bert-for-self-supervised)

[Question Answering on SQuAD2.0](https://paperswithcode.com/sota/question-answering-on-squad20?p=albert-a-lite-bert-for-self-supervised)

[Multi-task Language Understanding on MMLU](https://paperswithcode.com/sota/multi-task-language-understanding-on-mmlu?p=albert-a-lite-bert-for-self-supervised)

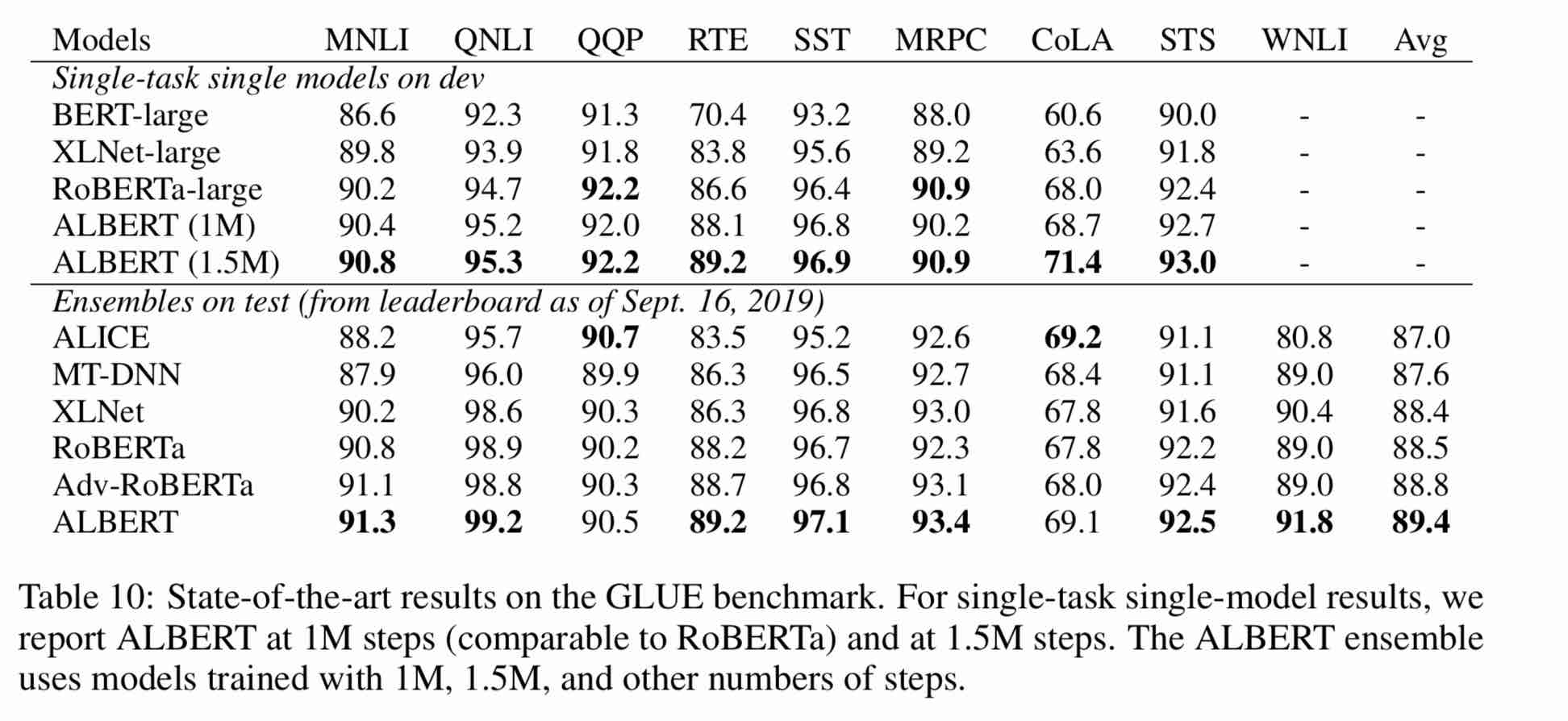

GLUE

SST

SQuAD

MultiNLI

QNLI

MRPC

MMLU

CoLA

RACE

CommonsenseQA

Quora

Quora Question Pairs

PhotoChat
