An Empirical Study on GANs with Margin Cosine Loss and Relativistic Discriminator

Generative Adversarial Networks (GANs) have emerged as useful generative models capable of implicitly learning complex, high-dimensional data distributions. However, GAN training is well known empirically to be highly unstable and sensitive: the losses of both the discriminator and the generator, viewed as functions of their parameters, tend to oscillate wildly during training. Different loss functions have been proposed to stabilize training and improve the quality of the generated images. In this paper, we perform an empirical study of the impact of several loss functions on the performance of a standard GAN model, the Deep Convolutional Generative Adversarial Network (DCGAN). We introduce an improvement that replaces the classical deterministic discriminator in DCGANs with a relativistic discriminator and implements a margin cosine loss function for both the generator and the discriminator. This results in a novel loss function, namely Relativistic Margin Cosine Loss (RMCosGAN). We carry out extensive experiments on four datasets: CIFAR-10, MNIST, STL-10, and CAT. We compare the performance of RMCosGAN with that of existing loss functions using two metrics: Fréchet Inception Distance and Inception Score. The experimental results show that RMCosGAN outperforms the existing loss functions and significantly improves the quality of the generated images.
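To make the idea of a relativistic discriminator concrete, the following is a minimal sketch of the commonly used relativistic average formulation, in which each sample is scored relative to the mean score of the opposite class. It is an illustration under stated assumptions, not the RMCosGAN loss itself: the abstract does not give the exact formula, the margin cosine component is omitted, and the function names (`softplus`, `relativistic_average_losses`) are hypothetical.

```python
import math


def softplus(z):
    # Numerically stable log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def mean(values):
    return sum(values) / len(values)


def relativistic_average_losses(real_scores, fake_scores):
    """Return (discriminator_loss, generator_loss) from raw critic scores.

    Assumption: this is the generic relativistic average GAN loss, used
    here only for illustration; it is not taken from the paper.
    """
    real_mean = mean(real_scores)
    fake_mean = mean(fake_scores)
    # Identities used: -log(sigmoid(z)) == softplus(-z)
    #                  -log(1 - sigmoid(z)) == softplus(z)
    # Discriminator: real samples should score above the average fake score,
    # and fake samples below the average real score.
    d_loss = (mean([softplus(-(r - fake_mean)) for r in real_scores])
              + mean([softplus(f - real_mean) for f in fake_scores]))
    # Generator: the same terms with the roles of real and fake swapped.
    g_loss = (mean([softplus(-(f - real_mean)) for f in fake_scores])
              + mean([softplus(r - fake_mean) for r in real_scores]))
    return d_loss, g_loss
```

When the critic cannot separate the two classes (identical scores), both losses equal 2 log 2; as real scores rise above fake scores, the discriminator loss shrinks and the generator loss grows.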

CIFAR-10

MNIST

MNIST

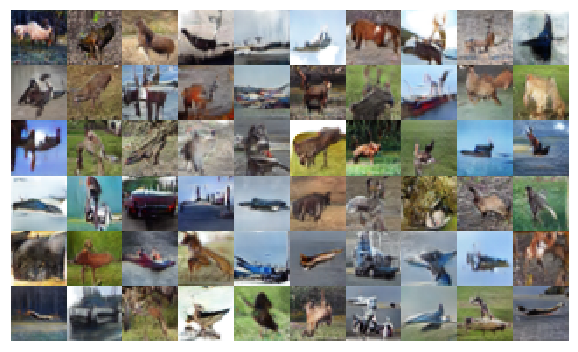

STL-10
