Appearance-based Gaze Estimation With Deep Learning: A Review and Benchmark

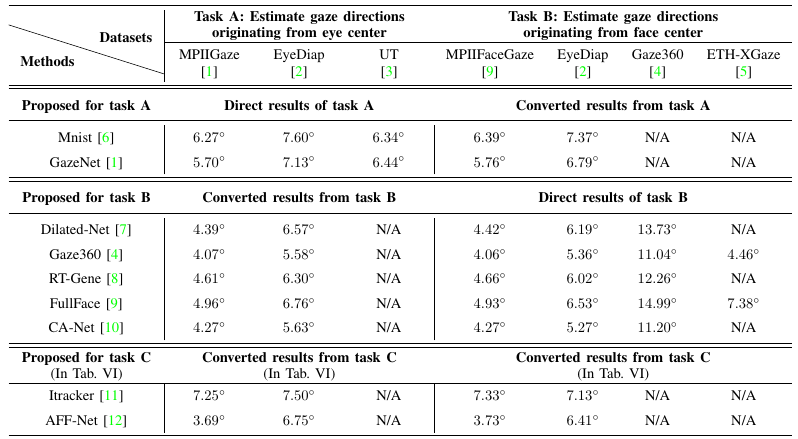

Gaze estimation reveals where a person is looking and is an important cue for understanding human intention. Recent developments in deep learning have revolutionized many computer vision tasks, and appearance-based gaze estimation is no exception. However, there is still no guideline for designing deep learning algorithms for gaze estimation tasks. In this paper, we present a comprehensive review of appearance-based gaze estimation methods that use deep learning. We summarize the processing pipeline and discuss these methods from four perspectives: deep feature extraction, deep neural network architecture design, personal calibration, and device and platform. Since data pre-processing and post-processing are crucial for gaze estimation, we also survey face/eye detection methods, data rectification methods, 2D/3D gaze conversion methods, and gaze origin conversion methods. To fairly compare the performance of various gaze estimation approaches, we characterize all publicly available gaze estimation datasets and collect the code of typical gaze estimation algorithms. We implement these algorithms and set up a benchmark that converts the results of different methods into the same evaluation metrics. This paper serves not only as a reference for developing deep learning-based gaze estimation methods but also as a guideline for future gaze estimation research. Implemented methods and data processing code are available at http://phi-ai.org/GazeHub.
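As an illustration of what converting results into a common evaluation metric can involve, the sketch below computes the angular error (in degrees) between a predicted and a ground-truth 3D gaze direction, a metric widely used for 3D gaze estimation. The function names and the pitch/yaw sign convention are assumptions made for this example and are not taken from the paper or from the GazeHub code; datasets differ in their coordinate conventions.

```python
import math

def pitch_yaw_to_vector(pitch, yaw):
    """Convert (pitch, yaw) in radians to a unit 3D gaze direction.

    Assumed convention (hypothetical, varies between datasets):
    zero pitch and yaw means looking straight along the -z axis.
    """
    x = -math.cos(pitch) * math.sin(yaw)
    y = -math.sin(pitch)
    z = -math.cos(pitch) * math.cos(yaw)
    return (x, y, z)

def angular_error_deg(g_pred, g_true):
    """Angle in degrees between two 3D gaze vectors (need not be unit length)."""
    dot = sum(a * b for a, b in zip(g_pred, g_true))
    norm = math.sqrt(sum(a * a for a in g_pred)) * math.sqrt(sum(b * b for b in g_true))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_sim = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_sim))
```

With both predictions and labels expressed as 3D vectors in the same coordinate system, methods that output pitch/yaw angles and methods that output vectors can be scored with the same function.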


MPIIGaze

Gaze360

GazeCapture

EYEDIAP

EVE