Are you tough enough? Framework for Robustness Validation of Machine Comprehension Systems

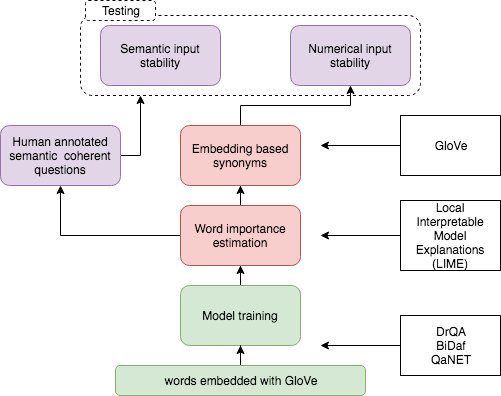

Deep Learning NLP domain lacks procedures for the analysis of model robustness. In this paper we propose a framework which validates robustness of any Question Answering model through model explainers. We propose that a robust model should transgress the initial notion of semantic similarity induced by word embeddings to learn a more human-like understanding of meaning. We test this property by manipulating questions in two ways: swapping important question word for 1) its semantically correct synonym and 2) for word vector that is close in embedding space. We estimate importance of words in asked questions with Locally Interpretable Model Agnostic Explanations method (LIME). With these two steps we compare state-of-the-art Q&A models. We show that although accuracy of state-of-the-art models is high, they are very fragile to changes in the input. Moreover, we propose 2 adversarial training scenarios which raise model sensitivity to true synonyms by up to 7% accuracy measure. Our findings help to understand which models are more stable and how they can be improved. In addition, we have created and published a new dataset that may be used for validation of robustness of a Q&A model.

PDF Abstract