Attract, Perturb, and Explore: Learning a Feature Alignment Network for Semi-supervised Domain Adaptation

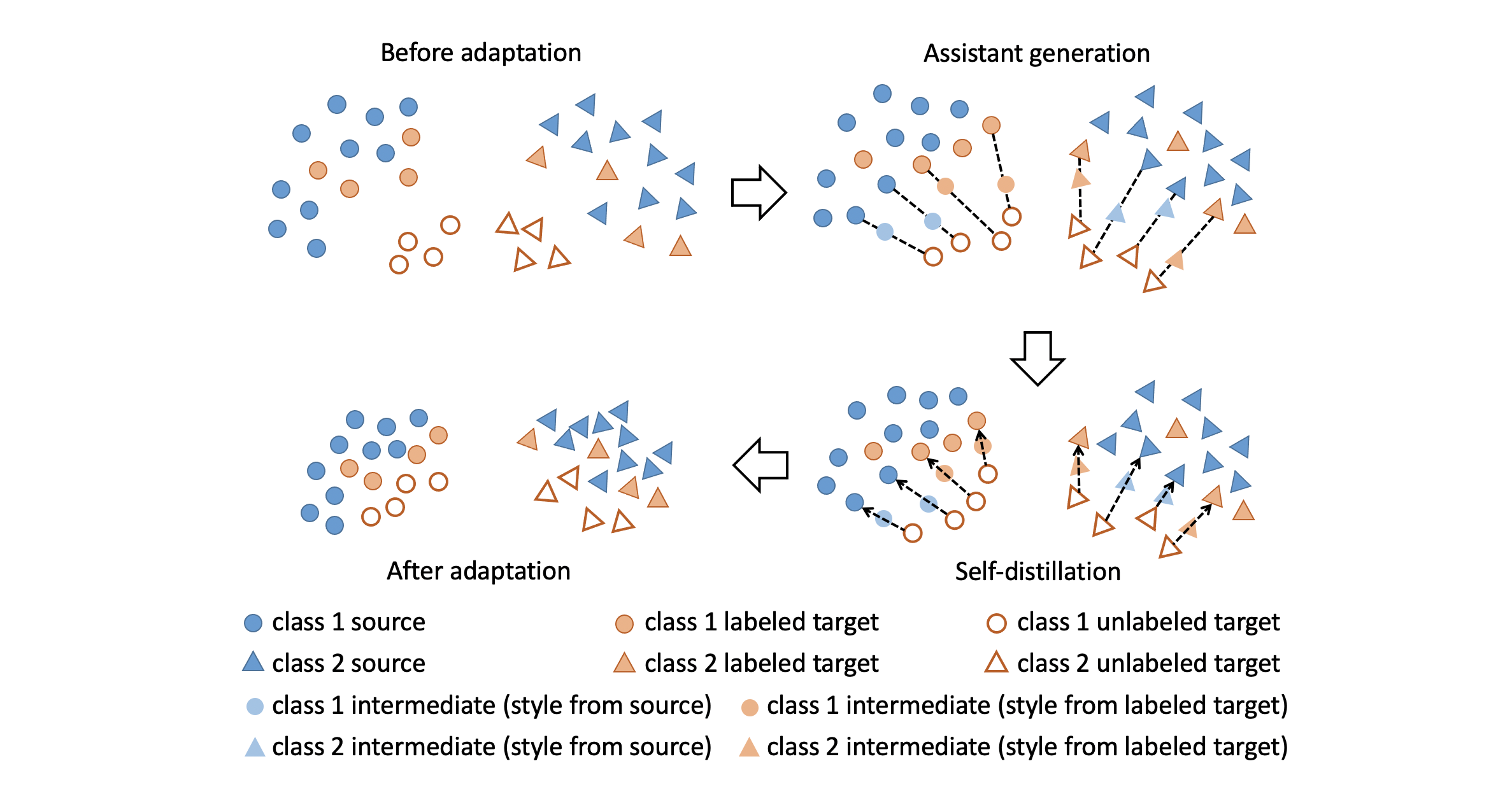

Although unsupervised domain adaptation methods have been widely adopted across several computer vision tasks, it is more desirable to exploit the few labeled data available from new domains encountered in a real application. This setting, the semi-supervised domain adaptation (SSDA) problem, shares challenges with both the domain adaptation problem and the semi-supervised learning problem. However, a recent study shows that conventional domain adaptation and semi-supervised learning methods often result in less effective transfer, or even negative transfer, in the SSDA problem. To interpret this observation and address the SSDA problem, we raise the issue of intra-domain discrepancy within the target domain, which has not been discussed before. We then demonstrate that addressing the intra-domain discrepancy leads to the ultimate goal of the SSDA problem. We propose an SSDA framework that aligns features by alleviating the intra-domain discrepancy. Our framework consists mainly of three schemes: attraction, perturbation, and exploration. First, the attraction scheme globally minimizes the intra-domain discrepancy within the target domain. Second, we demonstrate that conventional adversarial perturbation methods are incompatible with SSDA, and we present a domain adaptive adversarial perturbation scheme, which perturbs the given target samples in a way that reduces the intra-domain discrepancy. Finally, the exploration scheme aligns features locally in a class-wise manner, which complements the attraction scheme, and does so by selectively aligning unlabeled target features, which complements the perturbation scheme. We conduct extensive experiments on the domain adaptation benchmark datasets DomainNet, Office-Home, and Office. Our method achieves state-of-the-art performance on all datasets.
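The abstract does not say how the intra-domain discrepancy is measured. As a rough illustration only, the sketch below assumes a very simple choice: the squared distance between the mean features of two groups of target samples (a linear-kernel form of maximum mean discrepancy). The function names and the toy 2-D features are hypothetical and are not taken from the paper.

```python
# Illustrative sketch only: the measure below is an assumption,
# not the discrepancy definition used by the authors.

def feature_mean(features):
    """Column-wise mean of a non-empty list of equal-length feature vectors."""
    n = len(features)
    dim = len(features[0])
    return [sum(f[d] for f in features) / n for d in range(dim)]


def intra_domain_discrepancy(group_a, group_b):
    """Squared Euclidean distance between the mean features of two groups."""
    mu_a = feature_mean(group_a)
    mu_b = feature_mean(group_b)
    return sum((a - b) ** 2 for a, b in zip(mu_a, mu_b))


# Hypothetical 2-D features for two groups of target-domain samples.
labeled_target = [[1.0, 0.0], [3.0, 0.0]]    # mean is (2, 0)
unlabeled_target = [[0.0, 2.0], [0.0, 4.0]]  # mean is (0, 3)

gap = intra_domain_discrepancy(labeled_target, unlabeled_target)
print(gap)  # 2^2 + 3^2 = 13.0
```

Under this assumption, a scheme that reduces the discrepancy would pull the two group means together, driving the value toward zero.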

ECCV 2020

Office-Home

DomainNet
