Speech Denoising by Accumulating Per-Frequency Modeling Fluctuations

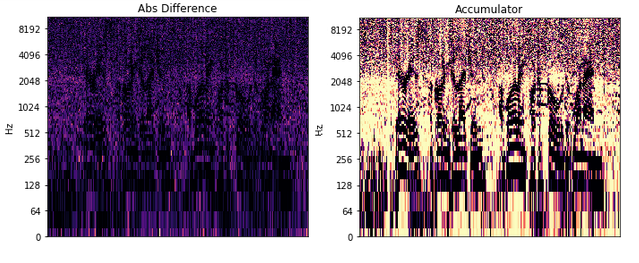

We present a method for audio denoising that combines processing in the time domain and in the time-frequency domain. Given a noisy audio clip, the method trains a deep neural network to fit this signal. Because the fit is only partly successful and captures the underlying clean signal better than the noise, the output of the network helps to disentangle the clean audio from the rest of the signal. This is done by accumulating a fitting score per time-frequency bin and applying time-frequency domain filtering based on the obtained scores. The method is completely unsupervised and trains only on the specific audio clip being denoised. Our experiments demonstrate favorable performance compared with methods from the literature. Our code and samples are available at github.com/mosheman5/DNP and as supplementary material.

Index Terms: Audio denoising; Unsupervised learning
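The accumulation step can be illustrated with a minimal sketch. It assumes that intermediate network outputs are saved as waveforms during fitting (the `snapshots` list is a hypothetical name) and that the per-bin score is the summed absolute change in spectrogram magnitude between successive snapshots. The framed FFT, the min-max normalization, and the `1 - norm` mask are illustrative assumptions, not necessarily the filter used by the authors; the released code is the reference. The toy input stands in for network outputs: one tone is stable across snapshots and a second tone changes amplitude, mimicking a component the network fits inconsistently.

```python
import numpy as np

def stft_mag(x, n_fft=256, hop=64):
    """Magnitude spectrogram from a Hann-windowed framed FFT, shape (frames, bins)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))

def accumulate_fluctuations(snapshots, n_fft=256, hop=64):
    """Sum the absolute magnitude change per time-frequency bin over successive snapshots."""
    mags = [stft_mag(s, n_fft, hop) for s in snapshots]
    acc = np.zeros_like(mags[0])
    for prev, cur in zip(mags[:-1], mags[1:]):
        acc += np.abs(cur - prev)
    return acc

def stability_mask(acc, eps=1e-12):
    """Map accumulated fluctuation to [0, 1]; bins that barely change get weights near 1."""
    norm = (acc - acc.min()) / (acc.max() - acc.min() + eps)
    return 1.0 - norm

# Toy stand-in for network outputs (assumption: 8 kHz sampling rate, 2048 samples).
sr, n = 8000, 2048
t = np.arange(n) / sr
stable = np.sin(2 * np.pi * 1000 * t)            # same in every snapshot
unstable_amps = [0.5, 0.1, 0.4, 0.0]             # varies from snapshot to snapshot
snapshots = [stable + a * np.sin(2 * np.pi * 3000 * t) for a in unstable_amps]

acc = accumulate_fluctuations(snapshots)
mask = stability_mask(acc)

# With n_fft=256 at 8 kHz, bins are 31.25 Hz wide: 1 kHz is bin 32, 3 kHz is bin 96.
stable_weight = mask[:, 32].mean()
unstable_weight = mask[:, 96].mean()
```

In this sketch the stable tone keeps a mask weight close to 1 while the fluctuating tone is suppressed. Applying such a mask to the STFT of the noisy clip and inverting it would correspond to the time-frequency filtering described above.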
