Auto-Exposure Fusion for Single-Image Shadow Removal

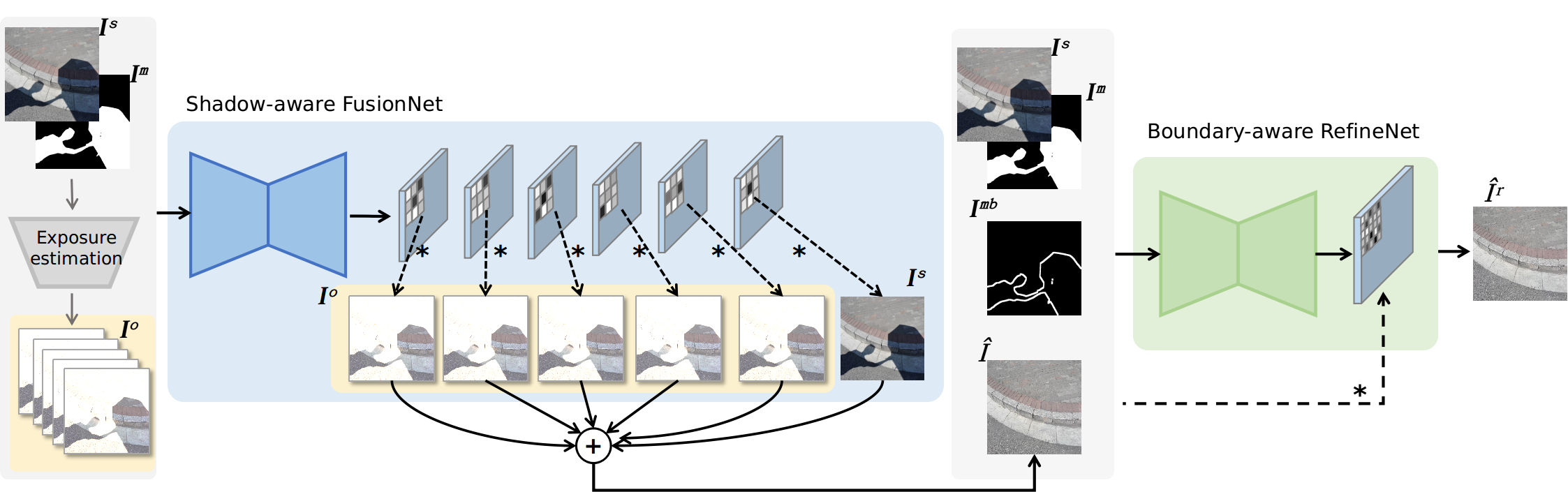

Shadow removal remains a challenging task because shadows are inherently background-dependent and spatially variant, which leads to unknown and diverse shadow patterns. Even powerful state-of-the-art deep neural networks can hardly recover a traceless shadow-removed background. This paper proposes a new solution that formulates shadow removal as an exposure fusion problem. Intuitively, we first estimate multiple over-exposure images w.r.t. the input image so that the shadow regions in these images have the same color as the shadow-free areas in the input image. We then fuse the original input with the over-exposure images to generate the final shadow-free counterpart. Nevertheless, the spatially variant property of shadows requires the fusion to be sufficiently 'smart', that is, it should automatically select proper over-exposure pixels from different images to make the final output natural. To address this challenge, we propose the shadow-aware FusionNet, which takes the shadow image as input and generates fusion weight maps across all the over-exposure images. Moreover, we propose the boundary-aware RefineNet to further eliminate the remaining shadow trace. We conduct extensive experiments on the ISTD, ISTD+, and SRD datasets to validate our method's effectiveness and show better performance in shadow regions and comparable performance in non-shadow regions compared with state-of-the-art methods. We release the model and code at https://github.com/tsingqguo/exposure-fusion-shadow-removal.
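The fusion idea described in the abstract can be illustrated with a minimal NumPy sketch. It is not the released implementation: the gain-and-offset exposure model, the helper names `over_expose` and `fuse`, and the hand-made weight maps derived from a toy shadow mask are all assumptions for illustration. In the paper the weight maps are predicted by FusionNet rather than set by hand.

```python
import numpy as np

def over_expose(image, gains, offsets):
    """Return K brightened copies of `image` (H, W, 3), values clipped to [0, 1].

    Assumption: each over-exposure image is a simple gain/offset transform.
    """
    return np.stack([np.clip(g * image + b, 0.0, 1.0)
                     for g, b in zip(gains, offsets)])

def fuse(image, exposures, weights):
    """Blend the input and its K over-exposed copies pixel by pixel.

    weights has shape (K + 1, H, W), is non-negative, and is normalized
    here so that the weights at every pixel sum to 1.
    """
    stack = np.concatenate([image[None], exposures], axis=0)  # (K+1, H, W, 3)
    w = weights / weights.sum(axis=0, keepdims=True)
    return (w[..., None] * stack).sum(axis=0)

# Toy 2x2 image: uniform background 0.8, one shadow pixel at half brightness.
image = np.full((2, 2, 3), 0.8)
image[0, 0] = 0.4
mask = np.zeros((2, 2))
mask[0, 0] = 1.0  # hypothetical shadow mask, 1 inside the shadow

exposures = over_expose(image, gains=[2.0], offsets=[0.0])
# Hand-made weights: keep the input outside the shadow, use the
# over-exposed copy inside it. A learned network would predict these.
weights = np.stack([1.0 - mask, mask])
result = fuse(image, exposures, weights)
```

In this toy case the shadow pixel takes its value from the over-exposed copy while the remaining pixels come from the input, which is why spatially varying weights matter: applying the over-exposed image everywhere would saturate the shadow-free region.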

CVPR 2021

ISTD
