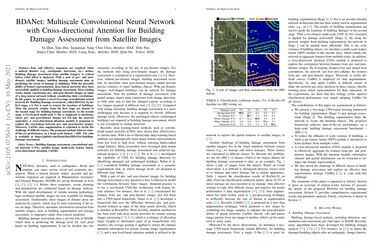

BDANet: Multiscale Convolutional Neural Network with Cross-directional Attention for Building Damage Assessment from Satellite Images

Fast and effective responses are required when a natural disaster (e.g., earthquake, hurricane, etc.) strikes. Building damage assessment from satellite imagery is critical before relief effort is deployed. With a pair of pre- and post-disaster satellite images, building damage assessment aims at predicting the extent of damage to buildings. With the powerful ability of feature representation, deep neural networks have been successfully applied to building damage assessment. Most existing works simply concatenate pre- and post-disaster images as input of a deep neural network without considering their correlations. In this paper, we propose a novel two-stage convolutional neural network for Building Damage Assessment, called BDANet. In the first stage, a U-Net is used to extract the locations of buildings. Then the network weights from the first stage are shared in the second stage for building damage assessment. In the second stage, a two-branch multi-scale U-Net is employed as backbone, where pre- and post-disaster images are fed into the network separately. A cross-directional attention module is proposed to explore the correlations between pre- and post-disaster images. Moreover, CutMix data augmentation is exploited to tackle the challenge of difficult classes. The proposed method achieves state-of-the-art performance on a large-scale dataset -- xBD. The code is available at https://github.com/ShaneShen/BDANet-Building-Damage-Assessment.

PDF Abstract| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |

|---|---|---|---|---|---|---|

| 2D Semantic Segmentation | xBD | BDANet | Weighted Average F1-score | 0.806 | # 2 |

xBD

xBD