Body Meshes as Points

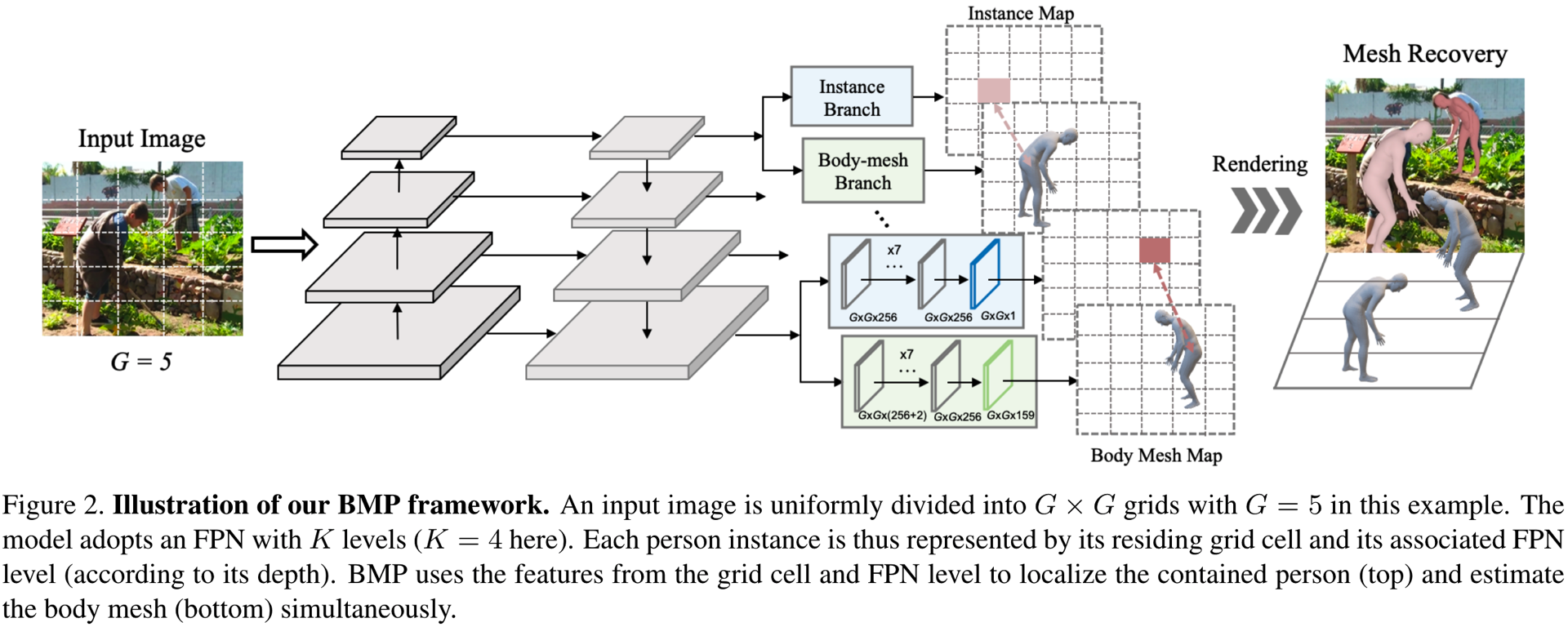

We consider the challenging task of multi-person 3D body mesh estimation. Most existing methods are two-stage: one stage for person localization and another for individual body mesh estimation. This leads to redundant pipelines with high computation cost and degraded performance in complex scenes (e.g., occluded person instances). In this work, we present a single-stage model, Body Meshes as Points (BMP), to simplify the pipeline and improve both efficiency and performance. In particular, BMP represents multiple person instances as points in the spatial-depth space, where each point is associated with one body mesh. Building on this representation, BMP directly predicts body meshes for multiple persons in a single stage by concurrently localizing person instance points and estimating the corresponding body meshes. To better reason about the depth ordering of all persons within the same scene, BMP uses a simple yet effective inter-instance ordinal depth loss to obtain depth-coherent body mesh estimates. BMP also introduces a novel keypoint-aware augmentation to enhance model robustness to occluded person instances. Comprehensive experiments on the Panoptic, MuPoTS-3D and 3DPW benchmarks clearly demonstrate the state-of-the-art efficiency of BMP for multi-person body mesh estimation, together with outstanding accuracy. Code can be found at: https://github.com/jfzhang95/BMP.
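The abstract names an inter-instance ordinal depth loss without giving its form. As a rough illustration only, the sketch below shows a generic pairwise ranking loss over per-person depths: it is small when the predicted depth order of two people matches the ground-truth order and large when it is reversed. The function name `ordinal_depth_loss`, its arguments, and the softplus-style penalty are assumptions made for this example, not the formulation used in BMP.

```python
import math

def ordinal_depth_loss(pred_depths, gt_depths):
    """Generic pairwise ranking loss over person depths (illustrative sketch,
    not the BMP formulation). Smaller depth means closer to the camera."""
    total, pairs = 0.0, 0
    n = len(pred_depths)
    for i in range(n):
        for j in range(i + 1, n):
            if gt_depths[i] == gt_depths[j]:
                continue  # no ordering constraint for equal depths
            # sign is +1 if person i is truly closer than person j, else -1
            sign = 1.0 if gt_depths[i] < gt_depths[j] else -1.0
            # softplus penalty: grows when the predicted order contradicts
            # the ground-truth order
            total += math.log1p(math.exp(sign * (pred_depths[i] - pred_depths[j])))
            pairs += 1
    return total / pairs if pairs else 0.0
```

For example, with two people at ground-truth depths `[1.0, 3.0]`, predictions in the same order give a lower loss than predictions in the reversed order `[3.0, 1.0]`.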

CVPR 2021

MS COCO

Human3.6M

MPII

3DPW

Panoptic

PoseTrack

MuPoTS-3D
