Channel-wise Subband Input for Better Voice and Accompaniment Separation on High Resolution Music

This paper presents a new input format, channel-wise subband input (CWS), for convolutional neural network (CNN) based music source separation (MSS) models in the frequency domain. We aim to address two major issues in CNN-based high-resolution MSS models: high computational cost and weight sharing between distinctly different bands. Specifically, we decompose the input mixture spectra into several bands and concatenate them channel-wise as the model input. The proposed approach enables effective weight sharing within each subband and introduces more flexibility between channels. For comparison purposes, we perform voice and accompaniment separation (VAS) on models with different scales, architectures, and CWS settings. We evaluate our method on the musdb18hq test set, focusing on the SDR, SIR, and SAR metrics. Across all our experiments, CWS enables models to obtain a 6.9% performance gain on the average metrics. Even with fewer parameters, less training data, and shorter training time, our MDenseNet with 8-band CWS input still surpasses the original MMDenseNet by a large margin. Moreover, CWS substantially reduces computational cost and training time.
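The idea of decomposing the spectra into bands and concatenating them channel-wise can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes the simplest possible decomposition, namely slicing the frequency axis of a spectrogram into equal-width bands, and the function names `channelwise_subband` and `merge_subbands`, the `(channels, freq_bins, frames)` layout, and the array sizes are all hypothetical choices made for illustration.

```python
import numpy as np


def channelwise_subband(spec: np.ndarray, num_bands: int) -> np.ndarray:
    """Split a spectrogram into equal-width frequency bands and stack them as channels.

    Assumed layout (illustrative): spec has shape (channels, freq_bins, frames).
    Returns an array of shape (channels * num_bands, freq_bins // num_bands, frames),
    where output channel c * num_bands + b holds band b of input channel c.
    """
    channels, freq_bins, frames = spec.shape
    if freq_bins % num_bands != 0:
        raise ValueError("freq_bins must be divisible by num_bands")
    band_width = freq_bins // num_bands
    # Split the frequency axis into (num_bands, band_width) ...
    bands = spec.reshape(channels, num_bands, band_width, frames)
    # ... then fold the band index into the channel axis.
    return bands.reshape(channels * num_bands, band_width, frames)


def merge_subbands(cws: np.ndarray, num_bands: int) -> np.ndarray:
    """Inverse of channelwise_subband: put the bands back on the frequency axis."""
    total_channels, band_width, frames = cws.shape
    if total_channels % num_bands != 0:
        raise ValueError("channel count must be divisible by num_bands")
    channels = total_channels // num_bands
    return cws.reshape(channels, num_bands * band_width, frames)


# Example: a stereo spectrogram with 1024 frequency bins and 100 frames.
spec = np.random.rand(2, 1024, 100)
cws = channelwise_subband(spec, num_bands=8)
print(cws.shape)  # (16, 128, 100)
```

Under these assumptions, a convolution kernel applied to the CWS input spans a frequency axis one eighth as tall, with each band in its own input channel, which is one way to read the reduced computational cost and band-specific weights described in the abstract.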

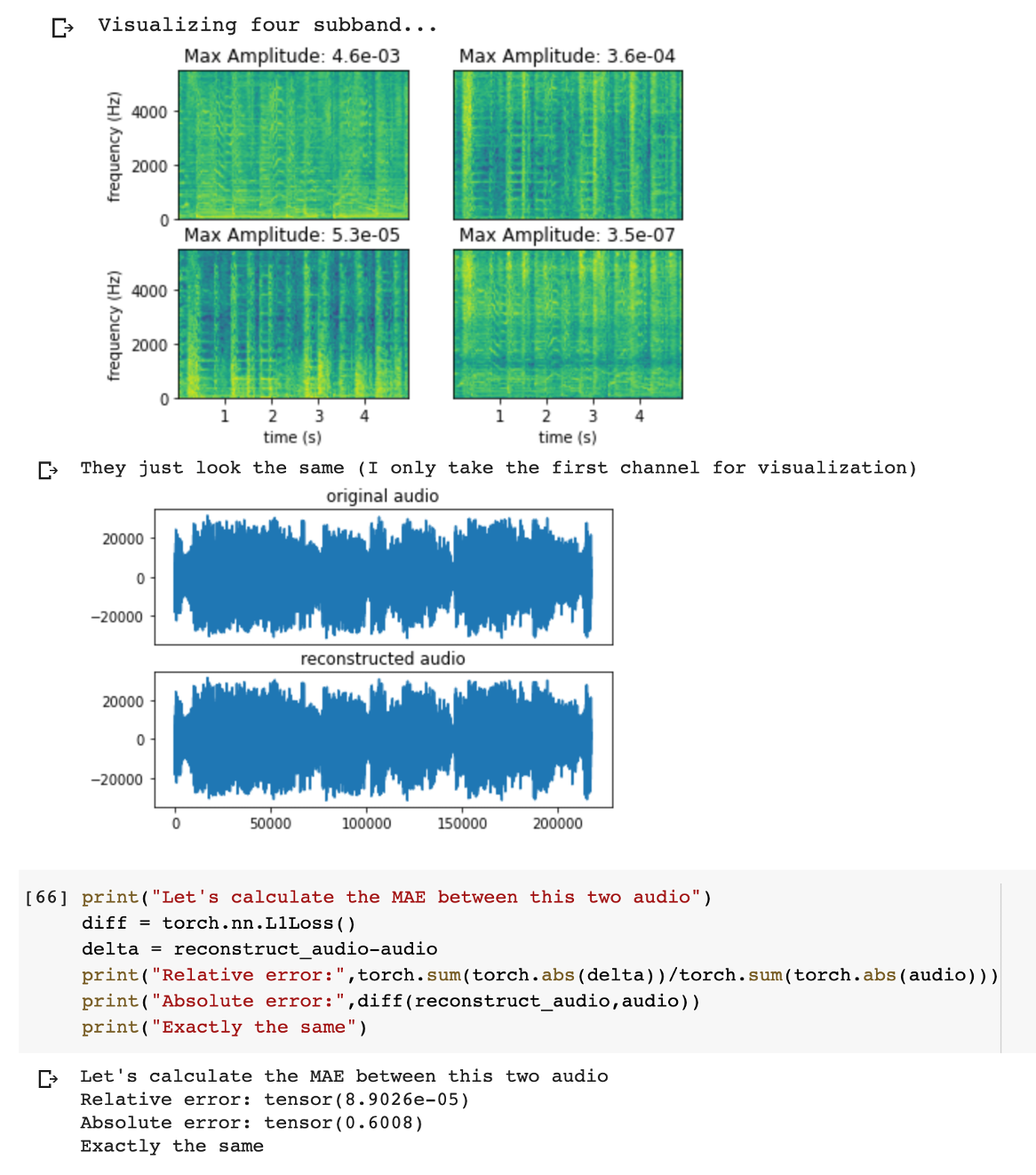
