Two-Stage Peer-Regularized Feature Recombination for Arbitrary Image Style Transfer

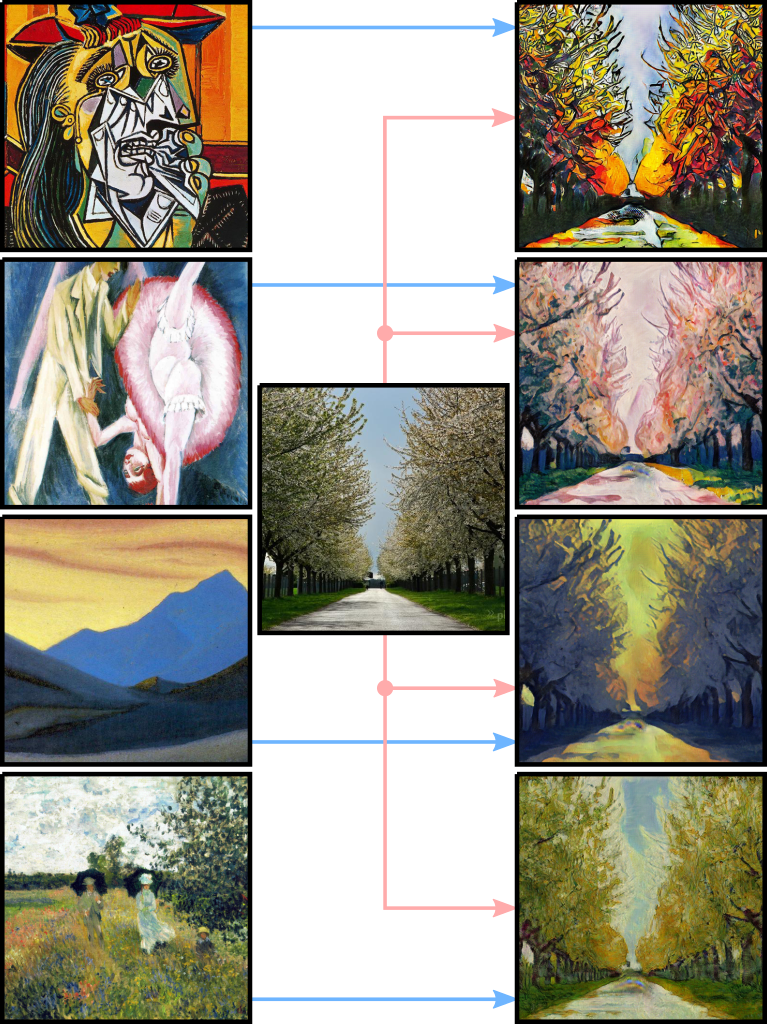

This paper introduces a neural style transfer model that generates a stylized image conditioned on a set of examples describing the desired style. The proposed solution produces high-quality images even in the zero-shot setting and allows more freedom in changing the content geometry. This is made possible by a novel Two-Stage Peer-Regularization Layer that recombines style and content in latent space by means of a custom graph convolutional layer. Contrary to the vast majority of existing solutions, our model does not depend on any pre-trained network for computing perceptual losses and can be trained fully end-to-end thanks to a new set of cyclic losses that operate directly in latent space rather than on the RGB images. An extensive ablation study confirms the usefulness of the proposed losses and of the Two-Stage Peer-Regularization Layer, with qualitative results that are competitive with the current state of the art while using a single model for all presented styles. This opens the door to more abstract and artistic neural image generation scenarios, along with simpler deployment of the model.
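To make the idea of recombining style and content in latent space through a graph more concrete, the following is a minimal sketch of a single peer-aggregation step. It is an illustration under stated assumptions, not the paper's layer: the function name `peer_recombine`, the k-nearest-neighbour graph, the squared Euclidean distance, and the softmax weighting are all hypothetical choices made here, and the two-stage design and the learned graph convolution are not reproduced.

```python
import math


def peer_recombine(content_feats, style_feats, k=2):
    """Replace each content vector by a softmax-weighted average of its
    k nearest style vectors (hypothetical, simplified peer aggregation)."""
    if not 1 <= k <= len(style_feats):
        raise ValueError("k must be between 1 and the number of style vectors")
    recombined = []
    for c in content_feats:
        # Squared Euclidean distance from this content vector to every style vector.
        dists = [sum((ci - si) ** 2 for ci, si in zip(c, s)) for s in style_feats]
        # Indices of the k nearest style "peers" (the edges of a k-NN graph).
        peers = sorted(range(len(style_feats)), key=dists.__getitem__)[:k]
        # Softmax over negative distances; subtracting the smallest distance
        # keeps the exponentials numerically stable.
        d_min = dists[peers[0]]
        weights = [math.exp(-(dists[j] - d_min)) for j in peers]
        total = sum(weights)
        recombined.append([
            sum(w * style_feats[j][d] for w, j in zip(weights, peers)) / total
            for d in range(len(c))
        ])
    return recombined


# Toy latent vectors: one content feature, three style features.
content = [[0.0, 0.0]]
style = [[1.0, 0.0], [0.0, 1.0], [10.0, 10.0]]
print(peer_recombine(content, style, k=2))
```

In this toy example the distant style vector `[10.0, 10.0]` is not among the two nearest peers, so it does not contribute to the output. In a trained model the vectors would be encoder features and the aggregation weights would be learned rather than fixed by distance.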

CVPR 2020