Consistency-preserving Visual Question Answering in Medical Imaging

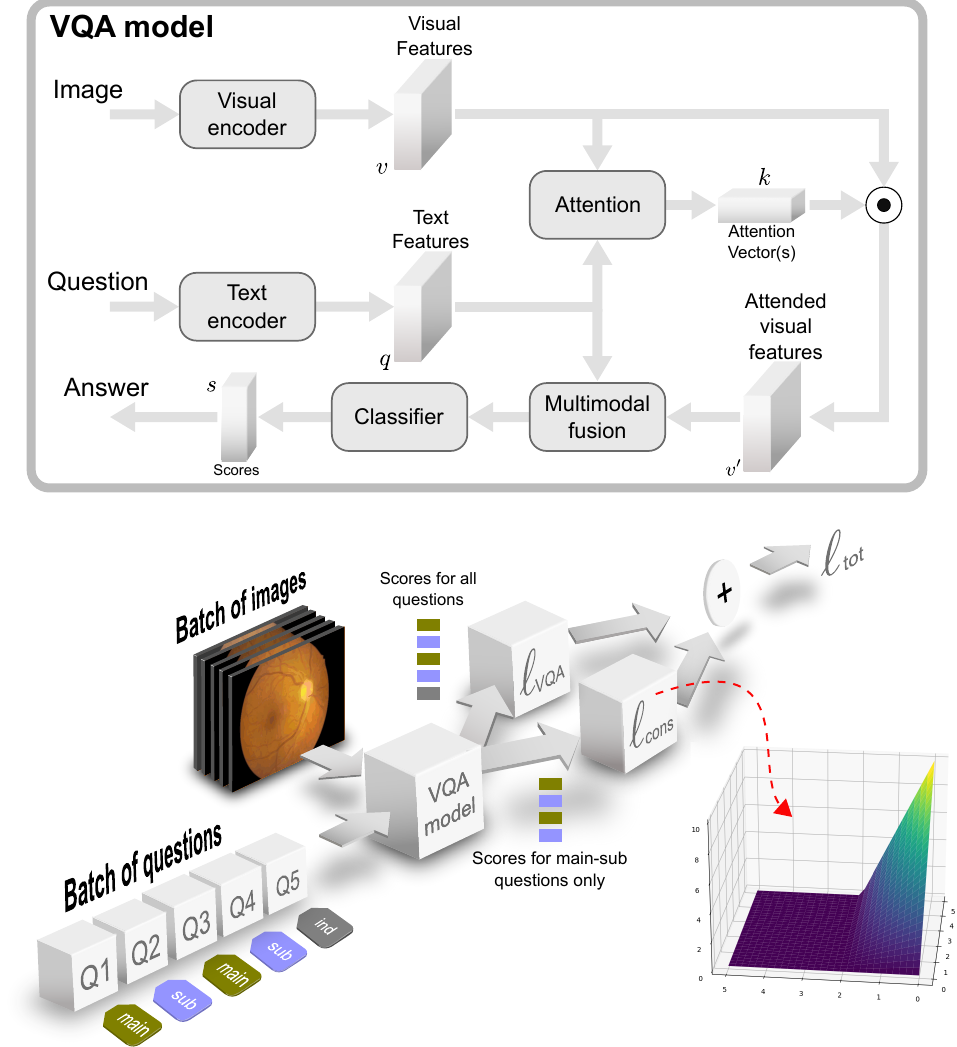

Visual Question Answering (VQA) models take an image and a natural-language question as input and infer the answer to the question. Recently, VQA systems in medical imaging have gained popularity thanks to potential advantages such as patient engagement and second opinions for clinicians. While most research efforts have focused on improving architectures and overcoming data-related limitations, answer consistency has been overlooked even though it plays a critical role in establishing trustworthy models. In this work, we propose a novel loss function and a corresponding training procedure that allow relations between questions to be included in the training process. Specifically, we consider the case where implications between perception and reasoning questions are known a priori. To show the benefits of our approach, we evaluate it on the clinically relevant task of Diabetic Macular Edema (DME) staging from fundus imaging. Our experiments show that our method outperforms state-of-the-art baselines, not only by improving model consistency, but also in terms of overall model accuracy. Our code and data are available at https://github.com/sergiotasconmorales/consistency_vqa.
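The abstract does not spell out how consistency is measured, so the following is only an illustrative sketch of what "answer consistency under a known implication" could mean. It assumes a hypothetical implication of the form "if the reasoning question is answered `premise`, the related perception question must be answered `required`"; the function names, the pair representation, and the default answer strings are assumptions made for this example and are not taken from the paper or its repository.

```python
from typing import List, Tuple

# Hypothetical representation: each pair holds the model's answer to a
# reasoning question and its answer to a related perception question.
Pair = Tuple[str, str]


def violates(reasoning_answer: str, perception_answer: str,
             premise: str = "yes", required: str = "yes") -> bool:
    """Return True when the assumed implication is broken.

    The implication is broken when the premise holds for the reasoning
    answer but the perception answer differs from the required one.
    """
    return reasoning_answer == premise and perception_answer != required


def consistency(pairs: List[Pair]) -> float:
    """Fraction of answer pairs that do not break the assumed implication."""
    if not pairs:
        return 1.0  # no pairs means there is nothing to contradict
    violations = sum(violates(r, p) for r, p in pairs)
    return 1.0 - violations / len(pairs)


if __name__ == "__main__":
    answers = [("yes", "yes"), ("yes", "no"), ("no", "no"), ("no", "yes")]
    print(consistency(answers))
```

Under this assumed definition, only the pair where the premise holds and the perception answer contradicts it counts as inconsistent; pairs where the premise does not hold are never violations, which is how a logical implication behaves.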

Datasets

Introduced in the Paper:

DME VQA dataset
