From $t$-SNE to UMAP with contrastive learning

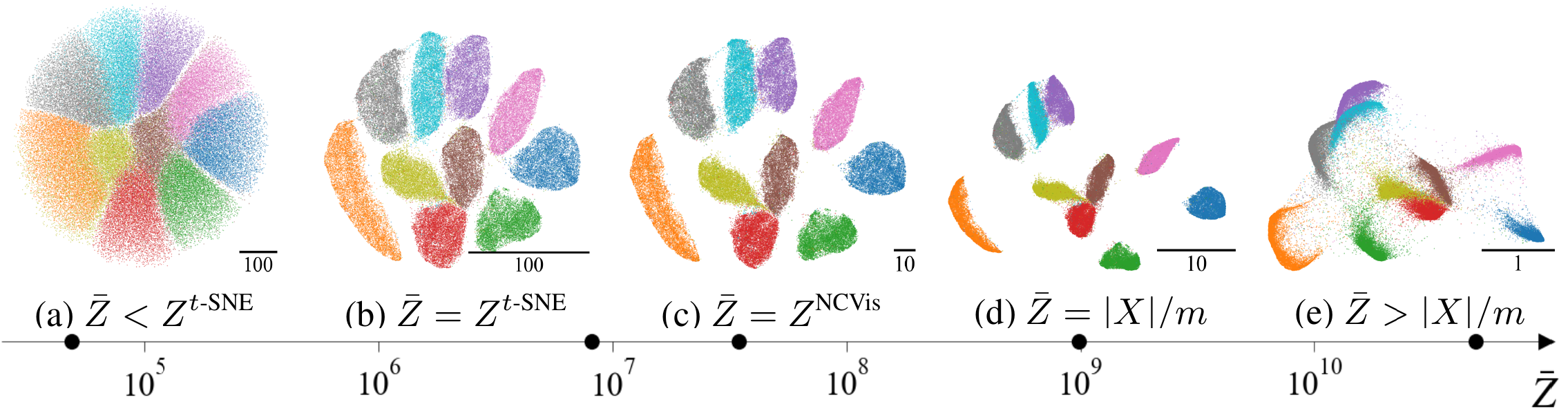

Neighbor embedding methods $t$-SNE and UMAP are the de facto standard for visualizing high-dimensional datasets. Motivated from entirely different viewpoints, their loss functions appear to be unrelated. In practice, they yield strongly differing embeddings and can suggest conflicting interpretations of the same data. The fundamental reasons for this and, more generally, the exact relationship between $t$-SNE and UMAP have remained unclear. In this work, we uncover their conceptual connection via a new insight into contrastive learning methods. Noise-contrastive estimation can be used to optimize $t$-SNE, while UMAP relies on negative sampling, another contrastive method. We find the precise relationship between these two contrastive methods and provide a mathematical characterization of the distortion introduced by negative sampling. Visually, this distortion results in UMAP generating more compact embeddings with tighter clusters compared to $t$-SNE. We exploit this new conceptual connection to propose and implement a generalization of negative sampling, allowing us to interpolate between (and even extrapolate beyond) $t$-SNE and UMAP and their respective embeddings. Moving along this spectrum of embeddings leads to a trade-off between discrete / local and continuous / global structures, mitigating the risk of over-interpreting ostensible features of any single embedding. We provide a PyTorch implementation.

PDF Abstract

CIFAR-10

CIFAR-10

MNIST

MNIST