CoSformer: Detecting Co-Salient Object with Transformers

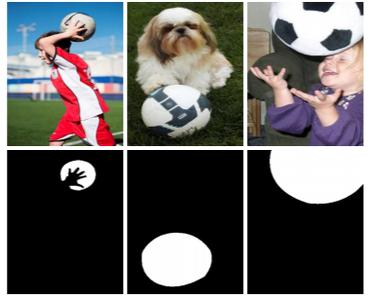

Co-Salient Object Detection (CoSOD) aims to simulate the human visual system by discovering the common and salient objects in a group of relevant images. Recent methods, typically built on sophisticated deep learning models, have greatly improved performance on the CoSOD task. However, two major drawbacks remain to be addressed: 1) sub-optimal modeling of inter-image relationships; 2) lack of consideration of inter-image separability. In this paper, we propose the Co-Salient Object Detection Transformer (CoSformer) network to capture both salient and common visual patterns from multiple images. By leveraging the Transformer architecture, the proposed method addresses the influence of input order and greatly improves the stability of the CoSOD task. We also introduce a novel concept of inter-image separability. We construct a contrastive learning scheme to model inter-image separability and learn a more discriminative embedding space that distinguishes true common objects from noisy objects. Extensive experiments on three challenging benchmarks, i.e., CoCA, CoSOD3k, and CoSal2015, demonstrate that CoSformer outperforms cutting-edge models and achieves a new state of the art. We hope that CoSformer can motivate future research on more visual co-analysis tasks.
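The claim about input order can be illustrated with a generic property of self-attention: without positional encodings, permuting the input tokens only permutes the outputs in the same way. The sketch below is not the CoSformer implementation; it is a minimal single-head attention layer in plain Python, where the function names, the random projection matrices, and the treatment of each image as one feature vector are all assumptions made for illustration.

```python
import math
import random

def matmul(a, b):
    # Plain-Python matrix product of two lists of rows.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    # Numerically stable softmax over one row of attention scores.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens, w_q, w_k, w_v):
    # Single-head scaled dot-product attention, with no positional encoding.
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / scale for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)

rng = random.Random(0)
n_images, dim = 5, 8  # hypothetical group size and feature width

def rand_matrix(rows, cols):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# One hypothetical feature vector per image in the group.
group = rand_matrix(n_images, dim)
w_q, w_k, w_v = (rand_matrix(dim, dim) for _ in range(3))

# Shuffle the order in which the images are fed to the layer.
perm = list(range(n_images))
rng.shuffle(perm)
shuffled = [group[i] for i in perm]

out = self_attention(group, w_q, w_k, w_v)
out_shuffled = self_attention(shuffled, w_q, w_k, w_v)

# Each image receives the same output regardless of its position in the input.
order_independent = all(
    math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-9)
    for i, p in enumerate(perm)
    for a, b in zip(out[p], out_shuffled[i])
)
print(order_independent)
```

Under these assumptions, the per-image output depends only on the set of images in the group, not on the order in which they are presented, which is the kind of stability the abstract attributes to the Transformer design.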

MS COCO

DUTS

CoSal2015
