Deep Robust Clustering by Contrastive Learning

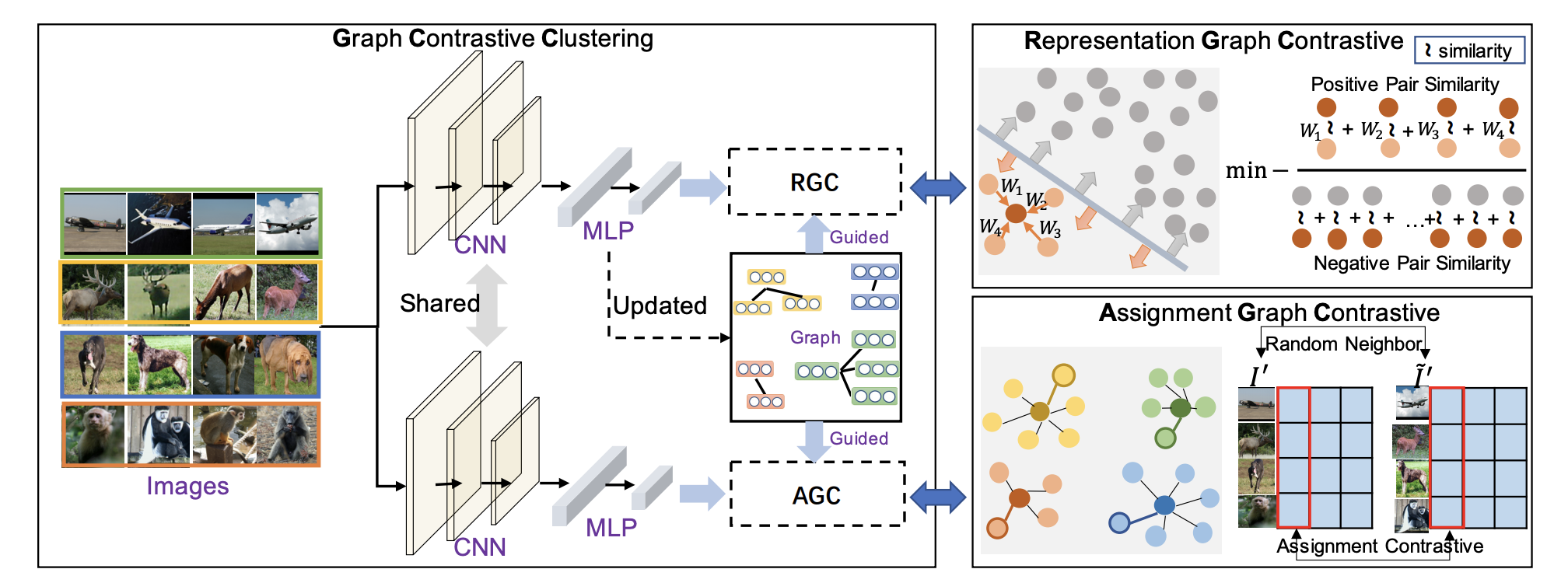

Recently, many unsupervised deep learning methods have been proposed to learn clustering from unlabelled data. By introducing data augmentation, most of the latest methods approach deep clustering from the perspective that an original image and its transformation should share a similar semantic clustering assignment. However, the representation features can be quite different even if they are assigned to the same cluster, since the softmax function is sensitive only to the maximum value. This may result in high intra-class diversity in the representation feature space, which leads to unstable local optima and thus harms clustering performance. To address this drawback, we propose Deep Robust Clustering (DRC). Unlike existing methods, DRC approaches deep clustering from two perspectives, semantic clustering assignment and representation features, which increases inter-class diversity and decreases intra-class diversity simultaneously. Furthermore, by investigating the internal relationship between mutual information and contrastive learning, we summarize a general framework that can turn any mutual-information maximization problem into a contrastive-loss minimization problem. We apply this framework in DRC to learn invariant features and robust clusters. Extensive experiments on six widely adopted deep clustering benchmarks demonstrate the superiority of DRC in both stability and accuracy; for example, DRC attains 71.6% mean accuracy on CIFAR-10, which is 7.1% higher than state-of-the-art results.
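The abstract's two technical ideas, that a softmax assignment depends only on its largest output and that mutual-information maximization can be recast as contrastive-loss minimization, can be illustrated with a small NumPy sketch. It assumes the standard InfoNCE loss, whose well-known bound I(X;Y) ≥ log N − L_InfoNCE is one instance of that connection, and applies it at two levels: representation features (one row per sample) and cluster assignments (one row per cluster, taken across the batch). The names `info_nce` and `drc_style_loss`, the temperature of 0.5, and the equal weighting of the two terms are illustrative assumptions, not DRC's published objective.

```python
import numpy as np


def softmax(logits, axis=-1):
    """Numerically stable softmax along `axis`."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def info_nce(a, b, temperature=0.5):
    """InfoNCE loss between two aligned views (assumed standard form).

    Row i of `a` and row i of `b` are the positive pair; every other row of
    `b` is a negative for row i of `a`. Rows are L2-normalised, so the
    similarity is cosine similarity scaled by 1 / temperature.
    """
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    sim = a @ b.T / temperature                      # (n, n) similarities
    row_max = sim.max(axis=1, keepdims=True)         # stable log-sum-exp
    log_norm = row_max + np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    log_prob = sim - log_norm                        # log-softmax per row
    return -np.mean(np.diag(log_prob))               # mean over positive pairs


def drc_style_loss(feat_x, feat_aug, logits_x, logits_aug, temperature=0.5):
    """Hypothetical two-level contrastive loss (feature + assignment)."""
    # Feature level: each sample's features should match its augmentation.
    feature_loss = info_nce(feat_x, feat_aug, temperature)
    # Assignment level: softmax over clusters per sample, then compare each
    # cluster's column of probabilities across the batch (transpose -> rows).
    p_x = softmax(logits_x, axis=1)
    p_aug = softmax(logits_aug, axis=1)
    cluster_loss = info_nce(p_x.T, p_aug.T, temperature)
    return feature_loss + cluster_loss


# Two samples with very different output distributions share one cluster,
# because the assignment only looks at the maximum softmax value.
logits_demo = np.array([[5.0, 1.0, 0.0], [2.0, 1.9, 1.8]])
print(softmax(logits_demo).argmax(axis=1))  # → [0 0]

# Toy batch: 8 samples, 16-dim features, 3 clusters; the "augmented" view
# is a small random perturbation of the original view.
rng = np.random.default_rng(0)
n, d, k = 8, 16, 3
feat = rng.normal(size=(n, d))
feat_aug = feat + 0.1 * rng.normal(size=(n, d))
logits = rng.normal(size=(n, k))
logits_aug = logits + 0.1 * rng.normal(size=(n, k))
loss = drc_style_loss(feat, feat_aug, logits, logits_aug)
print(f"toy loss: {loss:.3f}")
```

Because InfoNCE averages a negative log-softmax over the positive pairs, lowering it pulls each sample toward its own augmentation while pushing it away from the other samples in the batch; applying it to both feature rows and cluster columns is what lets a sketch like this act on intra-class and inter-class diversity at the same time.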


Datasets: CIFAR-10, CIFAR-100, STL-10, Tiny ImageNet