InverSynth: Deep Estimation of Synthesizer Parameter Configurations from Audio Signals

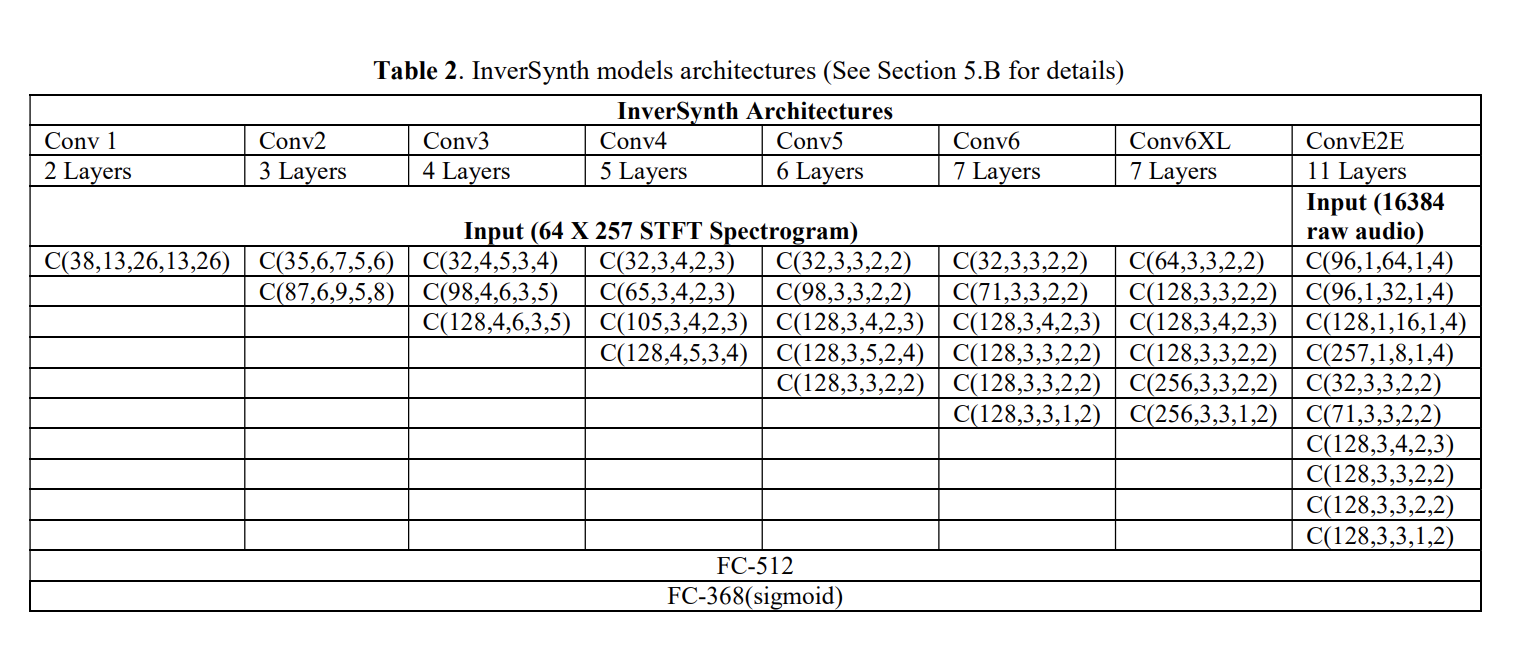

Sound synthesis is a complex field that requires domain expertise. Manual tuning of synthesizer parameters to match a specific sound can be an exhaustive task, even for experienced sound engineers. In this paper, we introduce InverSynth, an automatic method for tuning synthesizer parameters to match a given input sound. InverSynth is based on strided convolutional neural networks and is capable of inferring the synthesizer parameter configuration from the input spectrogram and even from the raw audio. The effectiveness of InverSynth is demonstrated on a subtractive synthesizer with four frequency-modulated oscillators, an envelope generator, and a gater effect. We present extensive quantitative and qualitative results that showcase the superiority of InverSynth over several baselines. Furthermore, we show that network depth is an important factor that contributes to prediction accuracy.
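To make the spectrogram-to-parameters idea concrete, the following is a minimal NumPy sketch under stated assumptions, not the InverSynth architecture itself. It renders a single frequency-modulated tone, computes a log-magnitude spectrogram, passes it through one untrained strided convolution, and maps the pooled features to a parameter vector. The helper names (`fm_tone`, `log_spectrogram`, `strided_conv2d`), the sample rate, the FFT and kernel sizes, the random weights, and the choice of four output parameters are all illustrative assumptions.

```python
import numpy as np

def fm_tone(carrier_hz, mod_hz, mod_index, sr=16000, seconds=1.0):
    """One frequency-modulated sine oscillator (illustrative, not the paper's synthesizer)."""
    t = np.arange(int(sr * seconds)) / sr
    return np.sin(2 * np.pi * carrier_hz * t
                  + mod_index * np.sin(2 * np.pi * mod_hz * t))

def log_spectrogram(signal, n_fft=512, hop=256):
    """Log-magnitude STFT with a Hann window, shape (n_fft // 2 + 1, n_frames)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop: i * hop + n_fft] * window
                       for i in range(n_frames)])
    return np.log1p(np.abs(np.fft.rfft(frames, axis=1))).T

def strided_conv2d(x, kernel, stride=2):
    """Valid 2D cross-correlation with the given stride, followed by ReLU."""
    kh, kw = kernel.shape
    out_h = (x.shape[0] - kh) // stride + 1
    out_w = (x.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[i * stride: i * stride + kh, j * stride: j * stride + kw]
            out[i, j] = np.sum(patch * kernel)
    return np.maximum(out, 0.0)

rng = np.random.default_rng(0)

# Assumed input: a one-second FM tone standing in for a synthesizer patch.
audio = fm_tone(carrier_hz=440.0, mod_hz=110.0, mod_index=2.0)
spec = log_spectrogram(audio)                      # (frequency bins, frames)

# One strided layer roughly halves each axis; a deep network would stack several.
features = strided_conv2d(spec, rng.normal(size=(3, 3)) * 0.1)

# Hypothetical readout: average over time, then an untrained linear map
# to n_params outputs (the value 4 is an arbitrary choice for this sketch).
n_params = 4
pooled = features.mean(axis=1)
weights = rng.normal(size=(n_params, pooled.shape[0])) * 0.01
estimate = weights @ pooled
```

Because the weights are random, `estimate` carries no meaning here. The sketch only shows how the stride downsamples the spectrogram on its way to a fixed-size parameter vector; in a trained model the weights would be fit on pairs of synthesized audio and known parameter settings.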
