Deformable 3D Convolution for Video Super-Resolution

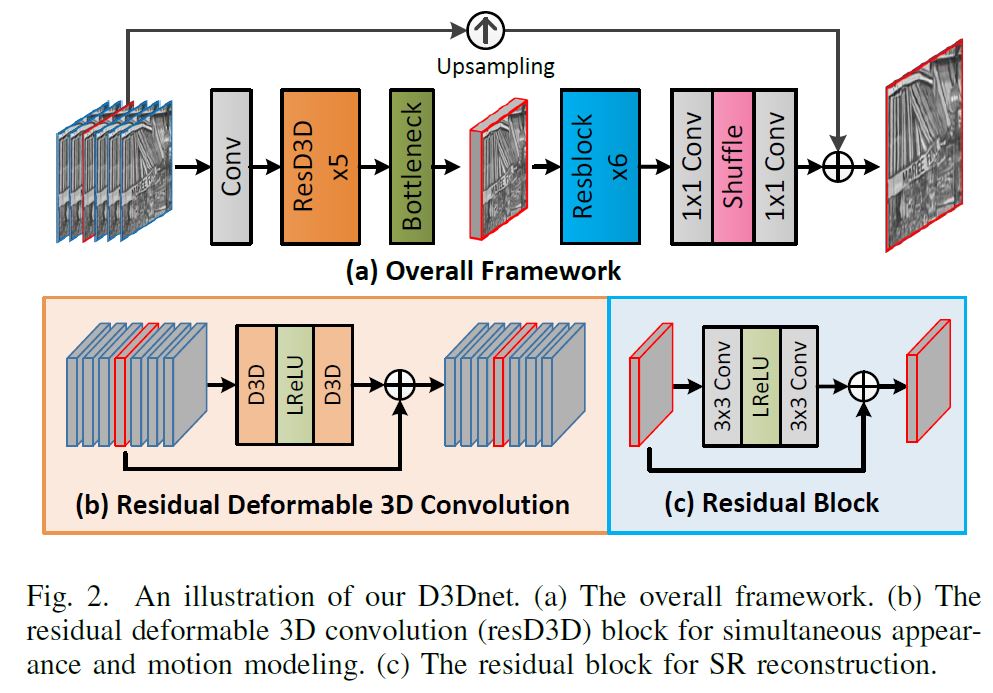

The spatio-temporal information among video sequences is significant for video super-resolution (SR). However, the spatio-temporal information cannot be fully used by existing video SR methods since spatial feature extraction and temporal motion compensation are usually performed sequentially. In this paper, we propose a deformable 3D convolution network (D3Dnet) to incorporate spatio-temporal information from both spatial and temporal dimensions for video SR. Specifically, we introduce deformable 3D convolution (D3D) to integrate deformable convolution with 3D convolution, obtaining both superior spatio-temporal modeling capability and motion-aware modeling flexibility. Extensive experiments have demonstrated the effectiveness of D3D in exploiting spatio-temporal information. Comparative results show that our network achieves state-of-the-art SR performance. Code is available at: https://github.com/XinyiYing/D3Dnet.

PDF Abstract

Vimeo90K

Vimeo90K

MSU Video Super Resolution Benchmark: Detail Restoration

MSU Video Super Resolution Benchmark: Detail Restoration