Degrade is Upgrade: Learning Degradation for Low-light Image Enhancement

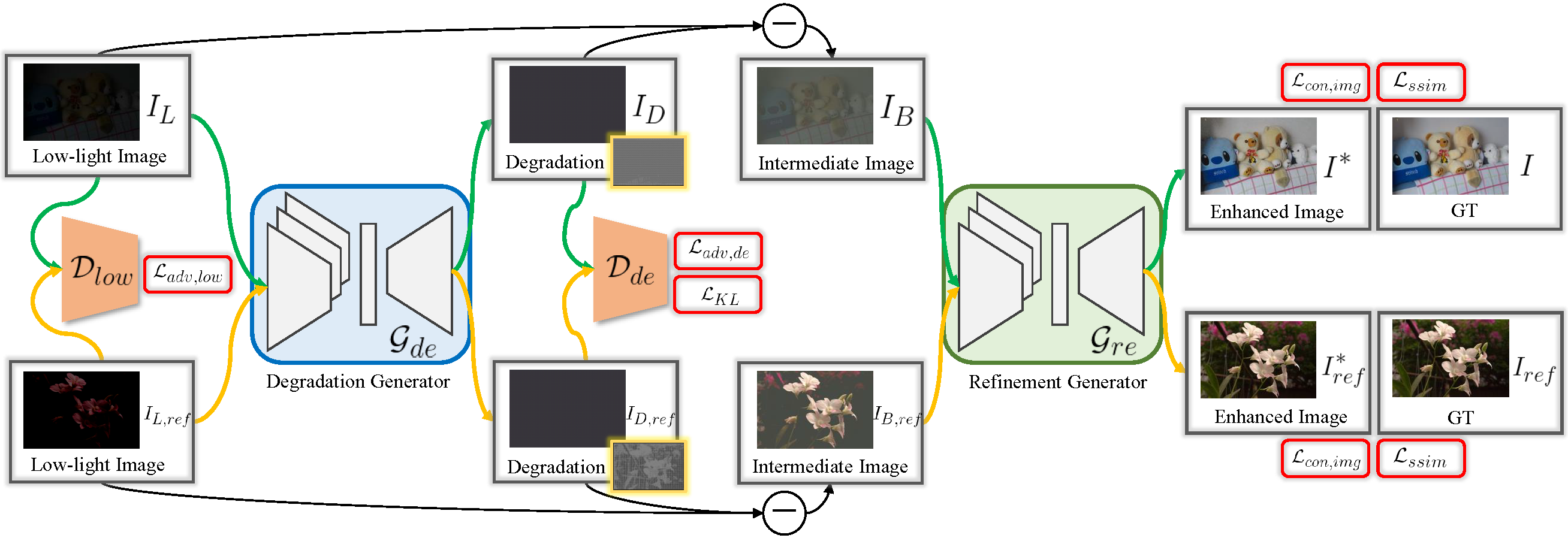

Low-light image enhancement aims to improve an image's visibility while preserving its visual naturalness. Unlike existing methods, which tend to perform relighting directly and neglect the recovery of fidelity and naturalness, we investigate the intrinsic degradation and relight the low-light image while refining its details and color in two steps. Inspired by the color image formulation (diffuse illumination color plus environment illumination color), we first estimate the degradation from low-light inputs to simulate the distortion of the environment illumination color, and then refine the content to recover the loss of the diffuse illumination color. To this end, we propose a novel Degradation-to-Refinement Generation Network (DRGN). Its distinctive features are: 1) a novel two-step generation network for degradation learning and content refinement, which is not only superior to one-step methods but can also synthesize sufficient paired samples to benefit model training; 2) a multi-resolution fusion network that represents the target information (degradation or content) in a multi-scale cooperative manner, which is more effective for addressing complex unmixing problems. Extensive experiments on both the enhancement task and the joint detection task verify the effectiveness and efficiency of the proposed method, which surpasses the SOTA by 0.70 dB on average and by 3.18% in mAP, respectively. The code is available at https://github.com/kuijiang0802/DRGN.
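The two-step data flow described in the abstract can be illustrated with a toy NumPy sketch. This is not the DRGN implementation: the learned networks are replaced by hand-written placeholder functions, the degradation is assumed to be additive (low = clean + degradation), and all names (`multi_resolution_fuse`, `estimate_degradation`, `refine_content`, `enhance`) are hypothetical. It only shows the order of operations (degradation estimation, then content refinement) and one simple way to fuse predictions made at several resolutions.

```python
import numpy as np


def downsample(img, factor):
    """Average-pool an (H, W, C) image by an integer factor (H, W divisible by it)."""
    h, w, c = img.shape
    return img.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))


def upsample(img, factor):
    """Nearest-neighbour upsampling by an integer factor."""
    return img.repeat(factor, axis=0).repeat(factor, axis=1)


def multi_resolution_fuse(img, branch, factors=(1, 2, 4)):
    """Run `branch` at several scales and average the results at full resolution."""
    outputs = []
    for f in factors:
        scaled = downsample(img, f) if f > 1 else img
        out = branch(scaled)
        outputs.append(upsample(out, f) if f > 1 else out)
    return np.mean(outputs, axis=0)


def estimate_degradation(low):
    # Placeholder for a learned degradation estimator (assumption):
    # a negative, darkening offset that is larger for darker pixels.
    return multi_resolution_fuse(low, lambda x: -0.5 * (1.0 - x))


def refine_content(coarse):
    # Placeholder for a learned refinement network (assumption):
    # a small contrast residual around the mean intensity.
    return multi_resolution_fuse(coarse, lambda x: 0.1 * (x - x.mean()))


def enhance(low):
    """Step 1: remove the estimated degradation. Step 2: add a refinement residual."""
    degradation = estimate_degradation(low)
    coarse = np.clip(low - degradation, 0.0, 1.0)
    return np.clip(coarse + refine_content(coarse), 0.0, 1.0)
```

Calling `enhance` on an `(H, W, 3)` float array in `[0, 1]` whose height and width are divisible by 4 returns a brighter array of the same shape. In a real system, both placeholder functions would be trained networks.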

LOL