DeltaGAN: Towards Diverse Few-shot Image Generation with Sample-Specific Delta

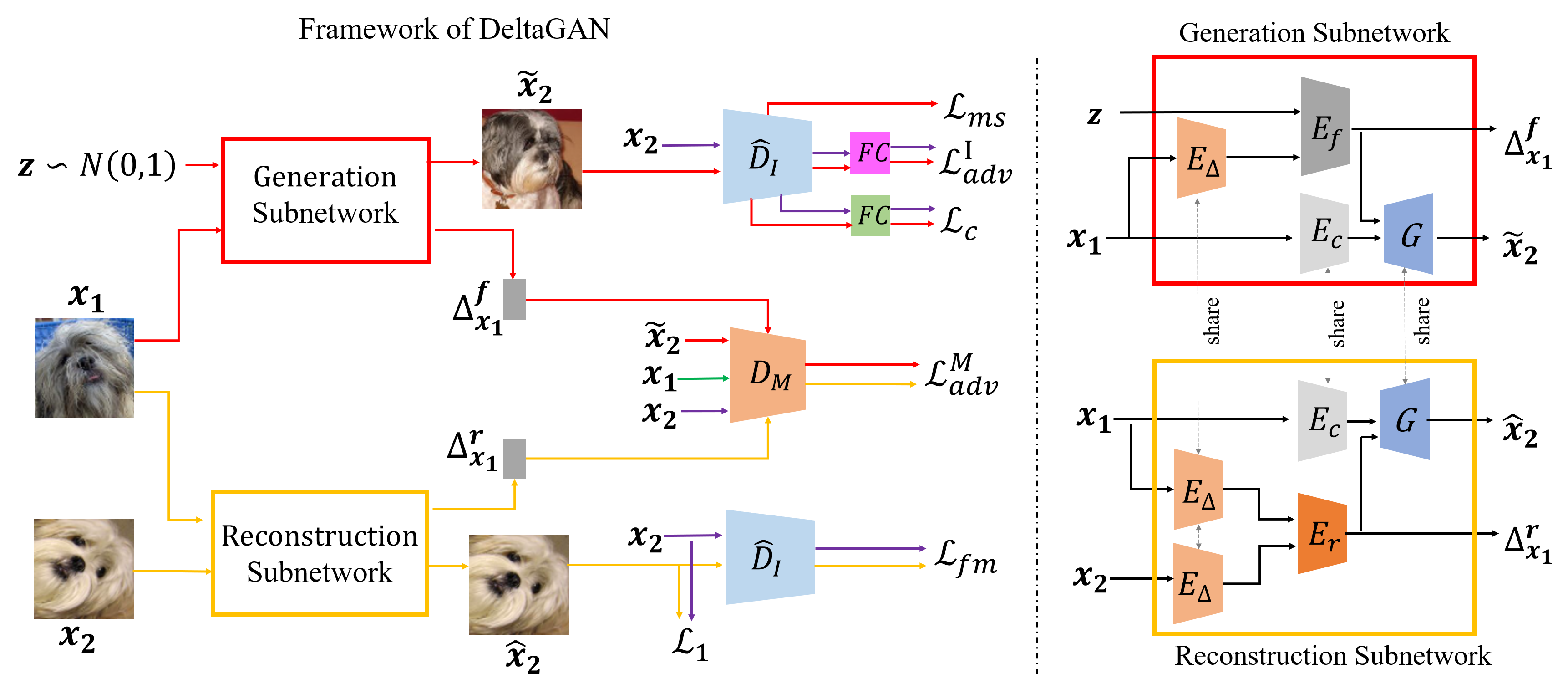

Few-shot image generation, the task of learning to generate new images for a novel category from only a few images, has attracted increasing research interest. Several state-of-the-art works have yielded impressive results, but the diversity of the generated images is still limited. In this work, we propose a novel Delta Generative Adversarial Network (DeltaGAN), which consists of a reconstruction subnetwork and a generation subnetwork. The reconstruction subnetwork captures the intra-category transformation, i.e., the "delta", between same-category pairs. The generation subnetwork generates a sample-specific "delta" for an input image, which is combined with this input image to generate a new image within the same category. In addition, an adversarial delta matching loss is designed to link the two subnetworks together. Extensive experiments on five few-shot image datasets demonstrate the effectiveness of the proposed method.
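
The data flow described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: images are stood in for by short lists of floats, the learned delta encoder is replaced by a plain elementwise difference, and the learned delta generator is replaced by input-scaled Gaussian noise. All function names (`extract_delta`, `apply_delta`, `sample_delta`, `reconstruct`, `generate`) are hypothetical, and the adversarial delta matching loss is not modeled.

```python
import random

def extract_delta(x1, x2):
    # Reconstruction path (toy assumption): the "delta" between a
    # same-category pair is just the elementwise difference.
    return [b - a for a, b in zip(x1, x2)]

def apply_delta(x, delta):
    # Combine an input with a delta to obtain a new sample.
    return [a + d for a, d in zip(x, delta)]

def sample_delta(x, rng, scale=0.1):
    # Generation path (toy assumption): the delta depends on both the
    # input x and random noise, so it is sample-specific.
    return [scale * rng.gauss(0.0, 1.0) * (1.0 + abs(a)) for a in x]

def reconstruct(x1, x2):
    # Apply the delta extracted from (x1, x2) back to x1 to recover x2.
    return apply_delta(x1, extract_delta(x1, x2))

def generate(x, rng):
    # Produce a new sample from a single input and a sampled delta.
    return apply_delta(x, sample_delta(x, rng))

rng = random.Random(0)
x1 = [0.5, 1.0, 0.25, 2.0]
x2 = [1.0, 0.5, 0.75, 1.5]
recon = reconstruct(x1, x2)
new_a = generate(x1, rng)
new_b = generate(x1, rng)
```

In this sketch, `reconstruct` recovers the second element of a pair, while repeated calls to `generate` on the same input give different outputs, which is the kind of diversity the abstract aims for. In the actual method both paths would be learned networks, and the matching loss would tie the generated deltas to the ones observed in real pairs.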

CIFAR-100

Oxford 102 Flower

EMNIST

NABirds
