Densely Deformable Efficient Salient Object Detection Network

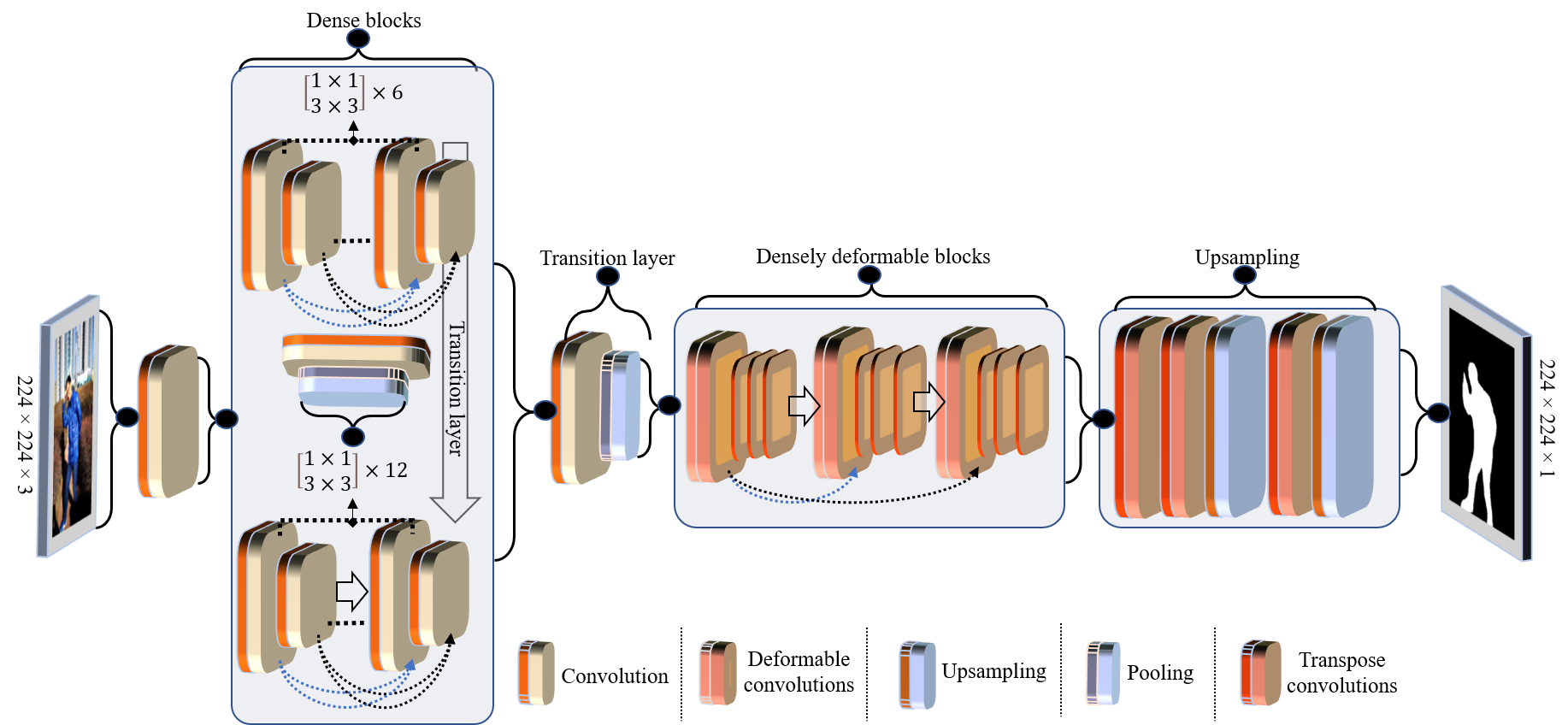

Salient object detection (SOD) using RGB-D data has recently emerged, and some current models produce adequately precise results. However, they have limited generalization ability and high computational complexity. In this paper, inspired by the strong background/foreground separation abilities of deformable convolutions, we employ them in our Densely Deformable Network (DDNet) to achieve efficient SOD. The salient regions from the densely deformable convolutions are further refined using transposed convolutions to generate the saliency maps. Quantitative and qualitative evaluations on a recent SOD dataset against 22 competing techniques show our method's efficiency and effectiveness. We also evaluate on our own cross-dataset, surveillance-SOD (S-SOD), to check how well the trained models apply to diverse scenarios. The results indicate that current models have limited generalization potential, demanding further research in this direction. Our code and new dataset will be publicly available at https://github.com/tanveer-hussain/EfficientSOD

Results from the Paper

Ranked #4 on RGB-D Salient Object Detection on SIP (Average MAE metric, using extra training data)
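
The MAE metric named in the ranking above is commonly computed as the mean per-pixel absolute difference between a predicted saliency map and its ground-truth mask, both scaled to [0, 1], and then averaged over the images of a dataset. The following is a minimal sketch under that assumption, not the authors' evaluation code; the function names `mean_absolute_error` and `average_mae` are hypothetical, and maps are represented as plain nested lists to keep the example dependency-free.

```python
def mean_absolute_error(pred, gt):
    """Mean per-pixel absolute difference between two 2D maps.

    Both maps are assumed to be nested lists of floats in [0, 1]
    with identical shapes (an assumption of this sketch).
    """
    if len(pred) != len(gt) or any(len(p) != len(g) for p, g in zip(pred, gt)):
        raise ValueError("prediction and ground truth must have the same shape")

    total = 0.0
    count = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            total += abs(p - g)
            count += 1

    if count == 0:
        raise ValueError("maps must contain at least one pixel")
    return total / count


def average_mae(pairs):
    """Average the per-image MAE over (prediction, ground truth) pairs."""
    scores = [mean_absolute_error(pred, gt) for pred, gt in pairs]
    if not scores:
        raise ValueError("at least one image pair is required")
    return sum(scores) / len(scores)


# Toy 2x2 example: two pixels match exactly, two are off by 0.5.
prediction = [[0.0, 1.0], [0.5, 0.5]]
ground_truth = [[0.0, 1.0], [1.0, 0.0]]
score = mean_absolute_error(prediction, ground_truth)
```

Lower values are better: a perfect prediction scores 0, and the toy pair above scores 0.25.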
