Discrete-time Temporal Network Embedding via Implicit Hierarchical Learning in Hyperbolic Space

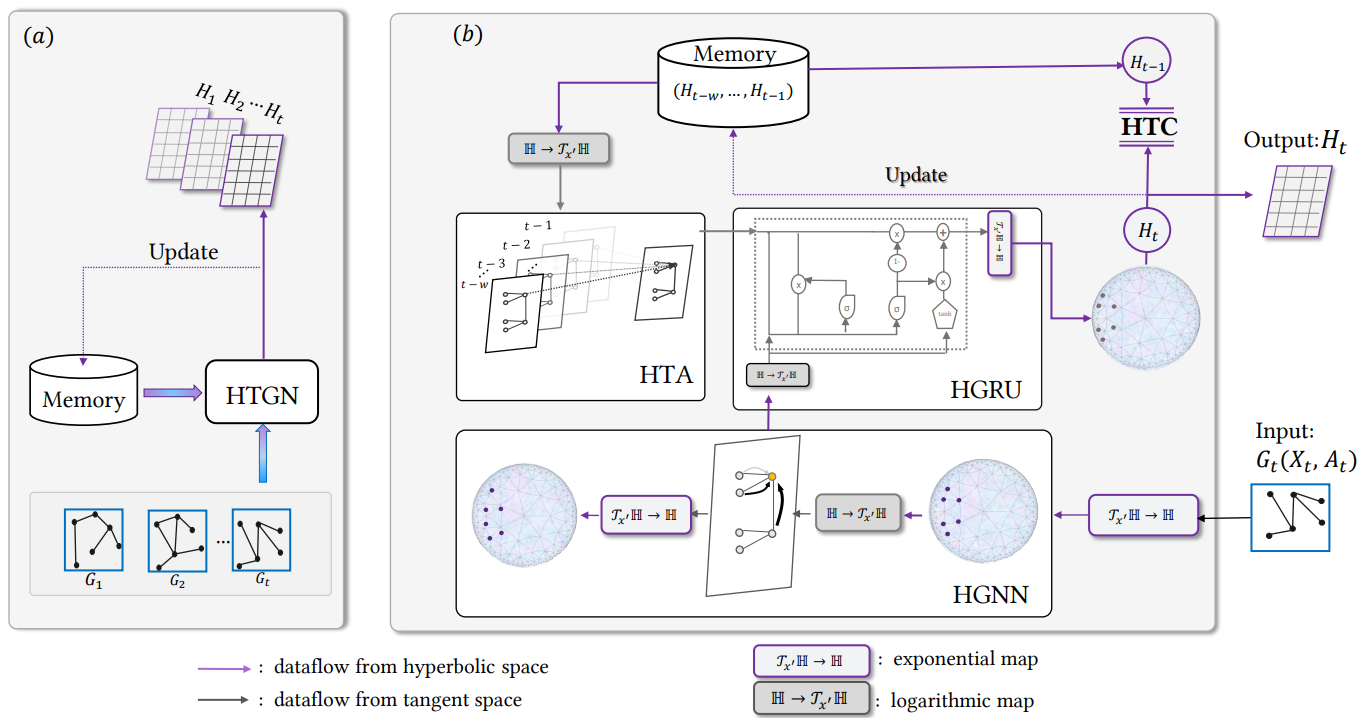

Representation learning over temporal networks has drawn considerable attention in recent years. Existing efforts mainly model structural dependencies and temporally evolving regularities in Euclidean space, which underestimates the inherently complex and hierarchical properties of many real-world temporal networks and leads to sub-optimal embeddings. To explore these properties of a complex temporal network, we propose a hyperbolic temporal graph network (HTGN) that takes full advantage of the exponential capacity and hierarchical awareness of hyperbolic geometry. More specifically, HTGN maps the temporal graph into hyperbolic space and incorporates a hyperbolic graph neural network and a hyperbolic gated recurrent neural network to capture the evolving behaviors and implicitly preserve hierarchical information simultaneously. Furthermore, in hyperbolic space, we propose two important modules that enable HTGN to successfully model temporal networks: (1) a hyperbolic temporal contextual self-attention (HTA) module to attend to historical states and (2) a hyperbolic temporal consistency (HTC) module to ensure stability and generalization. Experimental results on multiple real-world datasets demonstrate the superiority of HTGN for temporal graph embedding, as it consistently outperforms competing methods by significant margins in various temporal link prediction tasks. Specifically, HTGN achieves AUC improvements of up to 9.98% for link prediction and 11.4% for new link prediction. Moreover, the ablation study further validates the representational ability of hyperbolic geometry and the effectiveness of the proposed HTA and HTC modules.
