Dual Memory Neural Computer for Asynchronous Two-view Sequential Learning

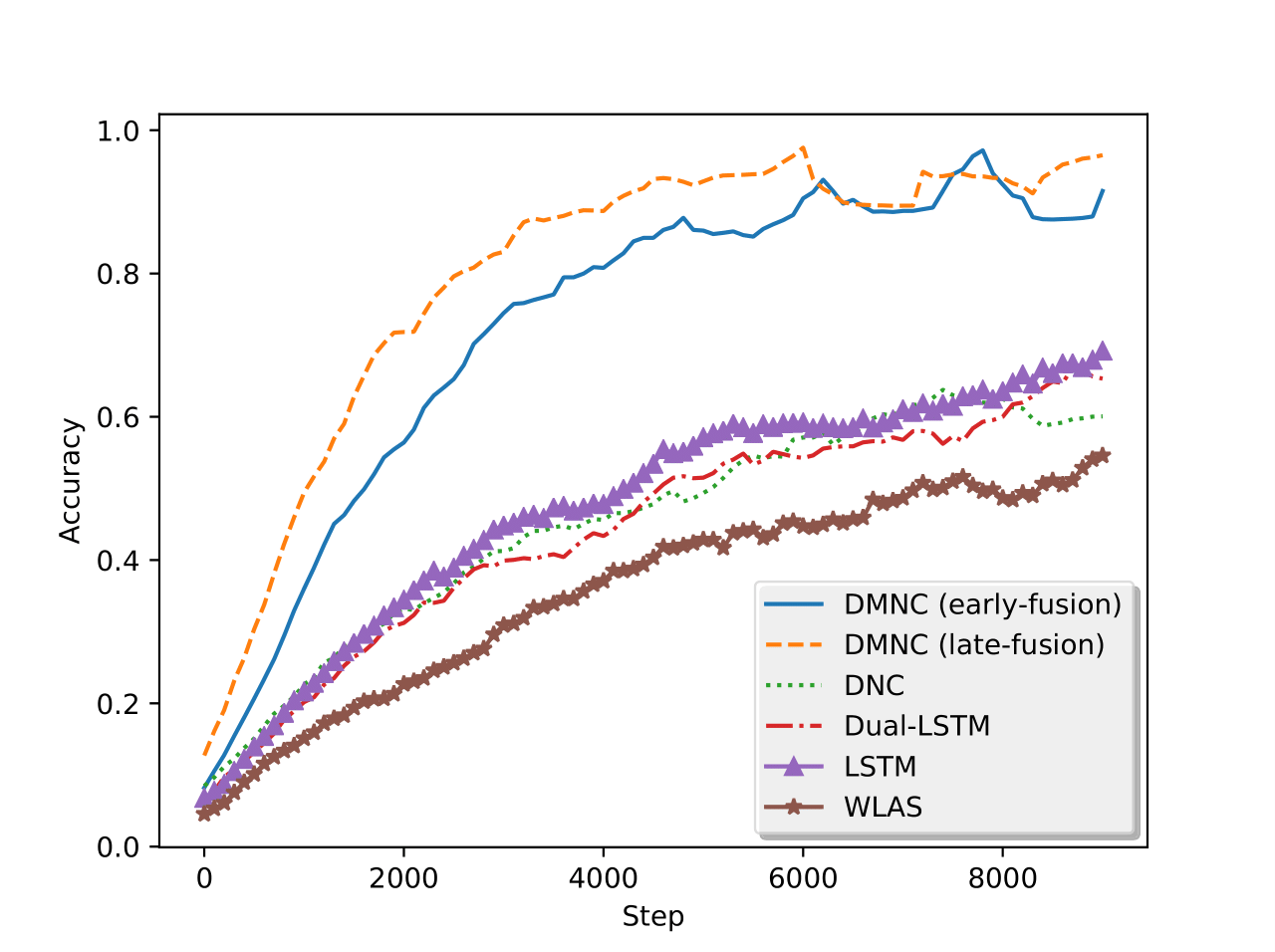

One of the core tasks in multi-view learning is to capture relations among views. For sequential data, these relations not only span across views but also extend along the length of each view, forming long-term intra-view and inter-view interactions. In this paper, we present a new memory-augmented neural network that models these complex interactions between two asynchronous sequential views. Our model uses two encoders, each reading from and writing to an external memory, to encode the input views. The memories capture the intra-view interactions and long-term dependencies during this encoding process. The system supports two modes of memory access, late-fusion and early-fusion, corresponding to late and early inter-view interactions. In the late-fusion mode, the two memories are separate and contain only view-specific contents. In the early-fusion mode, the two memories share the same addressing space, allowing cross-memory access. In both cases, a decoder combines the knowledge from the memories to make predictions over the output space. The resulting dual memory neural computer is evaluated on a comprehensive set of experiments, including a synthetic task of summing two sequences and the healthcare tasks of drug prescription and disease progression. The results demonstrate competitive performance against both traditional algorithms and deep learning methods designed for multi-view problems.
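The difference between the two access modes can be illustrated with a toy sketch. This is not the paper's implementation: it assumes a simple content-based read (softmax over key-slot dot products), omits writing, controllers, and the decoder, and all function names (`content_read`, `late_fusion_read`, `early_fusion_read`) are hypothetical. It only shows that in late-fusion each view's key addresses its own memory, while in early-fusion both keys address one shared space.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def content_read(memory, key):
    # Assumed read rule: attention weights are a softmax over
    # key-slot dot products; the read vector is the weighted sum of slots.
    weights = softmax([dot(slot, key) for slot in memory])
    width = len(memory[0])
    return [sum(w * slot[j] for w, slot in zip(weights, memory))
            for j in range(width)]

def late_fusion_read(mem1, mem2, key1, key2):
    # Late-fusion: each view's key only addresses its own memory.
    return content_read(mem1, key1) + content_read(mem2, key2)

def early_fusion_read(mem1, mem2, key1, key2):
    # Early-fusion: both memories form one addressing space,
    # so either key can retrieve content written by the other view.
    shared = mem1 + mem2
    return content_read(shared, key1) + content_read(shared, key2)

# Toy memories: view 1 has two slots, view 2 has one slot.
mem1 = [[1.0, 0.0], [0.0, 1.0]]
mem2 = [[2.0, 2.0]]
zero_key = [0.0, 0.0]  # a zero key yields uniform attention weights

late = late_fusion_read(mem1, mem2, zero_key, zero_key)
early = early_fusion_read(mem1, mem2, zero_key, zero_key)
```

With a zero key the attention is uniform, so the late-fusion read returns the per-memory averages, whereas the early-fusion read averages over all three slots for both views. In either mode, the concatenated read vectors are what a decoder would consume.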


MIMIC-III
