DualPoseNet: Category-level 6D Object Pose and Size Estimation Using Dual Pose Network with Refined Learning of Pose Consistency

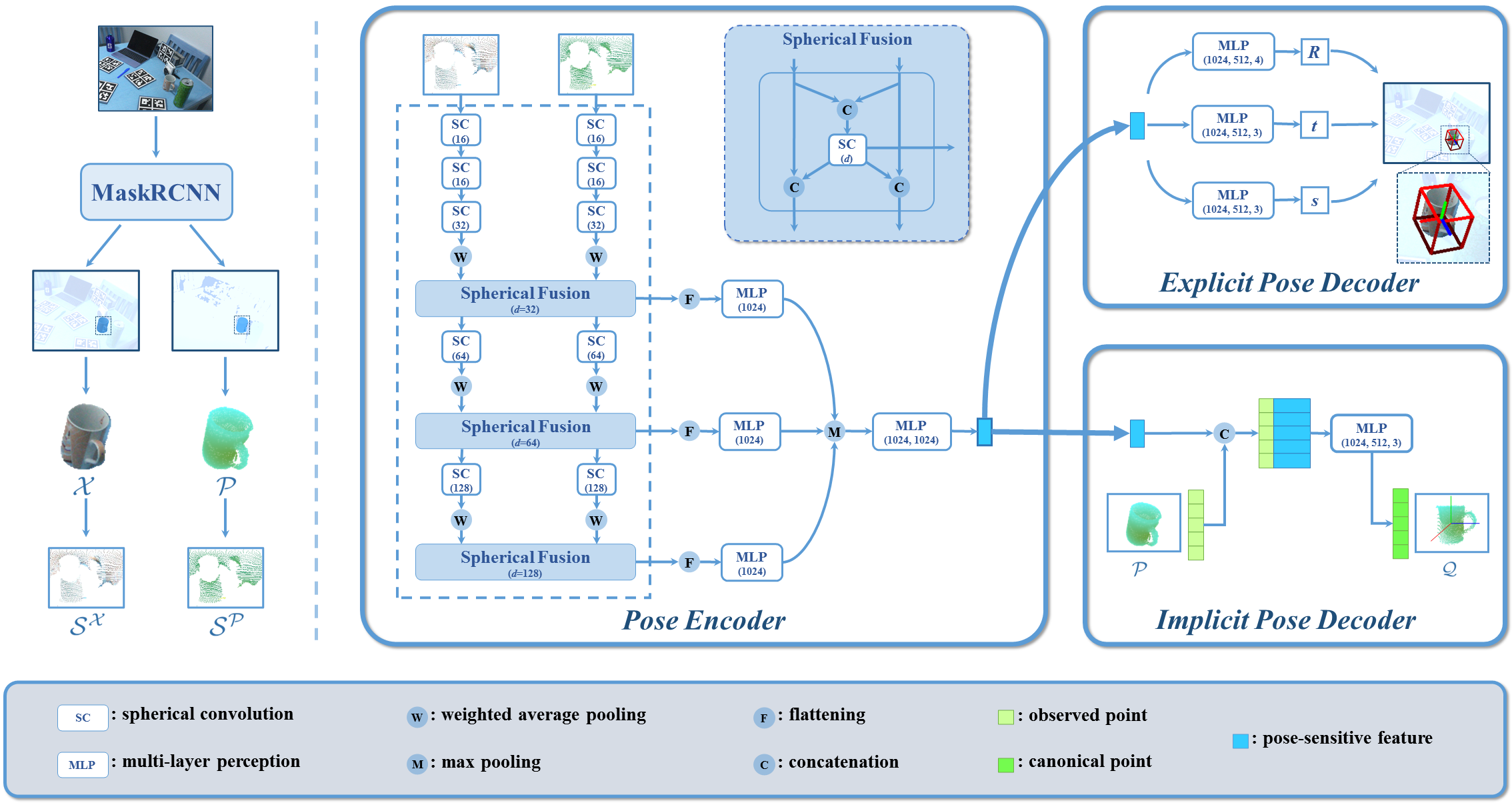

Category-level 6D object pose and size estimation aims to predict the full pose configuration (rotation, translation, and size) of object instances observed in single, arbitrary views of cluttered scenes. In this paper, we propose a new method for this task, the Dual Pose Network with refined learning of pose consistency, abbreviated as DualPoseNet. DualPoseNet stacks two parallel pose decoders on top of a shared pose encoder. The implicit decoder predicts object poses through a working mechanism different from that of the explicit decoder, so the two impose complementary supervision on the training of the pose encoder. We construct the encoder from spherical convolutions and design a Spherical Fusion module within it to better embed pose-sensitive features from the appearance and shape observations. Because no CAD models are available at test time, it is the novel introduction of the implicit decoder that enables refined pose prediction during testing, by enforcing consistency between the poses predicted by the two decoders through a self-adaptive loss term. Thorough experiments on benchmarks of both category-level and instance-level object pose datasets confirm the efficacy of our designs. DualPoseNet outperforms existing methods by a large margin in the high-precision regime. Our code is released publicly at https://github.com/Gorilla-Lab-SCUT/DualPoseNet.
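To make the idea of pose consistency between the two decoders concrete, the following is a minimal sketch and not the released implementation. It assumes, beyond what the abstract states, that the explicit decoder outputs a rotation `R`, translation `t`, and size `s`, and that the implicit decoder outputs a canonical-frame version of the observed points. The function names `rot_z`, `canonicalize`, and `consistency_residual`, and the exact form of the residual, are hypothetical choices for illustration.

```python
import numpy as np


def rot_z(theta):
    """Rotation matrix for an angle theta (radians) about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def canonicalize(points, R, t, s):
    """Map observed points (N, 3) into a canonical frame using an explicit pose.

    Assumed convention (not taken from the abstract): q = R^T (p - t) / ||s||.
    With points stored as rows, R^T (p - t) becomes (p - t) @ R.
    """
    return (points - t) @ R / np.linalg.norm(s)


def consistency_residual(points, R, t, s, canonical_pred):
    """Mean distance between the explicitly canonicalized points and the
    canonical points that a hypothetical implicit decoder would predict."""
    diff = canonicalize(points, R, t, s) - canonical_pred
    return float(np.mean(np.linalg.norm(diff, axis=1)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    R = rot_z(0.3)
    t = np.array([0.1, -0.2, 0.5])
    s = np.array([0.2, 0.3, 0.1])
    canonical = rng.uniform(-0.5, 0.5, size=(64, 3))
    # Synthesize an observation that is exactly consistent with (R, t, s).
    observed = np.linalg.norm(s) * canonical @ R.T + t
    print("consistent pose:", consistency_residual(observed, R, t, s, canonical))
    print("shifted pose:   ", consistency_residual(observed, R, t + 0.05, s, canonical))
```

Under these assumptions, the residual is near zero when the two predictions agree and grows when the explicit pose drifts, which is the kind of signal a test-time refinement step could minimize without access to CAD models.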

ICCV 2021

SUN RGB-D

YCB-Video
