Dynamic Head: Unifying Object Detection Heads with Attentions

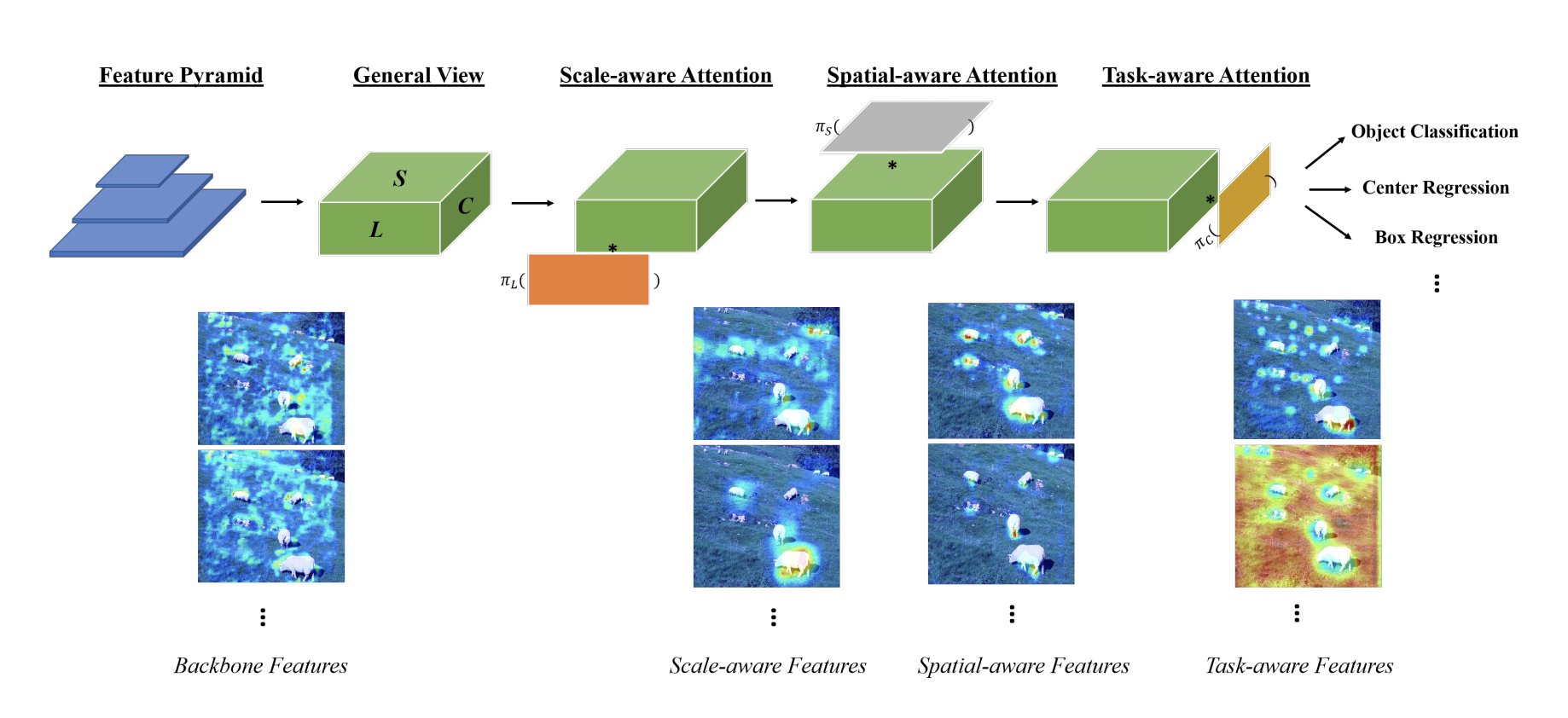

The complex nature of combining localization and classification in object detection has led to a flourishing variety of methods. Previous works tried to improve the performance of various object detection heads but failed to present a unified view. In this paper, we present a novel dynamic head framework to unify object detection heads with attentions. By coherently combining multiple self-attention mechanisms between feature levels for scale-awareness, among spatial locations for spatial-awareness, and within output channels for task-awareness, the proposed approach significantly improves the representation ability of object detection heads without any computational overhead. Further experiments demonstrate the effectiveness and efficiency of the proposed dynamic head on the COCO benchmark. With a standard ResNeXt-101-DCN backbone, we substantially improve the performance over popular object detectors and achieve a new state of the art at 54.0 AP. Furthermore, with the latest transformer backbone and extra data, we can push the current best COCO result to a new record of 60.6 AP. The code will be released at https://github.com/microsoft/DynamicHead.
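To make the three-way decomposition concrete, the following is a minimal sketch, not the authors' implementation. It assumes the feature pyramid has already been resized to a common spatial size and flattened into a nested list of shape levels × positions × channels. The abstract does not specify the form of each attention, so the simple gating functions below (`scale_aware`, `spatial_aware`, `task_aware`, and the helper `dynamic_head_block`) are hypothetical placeholders chosen only to show attention applied sequentially along each of the three dimensions.

```python
import math


def hard_sigmoid(x):
    # Piecewise-linear gate clipped to [0, 1] (an illustrative choice).
    return max(0.0, min(1.0, (x + 1.0) / 2.0))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def scale_aware(feat):
    # One gate per feature level, computed from that level's global average.
    out = []
    for level in feat:
        gate = hard_sigmoid(mean(v for pos in level for v in pos))
        out.append([[gate * v for v in pos] for pos in level])
    return out


def spatial_aware(feat):
    # One gate per spatial position, pooled over levels and channels.
    # Placeholder assumption: a plain sigmoid gate.
    n_pos = len(feat[0])
    gates = [
        sigmoid(mean(v for level in feat for v in level[s]))
        for s in range(n_pos)
    ]
    return [[[gates[s] * v for v in level[s]] for s in range(n_pos)]
            for level in feat]


def task_aware(feat):
    # One gate per channel, pooled over levels and positions.
    n_ch = len(feat[0][0])
    gates = [
        hard_sigmoid(mean(pos[c] for level in feat for pos in level))
        for c in range(n_ch)
    ]
    return [[[gates[c] * pos[c] for c in range(n_ch)] for pos in level]
            for level in feat]


def dynamic_head_block(feat):
    # Apply the three attentions in sequence: scale, then spatial, then task.
    return task_aware(spatial_aware(scale_aware(feat)))


# Toy input: 2 levels, 3 spatial positions, 2 channels.
feat = [
    [[0.5, -1.0], [1.0, 2.0], [0.0, 0.5]],
    [[-3.0, -3.0], [-3.0, -3.0], [-3.0, -3.0]],
]
out = dynamic_head_block(feat)
```

Because each stage only rescales the tensor along one dimension, the output keeps the input shape, and several such blocks could in principle be stacked. In this toy input the second level has a strongly negative average, so its scale gate is zero and the level is suppressed entirely.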

CVPR 2021

Results from the Paper

Ranked #3 on Object Detection on COCO 2017 val (AP75 metric)

MS COCO

COCO-O
