E-LPIPS: Robust Perceptual Image Similarity via Random Transformation Ensembles

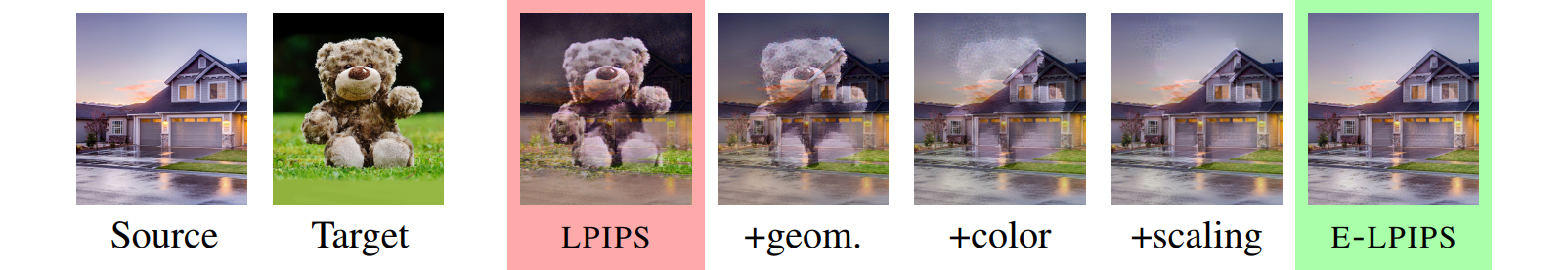

It has recently been shown that the hidden variables of convolutional neural networks make for an efficient perceptual similarity metric that accurately predicts human judgment on relative image similarity assessment. First, we show that such learned perceptual similarity metrics (LPIPS) are susceptible to adversarial attacks that dramatically contradict human visual similarity judgment. While this is not surprising in light of neural networks' well-known vulnerability to adversarial perturbations, we proceed to show that self-ensembling with an infinite family of random transformations of the input (a technique known not to render classification networks robust) is enough to make the metric robust against attack, while retaining predictive power on human judgments. Finally, we study the geometry imposed by our novel self-ensembled metric (E-LPIPS) on the space of natural images. We find evidence of "perceptual convexity" by showing that convex combinations of similar-looking images retain appearance, and that discrete geodesics yield meaningful frame interpolation and texture morphing, all without explicit correspondences.
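The self-ensembling idea can be illustrated with a minimal sketch: draw a random transformation, apply the same transformation to both images, evaluate a base distance, and average over many draws. Everything named here is an assumption made for illustration. The abstract does not specify the transformation family, so random horizontal flips and small cyclic shifts stand in for it, and `base_distance` is a plain mean squared difference standing in for a learned, network-based metric such as LPIPS.

```python
import numpy as np


def random_transform(rng, max_shift=4):
    """Sample one random transformation and return it as a function.

    Hypothetical family (an assumption, not taken from the paper):
    an optional horizontal flip followed by a small cyclic shift.
    """
    flip = rng.random() < 0.5
    dy = int(rng.integers(-max_shift, max_shift + 1))
    dx = int(rng.integers(-max_shift, max_shift + 1))

    def apply(img):
        out = img[:, ::-1] if flip else img
        return np.roll(out, shift=(dy, dx), axis=(0, 1))

    return apply


def base_distance(a, b):
    """Placeholder for a learned perceptual metric (assumption):
    mean squared difference between two H x W x C arrays."""
    return float(np.mean((a - b) ** 2))


def ensembled_distance(x, y, n_samples=32, seed=0):
    """Monte Carlo estimate of the expected base distance under random
    transformations, with the same transformation applied to both inputs."""
    rng = np.random.default_rng(seed)
    total = 0.0
    for _ in range(n_samples):
        t = random_transform(rng)
        total += base_distance(t(x), t(y))
    return total / n_samples
```

With this placeholder the averaging has no effect, because a mean squared difference is unchanged by flips and cyclic shifts. A network-based distance is generally not invariant to such transformations, which is the setting where averaging over random draws would be expected to matter.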


Perceptual Similarity
