Efficient Self-Ensemble for Semantic Segmentation

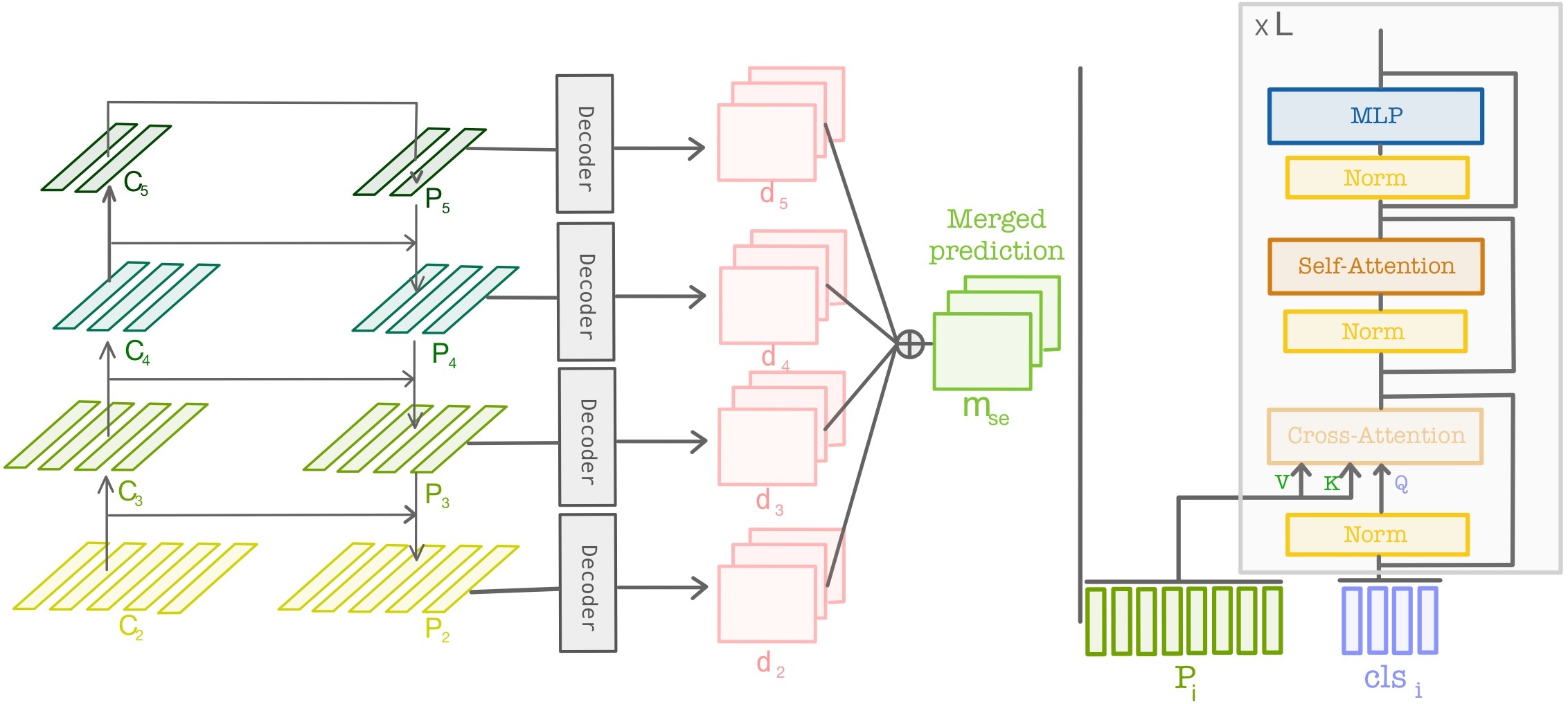

Ensembles of predictions are known to perform better than individual predictions taken separately. However, for computationally heavy tasks such as semantic segmentation, building an ensemble of learners that must each be trained separately is hardly tractable. In this work, we propose to leverage the performance boost offered by ensemble methods to improve semantic segmentation while avoiding the heavy training cost of traditional ensembles. Our self-ensemble approach feeds the multi-scale feature set produced by feature pyramid network methods to independent decoders, thus creating an ensemble within a single model. As in a conventional ensemble, the final prediction is the aggregation of the predictions made by each learner. In contrast to previous works, our model can be trained end-to-end, avoiding the cumbersome multi-stage training traditionally required by ensembles. Our self-ensemble approach outperforms the current state of the art on the benchmark datasets Pascal Context and COCO-Stuff-10K for semantic segmentation and is competitive on ADE20K and Cityscapes. Code is publicly available at github.com/WalBouss/SenFormer.
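
The aggregation step described in the abstract can be illustrated with a minimal sketch. It is not the released SenFormer code. It assumes that each learner's per-pixel class logits have already been resized to a common resolution, and that aggregation is a plain average of per-learner class probabilities; the abstract only says the predictions are aggregated, so the averaging rule is an assumption. The names `softmax` and `aggregate_predictions` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one pixel's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_predictions(per_learner_logits):
    """Average class probabilities over learners, then pick a label per pixel.

    per_learner_logits[k][p] holds the class logits that learner k (one
    decoder fed by one pyramid level) produced for pixel p.
    Assumption: simple mean over learners; the paper may aggregate differently.
    """
    num_learners = len(per_learner_logits)
    num_pixels = len(per_learner_logits[0])
    labels = []
    for p in range(num_pixels):
        probs = [softmax(learner[p]) for learner in per_learner_logits]
        mean = [sum(class_probs) / num_learners for class_probs in zip(*probs)]
        labels.append(max(range(len(mean)), key=mean.__getitem__))
    return labels


# Three learners, two pixels, two classes (illustrative numbers only).
example = [
    [[2.0, 0.0], [0.0, 1.0]],  # learner 0
    [[1.0, 0.0], [0.0, 3.0]],  # learner 1
    [[0.0, 0.5], [1.0, 0.0]],  # learner 2
]
print(aggregate_predictions(example))  # prints [0, 1]
```

In this toy input the third learner disagrees with the other two on both pixels, and the averaged probabilities still follow the majority, which is the behaviour an ensemble is meant to provide.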

arXiv 2021

Cityscapes

ADE20K

PASCAL Context

COCO-Stuff
