Ego2Hands: A Dataset for Egocentric Two-hand Segmentation and Detection

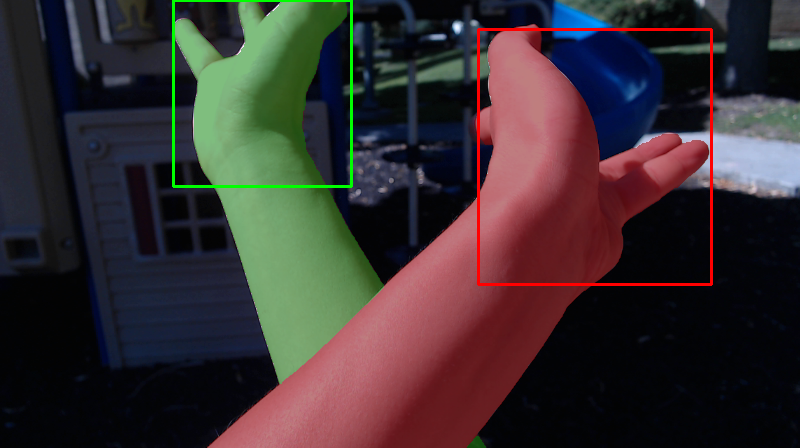

Hand segmentation and detection in truly unconstrained RGB-based settings are important for many applications. However, existing datasets are insufficient in both size and variety because manually annotating large amounts of segmentation and detection data is infeasible. As a result, current methods rely on many underlying assumptions, such as a constrained environment, consistent skin color, and consistent lighting. In this work, we present Ego2Hands, a large-scale, automatically annotated RGB-based dataset for egocentric hand segmentation and detection, together with a color-invariant, compositing-based data generation technique capable of creating an unlimited amount of varied training data. For quantitative analysis, we manually annotated an evaluation set that significantly exceeds existing benchmarks in quantity, diversity, and annotation accuracy. We provide cross-dataset evaluation as well as a thorough analysis of the performance of state-of-the-art models on Ego2Hands to show that our dataset and data generation technique can produce models that generalize to unseen environments without domain adaptation.
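The abstract does not spell out how the compositing step works, so the following is only a minimal sketch of the general idea under stated assumptions. It assumes that each training sample is built by pasting an independently recorded left-hand layer and right-hand layer (each with its own mask) onto a background frame, and that color invariance comes from converting the composite to grayscale so a model cannot rely on skin tone. The function names `to_gray` and `composite_two_hands` are hypothetical and are not the authors' code. Because any left hand, right hand, and background can be combined, the number of distinct samples grows multiplicatively with the size of each pool, which is one way such a pipeline can produce effectively unlimited training data.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an HxWx3 uint8 RGB image to HxW float32 luminance (ITU-R BT.601 weights).

    Assumption: grayscale conversion stands in for the paper's color-invariance step.
    """
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    return rgb.astype(np.float32) @ weights


def composite_two_hands(background, left_img, left_mask, right_img, right_mask):
    """Paste a left-hand layer and a right-hand layer onto a background (hypothetical helper).

    Images are HxWx3 uint8 and masks are HxW bool, all aligned to the same frame.
    Returns a grayscale composite and a label map with
    0 = background, 1 = left hand, 2 = right hand.
    The right hand is pasted last, so it occludes the left hand where masks overlap.
    """
    gray = to_gray(background)
    labels = np.zeros(background.shape[:2], dtype=np.uint8)
    for img, mask, label in ((left_img, left_mask, 1), (right_img, right_mask, 2)):
        # Replace background pixels with hand pixels wherever this hand's mask is set.
        gray = np.where(mask, to_gray(img), gray)
        labels[mask] = label
    return gray, labels


if __name__ == "__main__":
    bg = np.full((4, 4, 3), 100, dtype=np.uint8)    # flat synthetic background
    hand = np.full((4, 4, 3), 50, dtype=np.uint8)   # flat synthetic "hand" texture
    left_mask = np.zeros((4, 4), dtype=bool)
    left_mask[:, :2] = True
    right_mask = np.zeros((4, 4), dtype=bool)
    right_mask[:, 1:3] = True
    gray, labels = composite_two_hands(bg, hand, left_mask, hand, right_mask)
    print(labels[0].tolist())
```

Pasting the right hand after the left is an arbitrary choice made here to resolve overlaps deterministically; a real generator would likely randomize hand placement, scale, and occlusion order, and would typically also apply brightness or contrast augmentation to vary lighting.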

GTEA

EGTEA

EgoHands