EnAET: A Self-Trained framework for Semi-Supervised and Supervised Learning with Ensemble Transformations

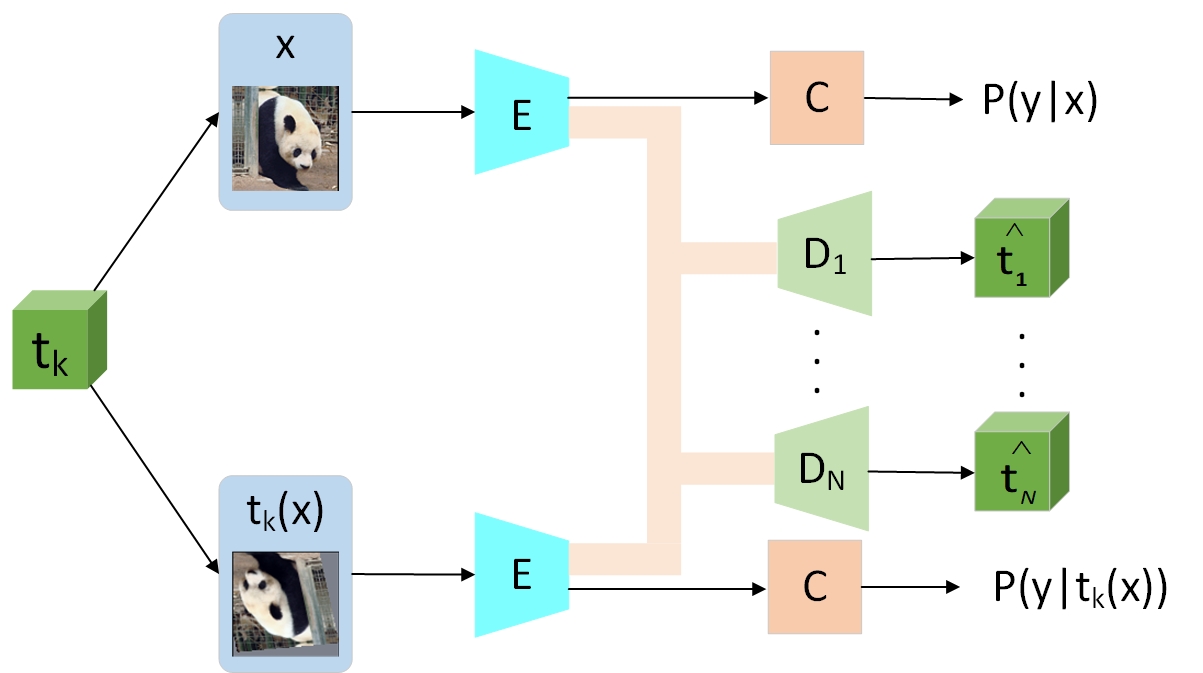

Deep neural networks have been successfully applied to many real-world applications. However, such successes rely heavily on large amounts of labeled data, which are expensive to obtain. Recently, many methods for semi-supervised learning have been proposed and have achieved excellent performance. In this study, we propose a new EnAET framework to further improve existing semi-supervised methods with self-supervised information. To the best of our knowledge, all current semi-supervised methods improve performance through prediction consistency and confidence. We are the first to explore the role of **self-supervised** representations in **semi-supervised** learning under a rich family of transformations. Consequently, our framework can integrate the self-supervised information as a regularization term to further improve *all* current semi-supervised methods. In the experiments, we use MixMatch, the current state-of-the-art method for semi-supervised learning, as a baseline to test the proposed EnAET framework. We adopt the same hyper-parameters across different datasets, which greatly improves the generalization ability of the EnAET framework. Experimental results on different datasets demonstrate that the proposed EnAET framework greatly improves the performance of current semi-supervised algorithms. Moreover, the framework can also improve **supervised learning** by a large margin, including in the extremely challenging scenario with only 10 images per class. The code and experiment records are available at https://github.com/maple-research-lab/EnAET.
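The abstract does not spell out the form of the regularization term, so the following is only a minimal sketch of the general idea: a base semi-supervised loss plus a weighted self-supervised penalty, one term per transformation family. It assumes the self-supervised signal is the error in predicting the parameters of an applied transformation. The names `mse` and `regularized_loss`, and the per-family weights, are hypothetical and not taken from the paper or its repository.

```python
from typing import Sequence, Tuple

Vector = Sequence[float]


def mse(pred: Vector, target: Vector) -> float:
    """Mean squared error between two equal-length, non-empty vectors."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and equal in length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def regularized_loss(
    semi_loss: float,
    transform_preds: Sequence[Tuple[Vector, Vector]],
    weights: Sequence[float],
) -> float:
    """Add a weighted self-supervised penalty to a semi-supervised loss.

    semi_loss:        loss from the base method (assumed already computed,
                      e.g. by a MixMatch-style baseline).
    transform_preds:  one (predicted_params, true_params) pair per
                      transformation family (hypothetical interface).
    weights:          one non-negative weight per family (hypothetical).
    """
    if len(transform_preds) != len(weights):
        raise ValueError("need exactly one weight per transformation family")
    # Each family contributes its own transformation-prediction error.
    penalty = sum(w * mse(p, t) for w, (p, t) in zip(weights, transform_preds))
    return semi_loss + penalty


if __name__ == "__main__":
    # Two illustrative families with made-up parameter vectors.
    families = [([0.0, 0.0], [1.0, 1.0]), ([0.5], [0.5])]
    print(regularized_loss(1.0, families, weights=[0.5, 1.0]))
```

Because the penalty is purely additive, setting every weight to zero recovers the base loss unchanged, which matches the abstract's framing of the self-supervised term as a regularizer on top of an existing method.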


CIFAR-10

CIFAR-100

SVHN

STL-10
