End-to-end One-shot Human Parsing

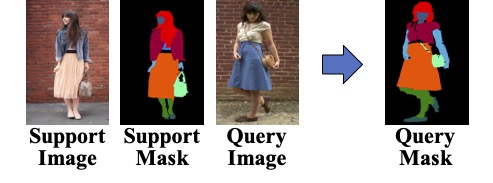

Previous human parsing methods are limited to parsing humans into pre-defined classes, which is inflexible for practical fashion applications, where new fashion item classes often appear. In this paper, we define a novel one-shot human parsing (OSHP) task that requires parsing humans into an open set of classes defined by any test example. During training, only base classes are exposed, and these overlap with only part of the test-time classes. To address three main challenges in OSHP, i.e., small sizes, testing bias, and similar parts, we devise an End-to-end One-shot human Parsing Network (EOP-Net). First, we propose an end-to-end human parsing framework that parses the query image into both coarse-grained and fine-grained human classes; it embeds rich semantic information shared across granularities, which helps identify small-sized human classes. Then, we gradually smooth the training-time static prototypes to obtain robust class representations. Moreover, we employ a dynamic objective that encourages the network to enhance the representational capability of its features in the early training phase and to improve their transferability in the late training phase. As a result, our method can quickly adapt to novel classes and mitigate the testing bias issue. In addition, we add a prototype-level contrastive loss that enforces larger inter-class distances, thereby helping to discriminate similar parts. For a comprehensive evaluation of the new task, we tailor three popular existing human parsing benchmarks to OSHP. Experiments demonstrate that EOP-Net outperforms representative one-shot segmentation models by large margins and serves as a strong baseline for further research. The source code is available at https://github.com/Charleshhy/One-shot-Human-Parsing.
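The prototype-based ideas in the abstract (class prototypes, gradual smoothing of training-time static prototypes, and a prototype-level contrastive loss) can be illustrated with a small plain-Python sketch. This is not EOP-Net's implementation and may differ from the paper's formulation. It assumes that prototypes come from masked average pooling, that "gradual smoothing" is an exponential moving average with a hypothetical `momentum`, that the contrastive term is an InfoNCE-style loss with a hypothetical `temperature`, and that query pixels are labeled by their nearest support prototype under cosine similarity. All function names are hypothetical. The dynamic objective and the coarse/fine-grained framework are not modeled.

```python
import math
from typing import Dict, List

# Hypothetical types: a feature vector per pixel, and a prototype per class id.
Vector = List[float]
Prototypes = Dict[int, Vector]


def masked_average_pooling(features: List[Vector], labels: List[int]) -> Prototypes:
    """Average the feature vectors of all pixels that carry each class label (assumed prototype definition)."""
    sums: Dict[int, Vector] = {}
    counts: Dict[int, int] = {}
    for feat, lab in zip(features, labels):
        if lab not in sums:
            sums[lab] = [0.0] * len(feat)
            counts[lab] = 0
        sums[lab] = [s + f for s, f in zip(sums[lab], feat)]
        counts[lab] += 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


def ema_update(static: Prototypes, batch: Prototypes, momentum: float = 0.9) -> Prototypes:
    """Assumed smoothing rule: move each stored prototype a small step towards the batch prototype."""
    updated = {c: list(p) for c, p in static.items()}
    for c, proto in batch.items():
        if c in static:
            updated[c] = [momentum * s + (1.0 - momentum) * p for s, p in zip(static[c], proto)]
        else:
            updated[c] = list(proto)  # first time this class is seen
    return updated


def cosine(a: Vector, b: Vector, eps: float = 1e-8) -> float:
    """Cosine similarity; eps guards against division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + eps)


def prototype_contrastive_loss(batch: Prototypes, static: Prototypes, temperature: float = 0.1) -> float:
    """Assumed InfoNCE form: positive = same-class stored prototype, negatives = other classes."""
    classes = sorted(static)
    losses = []
    for c, proto in batch.items():
        if c not in static:
            continue
        logits = [cosine(proto, static[k]) / temperature for k in classes]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        losses.append(log_sum_exp - cosine(proto, static[c]) / temperature)
    return sum(losses) / len(losses) if losses else 0.0


def parse_query(query_features: List[Vector], support_prototypes: Prototypes) -> List[int]:
    """Label each query pixel with the class of its most similar support prototype."""
    return [max(support_prototypes, key=lambda c: cosine(f, support_prototypes[c]))
            for f in query_features]


# Toy usage: four 2-D pixel features, two classes.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [0, 0, 1, 1]
batch = masked_average_pooling(features, labels)            # about {0: [0.95, 0.05], 1: [0.05, 0.95]}
static = ema_update({0: [1.0, 0.0], 1: [0.0, 1.0]}, batch)  # smoothed stored prototypes
loss = prototype_contrastive_loss(batch, static)            # small, since classes are well separated
print(parse_query([[0.8, 0.2], [0.2, 0.8]], batch))         # [0, 1]
```

The moving average keeps the stored prototypes from jumping with each batch, and the contrastive term is small when each batch prototype is closest to its own class's stored prototype. If the stored prototypes are swapped between classes, the loss becomes large.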

LIP