Entity Linking in 100 Languages

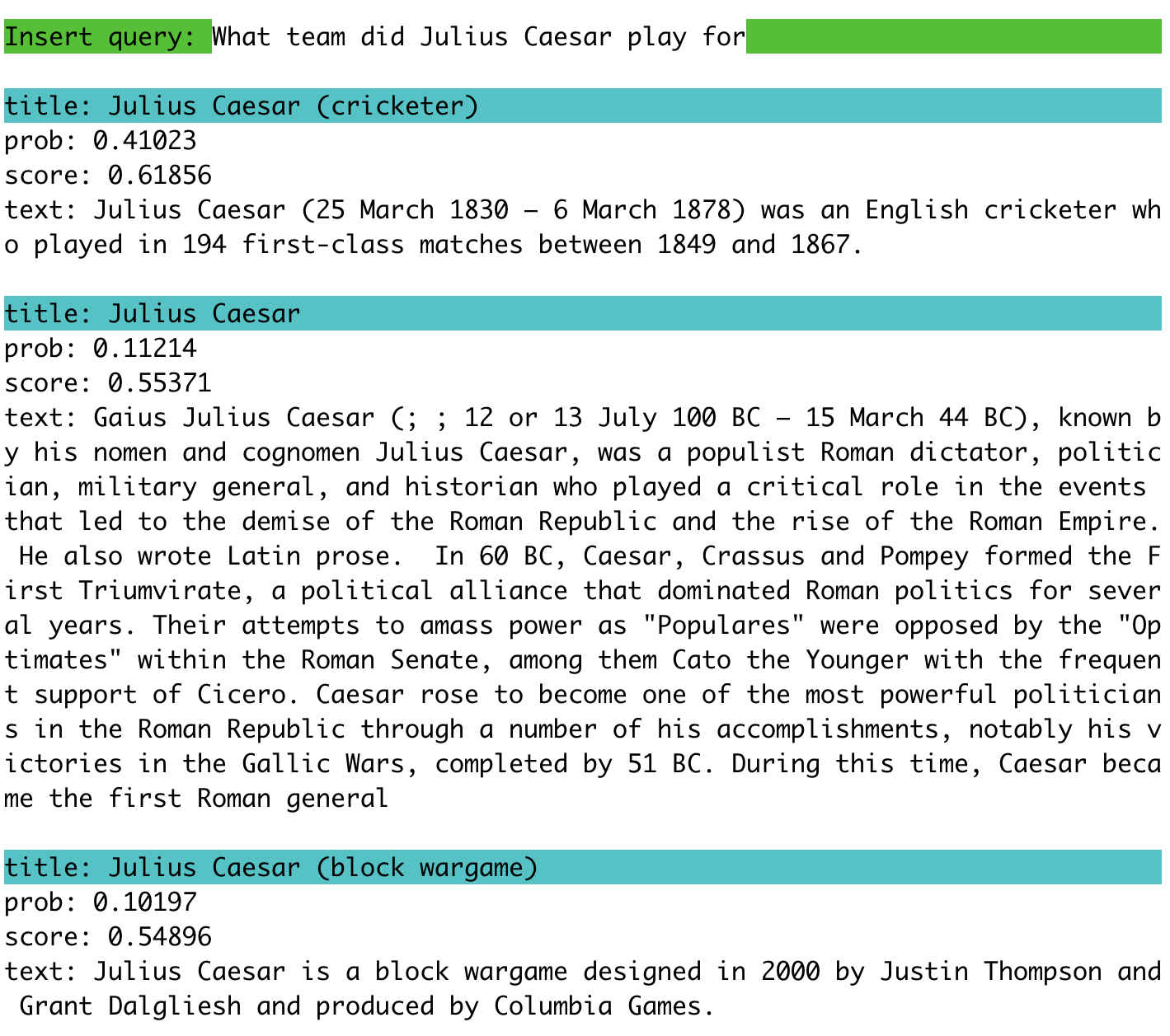

We propose a new formulation for multilingual entity linking, where language-specific mentions resolve to a language-agnostic Knowledge Base. We train a dual encoder in this new setting, building on prior work with improved feature representation, negative mining, and an auxiliary entity-pairing task, to obtain a single entity retrieval model that covers 100+ languages and 20 million entities. The model outperforms state-of-the-art results from a far more limited cross-lingual linking task. Rare entities and low-resource languages pose challenges at this large scale, so we advocate for an increased focus on zero- and few-shot evaluation. To this end, we provide Mewsli-9, a large new multilingual dataset (http://goo.gle/mewsli-dataset) matched to our setting, and show how frequency-based analysis provided key insights for our model and training enhancements.
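To make the retrieval setting concrete, here is a minimal sketch of how a dual encoder is typically used at inference time: mentions and entities are embedded into a shared vector space, and the entity whose vector scores highest against the mention vector is returned. This is not the paper's model. The vectors, the entity IDs (`E1`, `E2`, `E3`), and the helper names `cosine` and `retrieve` are all hypothetical, and cosine similarity is assumed as the scoring function.

```python
import math

# Hypothetical, hand-written entity embeddings. In a real dual encoder these
# would come from a trained entity encoder; the IDs are placeholders.
ENTITY_VECS = {
    "E1": [0.9, 0.1, 0.0],
    "E2": [0.1, 0.9, 0.0],
    "E3": [0.0, 0.1, 0.9],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def retrieve(mention_vec, entity_vecs, k=1):
    """Return the IDs of the k entities most similar to the mention vector."""
    ranked = sorted(
        entity_vecs.items(),
        key=lambda item: cosine(mention_vec, item[1]),
        reverse=True,
    )
    return [entity_id for entity_id, _ in ranked[:k]]


# A mention in any language is assumed to be mapped by the mention encoder
# into the same space, so a single entity index serves every language.
mention_vec = [0.8, 0.2, 0.0]  # hypothetical mention embedding
top_entities = retrieve(mention_vec, ENTITY_VECS, k=2)
```

Because the entity vectors do not depend on the mention's language, they can be computed once and shared across all languages. At the scale of millions of entities, the exhaustive sort in this sketch would normally be replaced by an approximate nearest-neighbor index.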

EMNLP 2020

Datasets

Introduced in the Paper:

Mewsli-9

Results from the Paper

Ranked #1 on Entity Disambiguation on Mewsli-9 (using extra training data)

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Uses Extra Training Data | Benchmark |
|---|---|---|---|---|---|---|---|
| Entity Disambiguation | Mewsli-9 | Model F+ | Micro Precision | 89.0 | # 1 | | |
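The table does not define "Micro Precision". One common reading, assumed here, is that correct predictions are pooled over all mentions before dividing, so that languages with more mentions weigh more. The sketch below contrasts this with a macro average over languages. The language codes and counts are hypothetical and are not taken from Mewsli-9.

```python
def micro_precision(per_language):
    """Pool (correct, total) counts over all languages, then divide once."""
    correct = sum(c for c, _ in per_language.values())
    total = sum(t for _, t in per_language.values())
    return correct / total if total else 0.0


def macro_precision(per_language):
    """Average the per-language scores, weighting each language equally."""
    scores = [c / t for c, t in per_language.values() if t]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical counts: language code -> (correct predictions, total mentions).
counts = {"xx": (90, 100), "yy": (5, 10)}

micro = micro_precision(counts)  # (90 + 5) / (100 + 10)
macro = macro_precision(counts)  # (0.9 + 0.5) / 2
```

With these counts the micro average is dominated by the larger language, while the macro average exposes the weaker score on the smaller one. Reporting both is one way to keep low-resource languages visible.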