Evaluating natural language processing models with generalization metrics that do not need access to any training or testing data

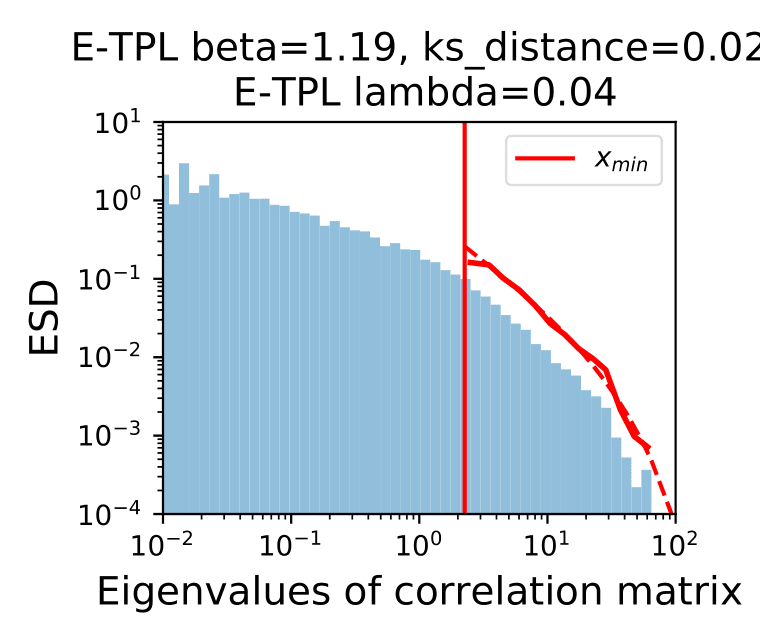

Selecting suitable architecture parameters and training hyperparameters is essential for enhancing machine learning (ML) model performance. Several recent empirical studies conduct large-scale correlational analysis on neural networks (NNs) to search for effective *generalization metrics* that can guide this type of model selection. Effective metrics are typically expected to correlate strongly with test performance. In this paper, we expand on prior analyses by examining generalization-metric-based model selection with the following objectives: (i) focusing on natural language processing (NLP) tasks, as prior work primarily concentrates on computer vision (CV) tasks; (ii) considering metrics that directly predict *test error* instead of the *generalization gap*; (iii) exploring metrics that can be computed without access to data. With these objectives, we provide the first model selection results on large pretrained Transformers from Huggingface using generalization metrics. Our analyses consider (I) hundreds of Transformers trained in different settings, in which we systematically vary the amount of data, the model size, and the optimization hyperparameters, (II) a total of 51 pretrained Transformers from eight families of Huggingface NLP models, including GPT2 and BERT, and (III) a total of 28 existing and novel generalization metrics. Despite their niche status, we find that metrics derived from the heavy-tail (HT) perspective are particularly useful in NLP tasks, exhibiting stronger correlations than other, more popular metrics. To further examine these metrics, we extend prior formulations relying on power law (PL) spectral distributions to exponential (EXP) and exponentially-truncated power law (E-TPL) families.
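
To make the idea of a data-free, heavy-tail metric concrete, the following is a minimal sketch of how a power law (PL) exponent might be estimated from the eigenvalue spectrum of a single weight matrix, using only the weights and no training or test data. It is an illustration under stated assumptions, not the paper's procedure: the function names `esd` and `hill_alpha` and the `tail_fraction` parameter are hypothetical, the fit uses a simple Hill-style maximum-likelihood estimator with a fixed tail cutoff, and the EXP and E-TPL families mentioned above are not covered.

```python
import numpy as np


def esd(weight):
    """Eigenvalues of W^T W for a 2-D weight matrix, sorted ascending.

    These are the squared singular values of W.
    """
    singular_values = np.linalg.svd(weight, compute_uv=False)
    return np.sort(singular_values ** 2)


def hill_alpha(eigenvalues, tail_fraction=0.5):
    """Hill-style estimate of alpha for a density p(x) ~ x^(-alpha).

    Assumption: the upper `tail_fraction` of the eigenvalues is treated as
    the power-law tail, with x_min set to the smallest eigenvalue kept.
    """
    eigs = np.sort(np.asarray(eigenvalues, dtype=float))
    k = max(2, int(len(eigs) * tail_fraction))
    tail = eigs[-k:]
    x_min = tail[0]
    return 1.0 + k / np.sum(np.log(tail / x_min))


if __name__ == "__main__":
    # Stand-in for a trained layer: a random Gaussian matrix (hypothetical data).
    rng = np.random.default_rng(0)
    weight = rng.standard_normal((256, 128))
    print("estimated alpha:", hill_alpha(esd(weight)))
```

In this sketch a smaller estimated exponent indicates a heavier tail in the spectrum. Applying it to a real model would mean looping over each layer's weight matrix and aggregating the per-layer estimates; how to choose the tail cutoff and the aggregation is left open here.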

MultiNLI

WikiText-2

WikiText-103

WMT 2014
