ExKMC: Expanding Explainable $k$-Means Clustering

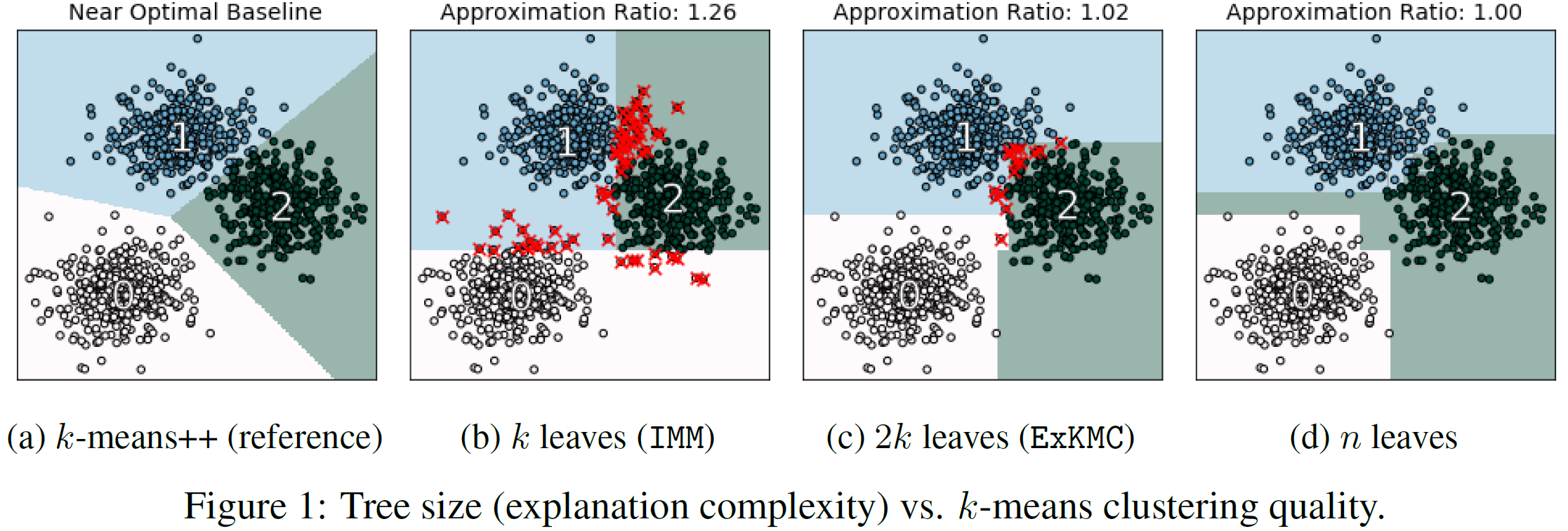

Despite the popularity of explainable AI, there is limited work on effective methods for unsupervised learning. We study algorithms for $k$-means clustering, focusing on a trade-off between explainability and accuracy. Following prior work, we use a small decision tree to partition a dataset into $k$ clusters. This enables us to explain each cluster assignment by a short sequence of single-feature thresholds. While larger trees produce more accurate clusterings, they also require more complex explanations. To allow flexibility, we develop a new explainable $k$-means clustering algorithm, ExKMC, that takes an additional parameter $k' \geq k$ and outputs a decision tree with $k'$ leaves. We use a new surrogate cost to efficiently expand the tree and to label the leaves with one of $k$ clusters. We prove that as $k'$ increases, the surrogate cost is non-increasing, and hence, we trade explainability for accuracy. Empirically, we validate that ExKMC produces a low cost clustering, outperforming both standard decision tree methods and other algorithms for explainable clustering. Implementation of ExKMC available at https://github.com/navefr/ExKMC.

PDF Abstract

CIFAR-10

CIFAR-10