Explainability Techniques for Graph Convolutional Networks

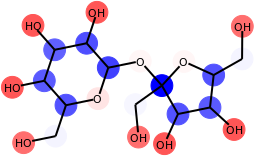

Graph Networks are used to make decisions in potentially complex scenarios, but it is usually not obvious how or why they arrive at those decisions. In this work, we study the explainability of Graph Network decisions using two main classes of techniques, gradient-based and decomposition-based, on a toy dataset and a chemistry task. Our study lays the groundwork for future development as well as application to real-world problems.
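To make the gradient-based class of techniques concrete, the following is a minimal sketch and not the paper's implementation. It assumes a hypothetical one-layer graph convolution with symmetric adjacency normalization, a ReLU, and a sum readout; the graph, weights, and function names are all illustrative. It scores each node by the norm of the gradient of the output with respect to that node's input features. Decomposition-based techniques are not shown.

```python
import numpy as np

def normalize_adjacency(adj):
    """Symmetric normalization with self-loops: D^{-1/2} (A + I) D^{-1/2}."""
    a = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def forward(a_hat, x, w, w_out):
    """Toy model (an assumption): y = sum_i relu(A_hat X W)[i] . w_out."""
    z = a_hat @ x @ w            # pre-activations, shape (nodes, hidden)
    h = np.maximum(z, 0.0)       # ReLU
    y = float((h @ w_out).sum()) # scalar graph-level output
    return y, z

def input_gradient(a_hat, x, w, w_out):
    """Analytic gradient dy/dX, obtained by backpropagating through the toy model."""
    _, z = forward(a_hat, x, w, w_out)
    dh = np.tile(w_out, (x.shape[0], 1))  # dy/dh[i, j] = w_out[j]
    dz = dh * (z > 0)                     # ReLU passes gradient where z > 0
    return a_hat.T @ dz @ w.T             # shape (nodes, features)

def node_saliency(grad):
    """One relevance score per node: L2 norm of its feature gradient."""
    return np.linalg.norm(grad, axis=1)

# Illustrative data: a 4-node path graph with random features and weights.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, 2))      # node features
w = rng.normal(size=(2, 3))      # layer weights
w_out = rng.normal(size=3)       # readout weights

a_hat = normalize_adjacency(adj)
grad = input_gradient(a_hat, x, w, w_out)
saliency = node_saliency(grad)
print(saliency)
```

In practice an automatic differentiation framework would compute the gradient; the hand-written backward pass is used here only to keep the sketch dependency-free and easy to check against finite differences.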
