Exploring DeshuffleGANs in Self-Supervised Generative Adversarial Networks

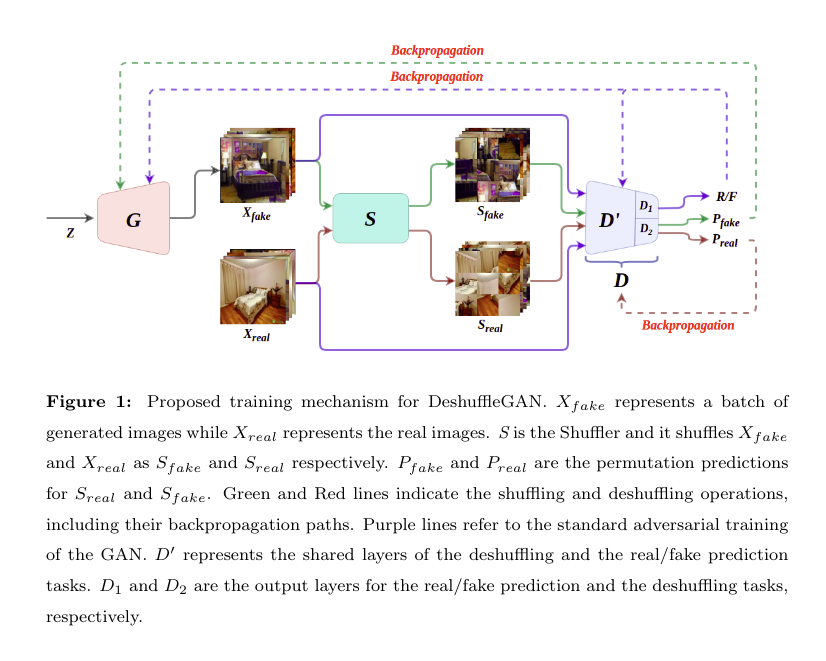

Generative Adversarial Networks (GANs) have become the most widely used networks for image generation. Self-supervised GANs were later proposed to avoid catastrophic forgetting in the discriminator and to improve image generation quality without requiring class labels. However, the generalizability of self-supervision tasks across different GAN architectures has not been studied before. To that end, we extensively analyze the contribution of a previously proposed self-supervision task, the deshuffling task of DeshuffleGANs, in terms of its generalizability. We assign the deshuffling task to two different GAN discriminators and study the effects of the task on both architectures. We extend the evaluations beyond those of the previously proposed DeshuffleGANs to various datasets. We show that the DeshuffleGAN obtains the best FID results on several datasets compared to the other self-supervised GANs. Furthermore, we compare deshuffling with rotation prediction, the first self-supervision task deployed in GAN training, and demonstrate that its contribution exceeds that of rotation prediction. We design a conditional DeshuffleGAN, called cDeshuffleGAN, to evaluate the quality of the learnt representations. Lastly, we show the contribution of the self-supervision tasks to GAN training on the loss landscape and show that the effects of these tasks may not be cooperative with the adversarial training in some settings. Our code can be found at https://github.com/gulcinbaykal/DeshuffleGAN.
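The abstract does not spell out how the deshuffling task is set up, so the following is only a minimal sketch under a stated assumption: that deshuffling is a jigsaw-style task in which an image is cut into a grid of tiles, the tiles are permuted, and the discriminator is asked to recognize the permutation. The function names, the 3x3 grid, and the toy 6x6 "image" are hypothetical choices for illustration and are not taken from the authors' code.

```python
import random


def split_into_tiles(image, grid):
    """Split a square image (list of rows) into grid*grid tiles, row-major."""
    size = len(image)
    if size % grid != 0 or any(len(row) != size for row in image):
        raise ValueError("image must be square with side divisible by grid")
    t = size // grid  # tile side length
    tiles = []
    for gy in range(grid):
        for gx in range(grid):
            rows = image[gy * t:(gy + 1) * t]
            tiles.append([row[gx * t:(gx + 1) * t] for row in rows])
    return tiles


def merge_tiles(tiles, grid):
    """Inverse of split_into_tiles: reassemble row-major tiles into an image."""
    t = len(tiles[0])
    image = []
    for gy in range(grid):
        for r in range(t):
            row = []
            for gx in range(grid):
                row.extend(tiles[gy * grid + gx][r])
            image.append(row)
    return image


def shuffle_image(image, perm, grid):
    """Build a shuffled image whose tile at position i is original tile perm[i]."""
    tiles = split_into_tiles(image, grid)
    return merge_tiles([tiles[p] for p in perm], grid)


def deshuffle_image(shuffled, perm, grid):
    """Undo shuffle_image when the permutation is known."""
    tiles = split_into_tiles(shuffled, grid)
    restored = [None] * len(tiles)
    for i, p in enumerate(perm):
        restored[p] = tiles[i]  # tile at position i came from position p
    return merge_tiles(restored, grid)


# Toy example: a 6x6 "image" of distinct integers, cut into a 3x3 grid (assumed).
GRID = 3
image = [[r * 6 + c for c in range(6)] for r in range(6)]
rng = random.Random(0)
perm = list(range(GRID * GRID))
rng.shuffle(perm)

shuffled = shuffle_image(image, perm, GRID)
restored = deshuffle_image(shuffled, perm, GRID)
```

In a self-supervised GAN of this kind, the permutation would serve as the label for an auxiliary prediction head on the discriminator, trained alongside the usual real/fake output; that part is omitted here because the abstract gives no architectural details.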

CelebA-HQ
