Extreme Relative Pose Network under Hybrid Representations

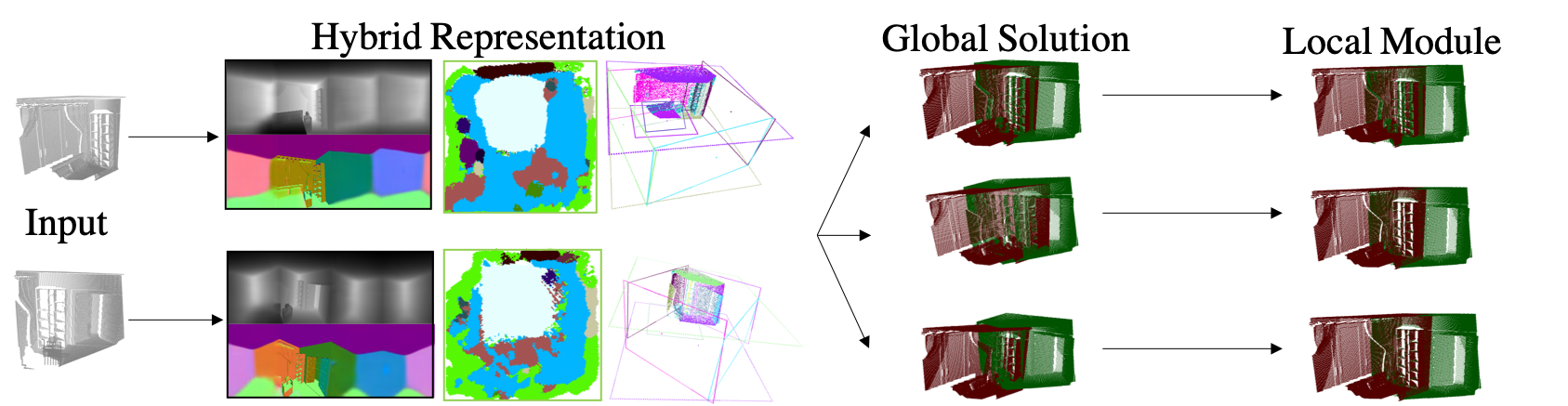

In this paper, we introduce a novel RGB-D based relative pose estimation approach that is suitable for small-overlapping or non-overlapping scans and can output multiple relative poses. Our method performs scene completion and matches the completed scans. However, instead of using a fixed representation for completion, the key idea is to utilize hybrid representations that combine 360-image, 2D image-based layout, and planar patches. This approach offers adaptive feature representations for relative pose estimation. In addition, we introduce a global-2-local matching procedure, which utilizes initial relative poses obtained during the global phase to detect and then integrate geometric relations for pose refinement. Experimental results justify the potential of this approach across a wide range of benchmark datasets. For example, on ScanNet, the rotation/translation errors of the top-1 and top-5 predictions of our approach are 28.6°/0.90m and 16.8°/0.76m, respectively. Our approach also considerably boosts the performance of multi-scan reconstruction in few-view reconstruction settings.
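To make the reported rotation/translation errors and the top-1/top-5 distinction concrete, the following is a minimal sketch of how such errors could be computed for a ranked list of candidate relative poses. The abstract does not specify the evaluation protocol, so everything here is an assumption: the function names (`rotation_error_deg`, `translation_error`, `top_k_errors`, `rot_z`) are hypothetical, the rotation error is taken to be the geodesic angle between rotation matrices, the translation error is taken to be the Euclidean distance, and the top-k result is taken from the candidate with the smallest rotation error among the first k.

```python
import math


def rotation_error_deg(R_pred, R_gt):
    """Geodesic angle, in degrees, between two 3x3 rotation matrices."""
    # trace(R_pred^T R_gt) equals the element-wise inner product of the two matrices.
    trace = sum(R_pred[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against round-off pushing the cosine outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def translation_error(t_pred, t_gt):
    """Euclidean distance between two translation vectors (same units as the input)."""
    return math.dist(t_pred, t_gt)


def top_k_errors(predictions, R_gt, t_gt, k):
    """Errors of the best candidate among the first k ranked predictions.

    `predictions` is a list of (R, t) pairs ordered best-first by the model.
    Assumption: "best" means smallest rotation error; other protocols are possible.
    """
    scored = [
        (rotation_error_deg(R, R_gt), translation_error(t, t_gt))
        for R, t in predictions[:k]
    ]
    return min(scored, key=lambda errors: errors[0])


def rot_z(deg):
    """Helper for the example: rotation about the z-axis by `deg` degrees."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


if __name__ == "__main__":
    # Two hypothetical candidates: the top-ranked one is far off, the second is close.
    candidates = [(rot_z(90.0), [1.0, 0.0, 0.0]), (rot_z(10.0), [0.0, 0.0, 0.5])]
    R_gt, t_gt = rot_z(0.0), [0.0, 0.0, 0.0]
    print("top-1:", top_k_errors(candidates, R_gt, t_gt, k=1))
    print("top-5:", top_k_errors(candidates, R_gt, t_gt, k=5))
```

Under this reading, a method that outputs several candidate poses can only improve as k grows, which is consistent with the top-5 errors quoted above being lower than the top-1 errors.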

CVPR 2020

ScanNet

SUNCG