Facing the Void: Overcoming Missing Data in Multi-View Imagery

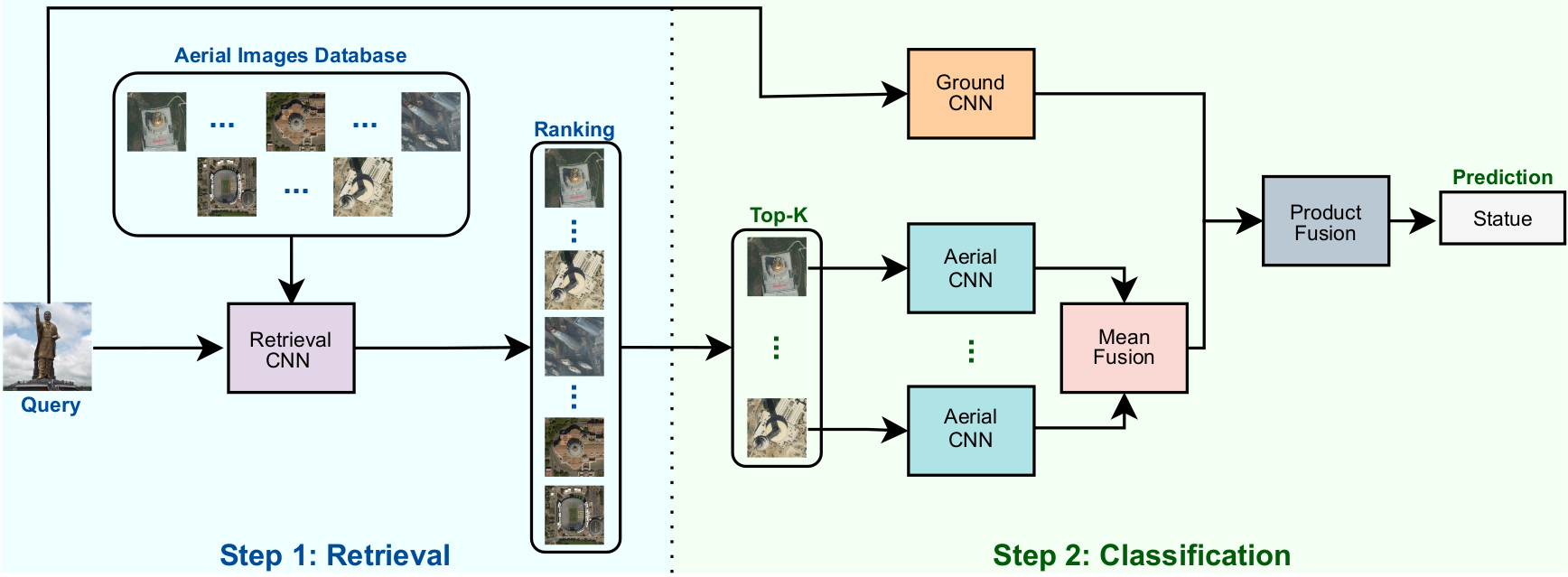

In some scenarios, a single input image is not enough to classify an object. In those cases, it is crucial to exploit the complementary information in images that show the same object from multiple perspectives (or views), in order to improve scene understanding and, consequently, classification performance. However, this task, commonly called multi-view image classification, faces a major challenge: missing data. In this paper, we propose a novel technique for multi-view image classification that is robust to this problem. The proposed method, based on state-of-the-art deep learning approaches and metric learning, can be easily adapted to and exploited in other applications and domains. We conducted a systematic evaluation of the proposed algorithm using two multi-view aerial-ground datasets with very distinct properties. Results show that the proposed algorithm improves multi-view image classification accuracy compared to state-of-the-art methods. Code is available at https://github.com/Gabriellm2003/remote_sensing_missing_data.
