Fast and Robust Dynamic Hand Gesture Recognition via Key Frames Extraction and Feature Fusion

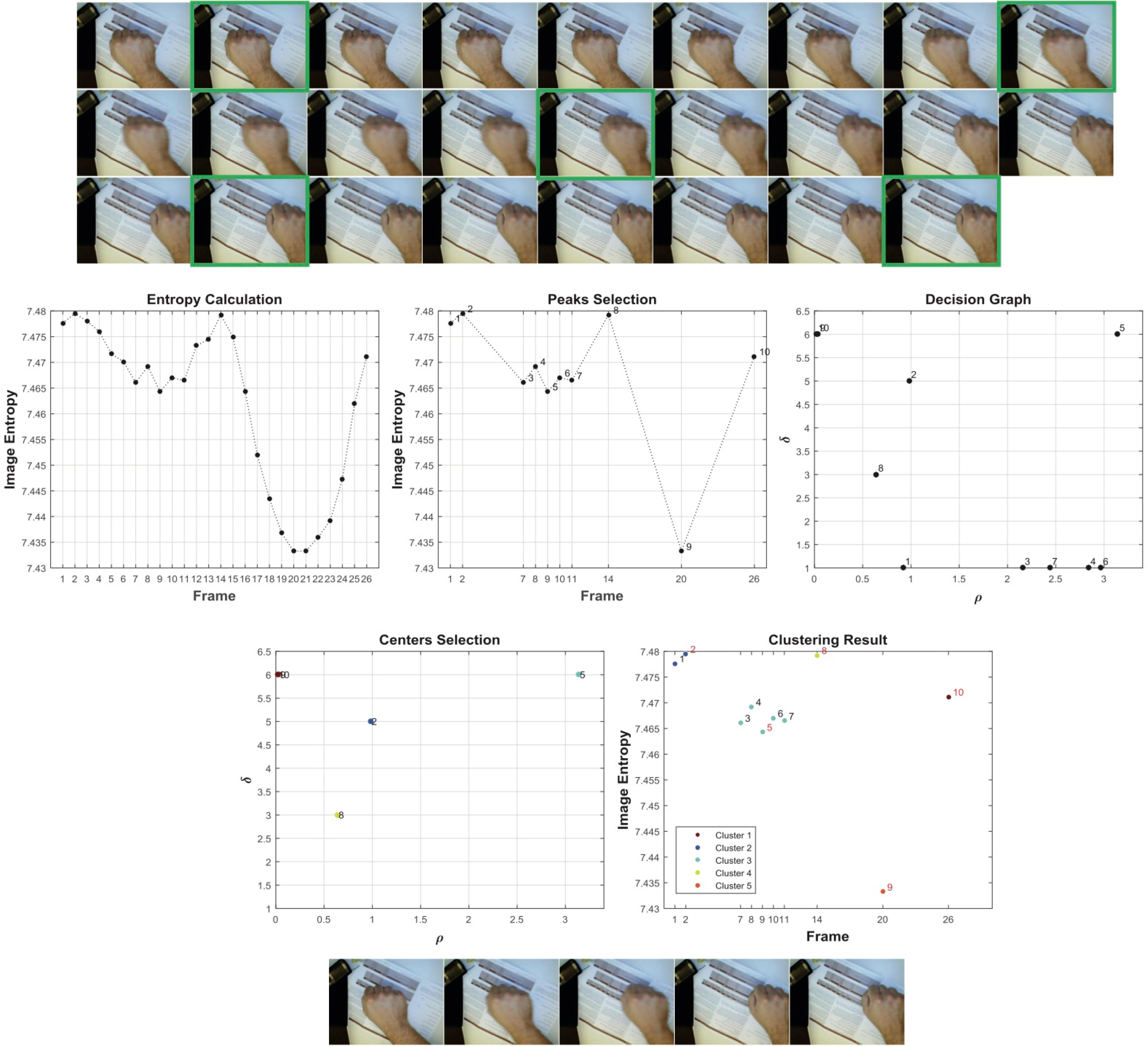

Gesture recognition is an active topic in computer vision and pattern recognition, and it plays a vital role in natural human-computer interfaces. Although great progress has been made recently, fast and robust hand gesture recognition remains an open problem, since existing methods do not balance recognition performance and efficiency well. To bridge this gap, this work combines image entropy and density clustering to extract key frames from hand gesture videos for subsequent feature extraction, which improves the efficiency of recognition. Moreover, a feature fusion strategy is proposed to further improve the feature representation, which raises recognition performance. To validate our approach in a "wild" environment, we also introduce two new datasets, HandGesture and Action3D. Experiments consistently demonstrate that our strategy achieves competitive results on the Northwestern University, Cambridge, HandGesture and Action3D hand gesture datasets. Our code and datasets will be released at https://github.com/Ha0Tang/HandGestureRecognition.
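
To make the image-entropy step concrete, the following is a minimal sketch of how a per-frame entropy curve could be computed. It is not the authors' released code: the function names `image_entropy` and `entropy_curve` are hypothetical, frames are assumed to be grayscale images given as 2D lists of integer intensities, and the entropy is assumed to be the standard Shannon entropy of the intensity histogram. The density clustering step that selects key frames from this curve is not shown.

```python
import math
from collections import Counter


def image_entropy(frame):
    """Shannon entropy (in bits) of a grayscale frame's intensity histogram.

    `frame` is assumed to be a 2D list of integer pixel intensities.
    """
    pixels = [p for row in frame for p in row]
    total = len(pixels)
    counts = Counter(pixels)
    # H = -sum_k p_k * log2(p_k), where p_k is the fraction of pixels with intensity k
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def entropy_curve(frames):
    """Entropy of every frame in a video, in temporal order."""
    return [image_entropy(f) for f in frames]
```

A uniform frame yields zero entropy, while a frame split evenly between two intensities yields one bit, so frames whose content changes noticeably tend to stand out along the curve. How candidate frames are then grouped and chosen is left to the clustering stage described in the paper.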

CamGes