FC$^2$N: Fully Channel-Concatenated Network for Single Image Super-Resolution

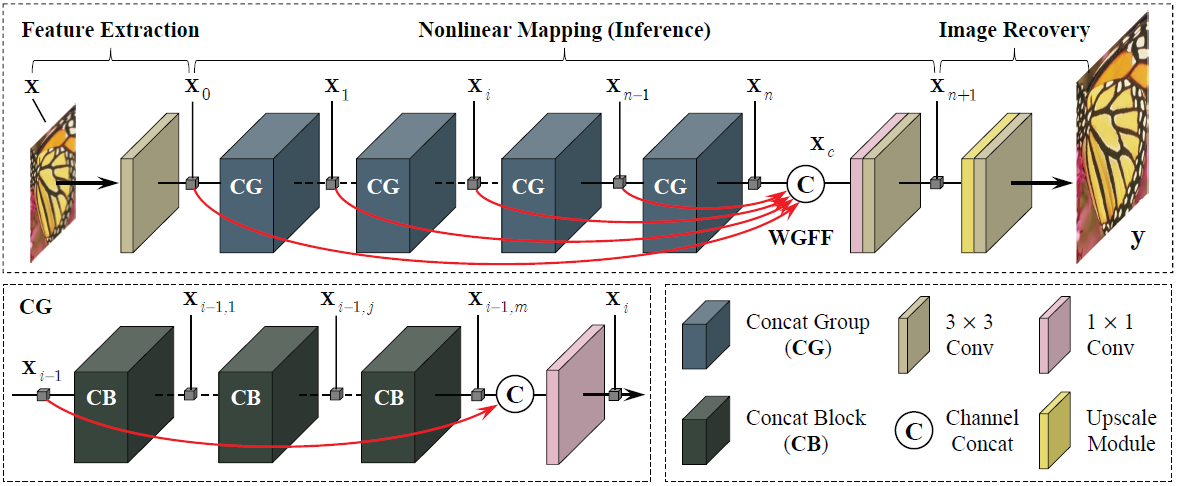

Most current image super-resolution (SR) methods based on convolutional neural networks (CNNs) use residual learning in their network design, which facilitates effective backpropagation and hence allows SR performance to improve as model scale increases. However, residual networks suffer from representational redundancy, because the identity paths they introduce impede the full exploitation of model capacity. In addition, blindly enlarging the network can cause further problems in training, even with residual learning. In this paper, a novel fully channel-concatenated network (FC$^2$N) is presented to further exploit the representational capacity of deep models. In FC$^2$N, all interlayer skips are implemented by a simple and straightforward operation, i.e., weighted channel concatenation (WCC) followed by a 1$\times$1 conv layer. Based on WCC, the model achieves a joint attention mechanism over linear and nonlinear features in the network, and it outperforms other state-of-the-art SR models with fewer parameters. To the best of our knowledge, FC$^2$N is the first CNN model that does not use residual learning yet reaches a depth of over 400 layers. Moreover, it shows excellent performance in both large-scale and lightweight implementations, which illustrates that the representational capacity of the model is fully exploited.
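The abstract describes WCC only at a high level: each skip is formed by weighting feature maps, concatenating them along the channel axis, and fusing the result with a 1$\times$1 convolution, rather than adding them as in residual learning. The pure-Python sketch below illustrates one possible reading of that operation. It assumes each incoming feature map is scaled by a single scalar weight before concatenation and treats the skip input as the "linear" path and the block output as the "nonlinear" path; the function names `weighted_channel_concat`, `conv1x1`, and `wcc_skip`, the weights `alpha`/`beta`, and the nested-list tensor layout are all hypothetical and are not taken from the paper.

```python
from typing import List

# Hypothetical layout: a feature map is a list of channels, each an H x W grid.
FeatureMap = List[List[List[float]]]


def weighted_channel_concat(inputs: List[FeatureMap], weights: List[float]) -> FeatureMap:
    """Scale each input by one scalar weight (an assumption) and stack along channels."""
    if len(inputs) != len(weights):
        raise ValueError("expected one weight per input feature map")
    out: FeatureMap = []
    for fmap, w in zip(inputs, weights):
        for channel in fmap:
            out.append([[w * v for v in row] for row in channel])
    return out


def conv1x1(x: FeatureMap, kernel: List[List[float]], bias: List[float]) -> FeatureMap:
    """1x1 convolution: the same C_in -> C_out linear map applied at every pixel."""
    c_in = len(x)
    height, width = len(x[0]), len(x[0][0])
    out: FeatureMap = []
    for k_row, b in zip(kernel, bias):
        if len(k_row) != c_in:
            raise ValueError("each kernel row must have one entry per input channel")
        out.append([
            [b + sum(k_row[c] * x[c][i][j] for c in range(c_in)) for j in range(width)]
            for i in range(height)
        ])
    return out


def wcc_skip(skip: FeatureMap, body: FeatureMap, alpha: float, beta: float,
             kernel: List[List[float]], bias: List[float]) -> FeatureMap:
    """Hypothetical WCC skip: weight and concatenate both paths, then fuse with a 1x1 conv."""
    fused_input = weighted_channel_concat([skip, body], [alpha, beta])
    return conv1x1(fused_input, kernel, bias)


# Usage: one-channel 2x2 maps for the skip (assumed linear) and body (assumed nonlinear) paths.
skip = [[[1.0, 2.0], [3.0, 4.0]]]
body = [[[10.0, 20.0], [30.0, 40.0]]]
out = wcc_skip(skip, body, alpha=1.0, beta=1.0, kernel=[[1.0, 1.0]], bias=[0.0])
print(out)  # [[[11.0, 22.0], [33.0, 44.0]]]
```

With `alpha = beta = 1` and a 1$\times$1 kernel of `[[1.0, 1.0]]`, this sketch reproduces element-wise residual addition exactly, so a residual skip is one special case of a concatenation-plus-1$\times$1 skip; other weights and kernels let the fusion learn different channel mixings of the two paths.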


Urban100

Set5

Manga109