Feature Quantization Improves GAN Training

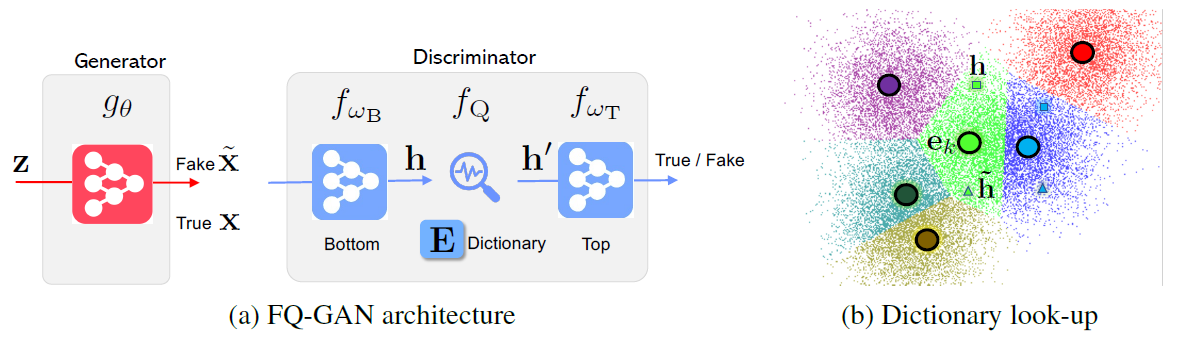

Instability in GAN training has been a long-standing problem despite substantial research effort. We identify that these instability issues stem from the difficulty of performing feature matching with mini-batch statistics, owing to a fragile balance between the fixed target distribution and the progressively changing generated distribution. In this work, we propose Feature Quantization (FQ) for the discriminator, which embeds both real and fake data samples into a shared discrete space. The quantized values of FQ form an evolving dictionary that stays consistent with the feature statistics of the recent distribution history. Hence, FQ implicitly enables robust feature matching in a compact space. Our method can be easily plugged into existing GAN models with little computational overhead in training. We apply FQ to 3 representative GAN models on 9 benchmarks: BigGAN for image generation, StyleGAN for face synthesis, and U-GAT-IT for unsupervised image-to-image translation. Extensive experimental results show that the proposed FQ-GAN improves the FID scores of the baseline methods by a large margin across a variety of tasks, achieving new state-of-the-art performance.
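The abstract describes FQ only at a high level. As a rough illustration, the sketch below assumes a VQ-VAE-style quantizer: each discriminator feature vector is snapped to its nearest dictionary entry, and the dictionary is refreshed with exponential moving averages (EMA) so that it follows the feature statistics of recent batches. The class name `FeatureQuantizer`, its parameters (`num_codes`, `decay`, `eps`, `seed`) and the choice to update the dictionary with both real and fake features are hypothetical; this is not the authors' released code.

```python
import random


class FeatureQuantizer:
    """Hypothetical VQ-style feature quantizer with an EMA-updated dictionary.

    Illustrative sketch only; not the FQ-GAN authors' implementation.
    """

    def __init__(self, num_codes, dim, decay=0.9, eps=1e-5, seed=0):
        rng = random.Random(seed)
        self.decay = decay  # EMA momentum: higher keeps a longer history
        self.eps = eps      # avoids division by zero for rarely used codes
        # Dictionary (codebook): num_codes vectors of length dim.
        self.codebook = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                         for _ in range(num_codes)]
        # EMA statistics per codeword: assignment count and feature sum.
        self.ema_count = [1.0] * num_codes
        self.ema_sum = [list(c) for c in self.codebook]

    @staticmethod
    def sq_dist(a, b):
        """Squared Euclidean distance between two vectors."""
        return sum((x - y) ** 2 for x, y in zip(a, b))

    def assign(self, features):
        """Return the index of the nearest codeword for each feature vector."""
        codes = range(len(self.codebook))
        return [min(codes, key=lambda k: self.sq_dist(f, self.codebook[k]))
                for f in features]

    def quantize(self, features):
        """Snap each feature to its nearest codeword (forward pass only)."""
        idx = self.assign(features)
        return [list(self.codebook[k]) for k in idx], idx

    def update(self, features, idx):
        """EMA update: each codeword tracks the mean of recent features assigned to it."""
        num_codes, dim = len(self.codebook), len(self.codebook[0])
        counts = [0.0] * num_codes
        sums = [[0.0] * dim for _ in range(num_codes)]
        for f, k in zip(features, idx):
            counts[k] += 1.0
            for d in range(dim):
                sums[k][d] += f[d]
        m = self.decay
        for k in range(num_codes):
            self.ema_count[k] = m * self.ema_count[k] + (1 - m) * counts[k]
            self.ema_sum[k] = [m * s + (1 - m) * b
                               for s, b in zip(self.ema_sum[k], sums[k])]
            self.codebook[k] = [s / (self.ema_count[k] + self.eps)
                                for s in self.ema_sum[k]]


if __name__ == "__main__":
    fq = FeatureQuantizer(num_codes=4, dim=2, seed=0)
    real = [[1.0, 1.0], [1.2, 0.9]]   # toy "real" discriminator features
    fake = [[-1.0, 0.5], [0.8, 1.1]]  # toy "fake" discriminator features
    for _ in range(50):
        # Assumption: real and fake features share one dictionary and both update it.
        batch = real + fake
        _, idx = fq.quantize(batch)
        fq.update(batch, idx)
    print(fq.quantize(real)[0])
    print(fq.quantize(fake)[0])
```

Because the dictionary is an EMA over past batches rather than the statistics of the current mini-batch alone, real and fake features are compared against the same slowly moving set of codewords. In an autodiff framework the quantized output would typically be passed on with a straight-through estimator (the feature plus a stop-gradient of the quantization residual), so that gradients still reach the discriminator layers producing the features; plain Python lists carry no gradients, so that step is omitted here.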

Published at ICML 2020.

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Image-to-Image Translation | anime-to-selfie | FQ-GAN | Kernel Inception Distance | 10.23 | #1 |
| Conditional Image Generation | CIFAR-10 | FQ-GAN | Inception Score | 8.50 | #12 |
| Conditional Image Generation | CIFAR-10 | FQ-GAN | FID | 5.34 | #5 |
| Conditional Image Generation | CIFAR-100 | FQ-GAN | Inception Score | 9.74 | #3 |
| Conditional Image Generation | CIFAR-100 | FQ-GAN | FID | 7.15 | #1 |
| Image Generation | FFHQ 1024x1024 | FQ-GAN | FID | 3.19 | #9 |
| Conditional Image Generation | ImageNet 128x128 | FQ-GAN | FID | 13.77 | #17 |
| Conditional Image Generation | ImageNet 128x128 | FQ-GAN | Inception Score | 54.36 | #16 |
| Conditional Image Generation | ImageNet 64x64 | FQ-GAN | FID | 9.67 | #3 |
| Conditional Image Generation | ImageNet 64x64 | FQ-GAN | Inception Score | 25.96 | #4 |
| Image-to-Image Translation | selfie-to-anime | FQ-GAN | Kernel Inception Distance | 11.40 | #1 |

Datasets

CIFAR-10

ImageNet

CIFAR-100

FFHQ

CelebA-HQ

selfie2anime