FedX: Unsupervised Federated Learning with Cross Knowledge Distillation

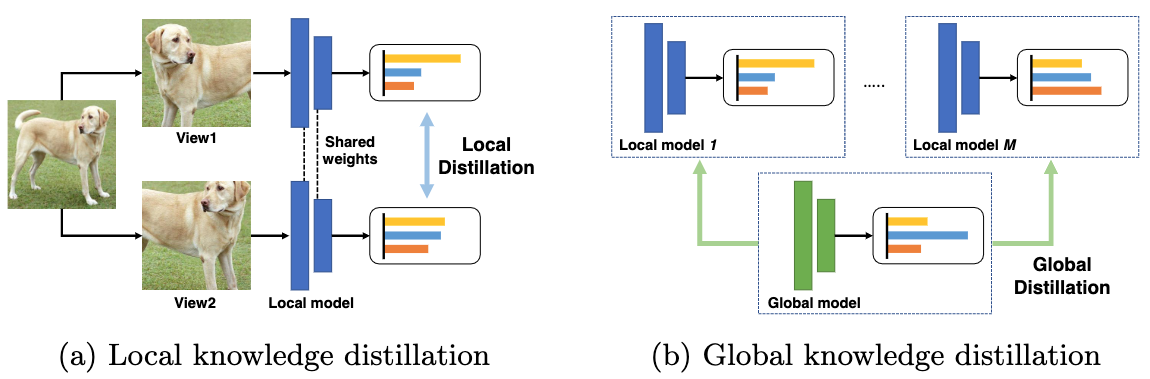

This paper presents FedX, an unsupervised federated learning framework. Our model learns unbiased representations from decentralized and heterogeneous local data. Its core component is two-sided knowledge distillation combined with contrastive learning, which lets the federated system function without requiring clients to share any data features. Furthermore, its adaptable architecture can be used as an add-on module for existing unsupervised algorithms in federated settings. Experiments show that our model improves performance significantly (by 1.58 to 5.52 percentage points) on five unsupervised algorithms.
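To make the idea of pairing contrastive learning with knowledge distillation more concrete, here is a minimal NumPy sketch of how a client-side objective of this kind might be assembled. It is an illustration under stated assumptions, not the paper's exact loss: the function names (`contrastive_loss`, `distillation_loss`, `two_sided_loss`), the temperature value, the choice of KL divergence over similarity distributions, and the unweighted sum of terms are all hypothetical. Only embeddings are compared, and they never leave the client, which is consistent with the abstract's claim that no data features are shared.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def log_softmax(logits):
    """Numerically stable row-wise log-softmax."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def contrastive_loss(anchor, positive, temperature=0.5):
    """InfoNCE-style loss: row i of `anchor` should match row i of `positive`."""
    logits = l2_normalize(anchor) @ l2_normalize(positive).T / temperature
    return -np.mean(np.diag(log_softmax(logits)))


def distillation_loss(student, teacher, reference, temperature=0.5):
    """KL(teacher || student) between similarity distributions over `reference`."""
    ref = l2_normalize(reference)
    log_s = log_softmax(l2_normalize(student) @ ref.T / temperature)
    log_t = log_softmax(l2_normalize(teacher) @ ref.T / temperature)
    return np.mean(np.sum(np.exp(log_t) * (log_t - log_s), axis=1))


def two_sided_loss(local_v1, local_v2, global_v1, reference):
    """Hypothetical combination of a local side and a global side.

    local_v1, local_v2: local-model embeddings of two augmented views.
    global_v1: global-model embeddings of the first view (treated as fixed).
    reference: embeddings of other samples used for relational distillation.
    """
    # Local side: contrast the two views and align their similarity structure.
    local_side = contrastive_loss(local_v1, local_v2) + distillation_loss(
        local_v1, local_v2, reference
    )
    # Global side: pull local embeddings toward the global model's embeddings.
    global_side = contrastive_loss(local_v1, global_v1) + distillation_loss(
        local_v1, global_v1, reference
    )
    return local_side + global_side


# Usage with random stand-in embeddings (batch of 8, dimension 16).
rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))
z2 = z1 + 0.1 * rng.normal(size=(8, 16))   # second view of the same samples
zg = z1 + 0.1 * rng.normal(size=(8, 16))   # global model's view of the samples
zr = rng.normal(size=(8, 16))              # unrelated reference samples
print("two-sided loss:", two_sided_loss(z1, z2, zg, zr))
```

In a real federated round, the global embeddings would come from the model received from the server and would not receive gradients. The sketch only evaluates the loss and omits training, augmentation, and server-side aggregation.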

CIFAR-10

SVHN
