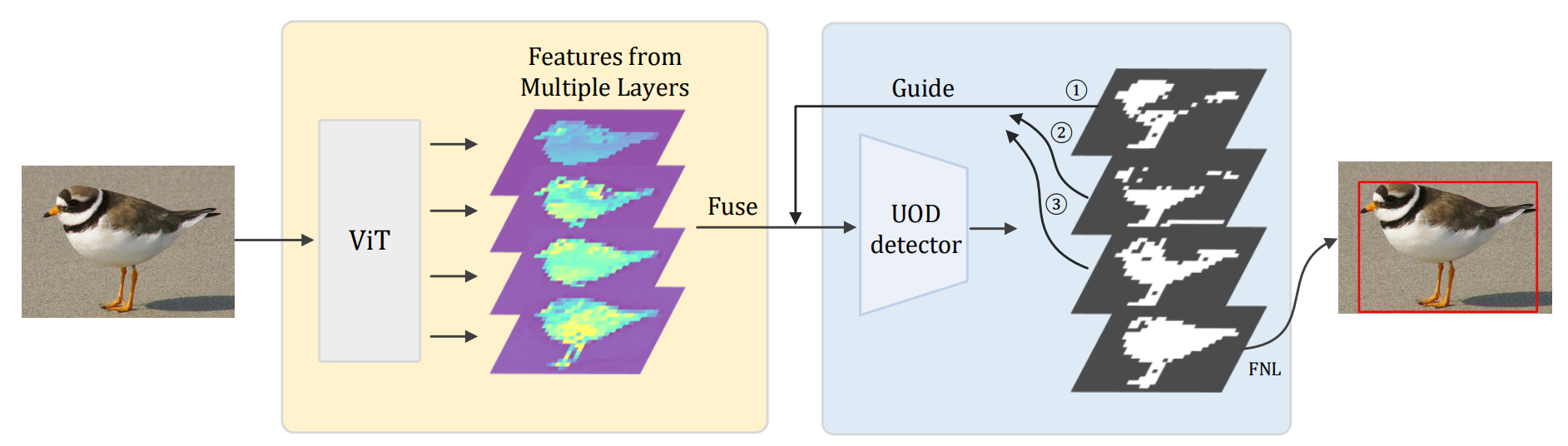

Foreground Guidance and Multi-Layer Feature Fusion for Unsupervised Object Discovery with Transformers

Unsupervised object discovery (UOD) has recently shown encouraging progress with the adoption of pre-trained Transformer features. However, current Transformer-based methods mainly focus on designing the localization head (e.g., seed selection-expansion and normalized cut) and overlook the importance of improving the Transformer features themselves. In this work, we approach the UOD task from the perspective of feature enhancement and propose FOReground guidance and MUlti-LAyer feature fusion for unsupervised object discovery, dubbed FORMULA. First, we present a foreground guidance strategy that uses an off-the-shelf UOD detector to highlight the foreground regions on the feature maps and then refines object locations iteratively. Second, to address scale variation in object detection, we design a multi-layer feature fusion module that aggregates features responding to objects at different scales. Experiments on VOC07, VOC12, and COCO 20k show that the proposed FORMULA achieves new state-of-the-art results on unsupervised object discovery. The code will be released at https://github.com/VDIGPKU/FORMULA.
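The two ideas named in the abstract, foreground guidance and multi-layer feature fusion, can be illustrated with a minimal NumPy sketch. This is not the FORMULA implementation: the function names `fuse_layers` and `apply_foreground_guidance`, the weighted-average fusion rule, and the `boost` factor are all assumptions chosen for illustration. The sketch assumes each Transformer layer yields patch features of shape `[N, D]` and that a coarse foreground mask over the `N` patches is already available from some existing detector.

```python
import numpy as np

def fuse_layers(layer_feats, weights=None):
    """Fuse per-layer patch features, each of shape [N, D].

    Assumption: fusion is a weighted average of L2-normalized features.
    The paper's actual fusion module may differ.
    """
    # Normalize each patch vector so no single layer dominates by magnitude.
    stacked = np.stack([
        f / (np.linalg.norm(f, axis=1, keepdims=True) + 1e-8)
        for f in layer_feats
    ])  # shape [L, N, D]
    if weights is None:
        weights = np.full(len(layer_feats), 1.0 / len(layer_feats))
    weights = np.asarray(weights, dtype=stacked.dtype)
    # Contract the layer axis: sum_l weights[l] * stacked[l].
    return np.tensordot(weights, stacked, axes=1)  # shape [N, D]

def apply_foreground_guidance(feats, fg_mask, boost=2.0):
    """Scale up features of patches flagged as foreground.

    Assumption: `fg_mask` is a boolean array of shape [N]; `boost` is a
    hypothetical scaling factor, not a value from the paper.
    """
    scale = np.where(fg_mask, boost, 1.0)[:, None]
    return feats * scale

# Toy usage: two layers, four patches, three feature dimensions.
rng = np.random.default_rng(0)
layers = [rng.normal(size=(4, 3)), rng.normal(size=(4, 3))]
fused = fuse_layers(layers)
mask = np.array([True, False, False, True])
guided = apply_foreground_guidance(fused, mask)
```

In an iterative scheme like the one the abstract describes, the mask would presumably be re-estimated from the guided features and the guidance step repeated; that loop is omitted here because the abstract does not specify it.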


MS COCO