Frame-Recurrent Video Super-Resolution

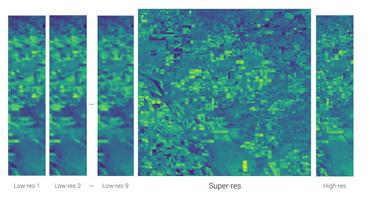

Recent advances in video super-resolution have shown that convolutional neural networks combined with motion compensation are able to merge information from multiple low-resolution (LR) frames to generate high-quality images. Current state-of-the-art methods process a batch of LR frames to generate a single high-resolution (HR) frame and run this scheme in a sliding window fashion over the entire video, effectively treating the problem as a large number of separate multi-frame super-resolution tasks. This approach has two main weaknesses: 1) Each input frame is processed and warped multiple times, increasing the computational cost, and 2) each output frame is estimated independently conditioned on the input frames, limiting the system's ability to produce temporally consistent results. In this work, we propose an end-to-end trainable frame-recurrent video super-resolution framework that uses the previously inferred HR estimate to super-resolve the subsequent frame. This naturally encourages temporally consistent results and reduces the computational cost by warping only one image in each step. Furthermore, due to its recurrent nature, the proposed method has the ability to assimilate a large number of previous frames without increased computational demands. Extensive evaluations and comparisons with previous methods validate the strengths of our approach and demonstrate that the proposed framework is able to significantly outperform the current state of the art.
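The recurrent data flow described in the abstract can be sketched roughly as follows. This is only an illustration of the loop structure, not the authors' implementation: `estimate_flow`, `super_resolve_step`, `upsample_nearest`, the 4x `SCALE`, and the choice to initialise from an upsampled first frame are all hypothetical placeholders standing in for the learned components, which the abstract does not specify.

```python
import numpy as np

SCALE = 4  # upscaling factor; an assumption made for this sketch


def upsample_nearest(image, scale=SCALE):
    """Nearest-neighbour upsampling; stands in for a learned SR network."""
    return np.repeat(np.repeat(image, scale, axis=0), scale, axis=1)


def estimate_flow(lr_prev, lr_cur):
    """Placeholder for a learned flow estimator: zero motion (dy, dx) per LR pixel."""
    return np.zeros(lr_cur.shape + (2,), dtype=np.float32)


def warp(image, flow):
    """Backward-warp a 2D image using an integer-rounded per-pixel flow (dy, dx)."""
    h, w = image.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_y = np.clip(ys + np.rint(flow[..., 0]).astype(int), 0, h - 1)
    src_x = np.clip(xs + np.rint(flow[..., 1]).astype(int), 0, w - 1)
    return image[src_y, src_x]


def super_resolve_step(lr_cur, hr_prev_warped):
    """Placeholder fusion: average the upsampled LR frame with the warped estimate."""
    return 0.5 * upsample_nearest(lr_cur) + 0.5 * hr_prev_warped


def frame_recurrent_sr(lr_frames):
    """Run the recurrent loop; returns the HR estimates and the number of warps."""
    lr_prev = lr_frames[0]
    hr_prev = upsample_nearest(lr_prev)  # assumed initialisation for the first frame
    outputs, num_warps = [hr_prev], 0
    for lr_cur in lr_frames[1:]:
        flow_lr = estimate_flow(lr_prev, lr_cur)
        # Bring the flow to HR resolution: repeat spatially and scale the vectors.
        flow_hr = SCALE * upsample_nearest(flow_lr)
        hr_prev_warped = warp(hr_prev, flow_hr)  # the single warp of this step
        num_warps += 1
        hr_prev = super_resolve_step(lr_cur, hr_prev_warped)
        outputs.append(hr_prev)
        lr_prev = lr_cur
    return outputs, num_warps


if __name__ == "__main__":
    frames = [np.full((4, 4), float(i)) for i in range(3)]
    hr_frames, warps = frame_recurrent_sr(frames)
    print(len(hr_frames), hr_frames[0].shape, warps)
```

Each step warps only the previous HR estimate, so the number of warps grows by one per output frame regardless of how many earlier frames have contributed to that estimate. A sliding-window scheme would instead warp several neighbouring LR frames for every output.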

CVPR 2018

Results from the Paper

Ranked #6 on Video Super-Resolution on MSU Video Upscalers: Quality Enhancement (VMAF metric)


| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Video Super-Resolution | MSU Video Upscalers: Quality Enhancement | FRVSR | PSNR | 27.23 | # 37 |
| Video Super-Resolution | MSU Video Upscalers: Quality Enhancement | FRVSR | SSIM | 0.936 | # 43 |
| Video Super-Resolution | MSU Video Upscalers: Quality Enhancement | FRVSR | VMAF | 57.14 | # 6 |
| Video Super-Resolution | Vid4 - 4x upscaling - BD degradation | FRVSR | PSNR | 26.69 | # 17 |
| Video Super-Resolution | Vid4 - 4x upscaling - BD degradation | FRVSR | SSIM | 0.8103 | # 17 |
